Concept

The challenge of executing a large block of securities is an exercise in managing a fundamental market paradox. A large order represents significant intent, and the very act of expressing that intent through trading activity inevitably perturbs the market, creating an adverse price impact that increases execution costs. The traditional approach to this problem has been to design static or semi-static execution algorithms, such as Time-Weighted Average Price (TWAP) or Volume-Weighted Average Price (VWAP), which slice the order into smaller pieces and execute them according to a predetermined schedule. These are open-loop systems; they execute a plan without meaningfully incorporating the market’s real-time reaction to their own actions.
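To make the open-loop character of these schedules concrete, the following minimal sketch computes TWAP and VWAP child-order sizes before trading starts. The function names and the volume profile are illustrative assumptions, not part of any particular trading system; the point is that nothing in either schedule depends on what the market does once execution begins.

```python
from typing import List


def twap_schedule(total_shares: int, n_slices: int) -> List[int]:
    """Split an order into near-equal child orders, one per time bucket."""
    base, extra = divmod(total_shares, n_slices)
    # Spread the remainder one share at a time over the first buckets.
    return [base + (1 if i < extra else 0) for i in range(n_slices)]


def vwap_schedule(total_shares: int, volume_profile: List[float]) -> List[int]:
    """Split an order in proportion to an expected intraday volume profile."""
    total_volume = sum(volume_profile)
    targets = [total_shares * v / total_volume for v in volume_profile]
    sizes = [int(x) for x in targets]
    # Give shares lost to truncation to the buckets with the largest
    # fractional parts, so the child orders sum to the parent order.
    leftover = total_shares - sum(sizes)
    by_fraction = sorted(
        range(len(targets)), key=lambda i: targets[i] - sizes[i], reverse=True
    )
    for i in by_fraction[:leftover]:
        sizes[i] += 1
    return sizes
```

For example, `twap_schedule(10, 4)` returns `[3, 3, 2, 2]`, while `vwap_schedule` weights the slices toward the buckets where the assumed profile expects more volume.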

Reinforcement Learning (RL) introduces a fundamentally different operational paradigm. It configures the execution problem as a closed-loop control system, where an autonomous agent learns a dynamic policy to achieve a specific objective within a complex, stochastic environment. This agent’s purpose is to optimize the entire sequence of decisions that constitute the trade execution lifecycle.

Its function extends far beyond the simple prediction of market impact based on historical data. The RL agent actively learns from the consequences of its actions, adapting its strategy in response to observed market conditions, fill rates, and the very impact it generates.

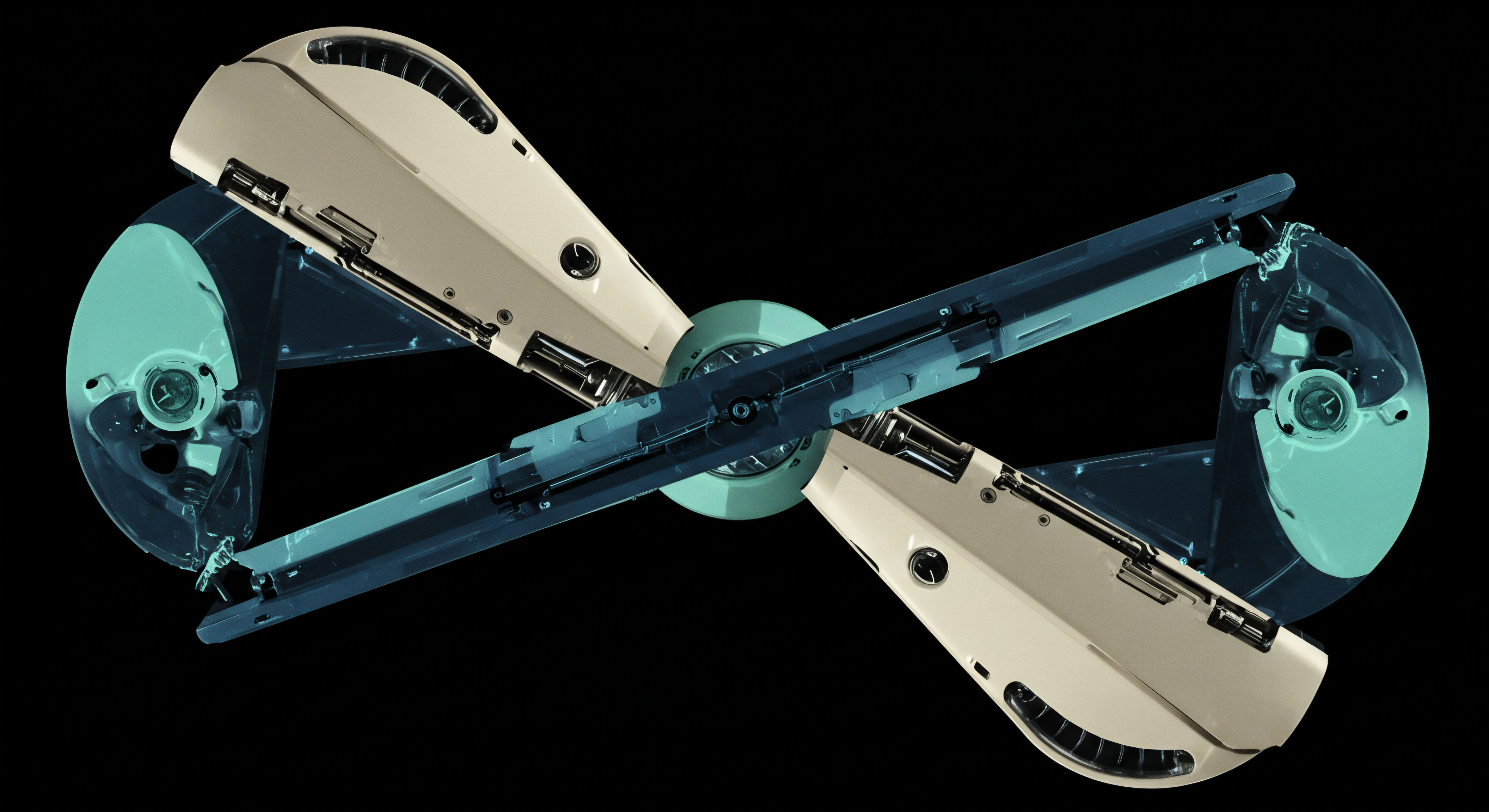

Reinforcement Learning transforms block execution from a pre-scripted process into a dynamic, adaptive system that learns to navigate market microstructure in real time.

The lifecycle in question encompasses three distinct phases, each of which presents a unique optimization surface for an RL agent.

- Pre-Trade Strategy Formulation: The initial phase involves defining the high-level parameters of the execution. An RL framework can be trained to suggest optimal execution horizons or aggression levels based on the security’s volatility profile, the prevailing liquidity conditions, and the size of the order relative to average daily volume. It learns from vast datasets of prior executions which initial conditions correlate with superior outcomes.

- Intra-Trade Dynamic Control: This is the core of the RL agent’s operational domain. During the execution window, the agent makes a sequence of decisions. At each discrete time step, it observes the state of the market (liquidity in the order book, recent price action, fill confirmations) and takes an action. This action could be to submit a child order of a specific size to a particular venue at a certain price, or it could be to wait, strategically withholding liquidity to allow the market to recover. Each action’s outcome informs the next decision, creating an adaptive pathway through the execution timeline.

- Post-Trade Policy Refinement: After the parent order is filled, the execution data is fed back into the training process. The agent’s performance is measured against a defined objective, typically implementation shortfall (the difference between the decision price and the final average execution price). This feedback loop allows the agent’s underlying neural network to update its parameters, refining its policy to perform better in similar future scenarios. The system perpetually learns and improves its execution logic.
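The implementation shortfall measure used in the post-trade phase can be sketched in a few lines. This is a simplified version that follows the definition given above (decision price versus average execution price) and expresses the result in basis points; it assumes the parent order is fully filled and ignores fees and the opportunity cost of unexecuted shares. The function name and argument layout are illustrative.

```python
from typing import List, Tuple


def implementation_shortfall_bps(
    decision_price: float, fills: List[Tuple[float, int]], side: int
) -> float:
    """Shortfall of the average fill price versus the decision price, in bps.

    fills is a list of (price, shares); side is +1 for a buy, -1 for a sell.
    A positive result means the execution was worse than the decision price.
    """
    total_shares = sum(shares for _, shares in fills)
    if total_shares == 0:
        raise ValueError("no fills to evaluate")
    avg_price = sum(price * shares for price, shares in fills) / total_shares
    return side * (avg_price - decision_price) / decision_price * 1e4
```

A buy order decided at 100.00 and filled as 600 shares at 100.10 plus 400 shares at 100.20 has an average price of 100.14, which is a shortfall of 14 basis points.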

This capacity for continuous, data-driven policy improvement is what sets the RL approach apart. Traditional algorithms operate on a fixed model of the world. An RL agent builds and refines its own model of market dynamics, learning the subtle, second-order effects of its behavior. It can, for instance, learn to recognize patterns of predatory algorithmic activity and adjust its own placement strategy to avoid being detected.

It can learn to opportunistically source liquidity during periods of high volume while remaining passive when its own activity might signal its intent. The objective is a holistic optimization of the execution path, a task for which the adaptive, sequential decision-making framework of RL is well suited.

Strategy

Implementing a Reinforcement Learning agent for block execution requires the construction of a precise mathematical framework. This framework translates the abstract goal of “optimal execution” into a solvable control problem. The core components are the agent, the environment, the state representation, the action space, and the reward function. The strategic sophistication of the entire system is encoded within the design of these elements.

The Core RL Components in a Trading Context

The system operates as a continuous cycle. The agent observes the market environment’s current state, takes an action based on its learned policy, receives a reward, and observes the new state. This loop repeats until the execution is complete.

- The Agent is the decision-making algorithm, typically a deep neural network, that embodies the execution policy. This policy is a probabilistic map, prescribing the likelihood of taking any possible action given the current state.

- The Environment is the market itself, a complex and non-stationary system. It includes the limit order book, the public feed of executed trades, and the actions of all other market participants. The agent’s actions directly influence the environment, a critical feedback mechanism that RL is designed to handle.

- The State Representation is the set of data points the agent uses to make decisions. This is a critical design choice, as it defines the agent’s perception of the market. An effective state must be comprehensive yet concise, capturing the information needed for effective decision-making without creating an unmanageably large input space for the neural network.

- The Action Space defines the universe of possible moves the agent can make at any time step. A well-designed action space provides the agent with sufficient flexibility to navigate market conditions without being so large as to make the learning process intractable.

- The Reward Function is the scalar feedback signal that guides the learning process. It is the quantitative expression of the execution goal. The agent’s objective is to learn a policy that maximizes the cumulative reward over the entire execution horizon.
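The observe, act, reward cycle described above can be sketched with a toy environment. Everything here is a deliberately simplified assumption for illustration: the class `ToyExecutionEnv`, its random-walk mid price, and its linear temporary impact are hypothetical stand-ins for a real market simulator, and the policy is any function from state to action rather than a trained network.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyExecutionEnv:
    """Hypothetical toy market for a buy order (not a realistic simulator)."""
    total_shares: int = 10_000
    horizon: int = 20
    impact_per_share: float = 1e-5  # assumed price concession per share
    volatility: float = 0.02        # assumed per-step std of the mid price
    seed: int = 0

    def reset(self):
        self.rng = random.Random(self.seed)
        self.mid = 100.0
        self.arrival = self.mid
        self.remaining = self.total_shares
        self.t = 0
        return self._state()

    def _state(self):
        # State: fraction of the order left, fraction of the time left.
        return (self.remaining / self.total_shares, 1.0 - self.t / self.horizon)

    def step(self, fraction):
        """Buy `fraction` of the remaining shares; return (state, reward, done)."""
        self.t += 1
        last_step = self.t >= self.horizon
        # Force completion on the final step so the order is always filled.
        shares = self.remaining if last_step else int(self.remaining * fraction)
        fill_price = self.mid + self.impact_per_share * shares
        # Reward is the negative cost relative to the arrival price.
        reward = -(fill_price - self.arrival) * shares
        self.remaining -= shares
        self.mid += self.rng.gauss(0.0, self.volatility)
        done = last_step or self.remaining == 0
        return self._state(), reward, done


def run_episode(env, policy):
    """Run the agent-environment loop until the execution is complete."""
    state, done, total_reward = env.reset(), False, 0.0
    while not done:
        action = policy(state)                  # agent acts on the observed state
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward                  # cumulative reward to maximize
    return total_reward
```

Calling `run_episode(ToyExecutionEnv(), lambda state: 0.1)` runs one episode with a fixed "trade 10% of what remains" rule; a learning algorithm would replace that rule with a policy updated from the observed rewards.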

Designing the State and Action Spaces

The performance of an RL agent is heavily dependent on the quality of its state and action representations. These must be engineered with a deep understanding of market microstructure.

State-Space Features

The state is a vector of normalized numerical values that should include:

- Inventory Information: The percentage of the initial order remaining to be executed and the time remaining in the execution window.

- Market Microstructure Data: Key features from the limit order book, such as the bid-ask spread, the volume imbalance between the bid and ask sides, and the depth of liquidity at several price levels.

- Recent Market Activity: Price volatility over a short-term lookback window, the volume of recent trades, and the agent’s own recent fill rates.

- Execution History: The agent’s average execution price thus far and the market’s price movement since the start of the order.
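As a rough illustration of how these feature groups might be assembled, the sketch below builds a small state vector from top-of-book data. The function `build_state`, its argument names, and the particular scalings (fractions and basis points) are assumptions made for this example; a production state would typically add deeper book levels, traded volume, and fill rates.

```python
from statistics import pstdev
from typing import List, Sequence


def build_state(
    remaining_shares: int, total_shares: int,
    elapsed_s: float, horizon_s: float,
    best_bid: float, best_ask: float,
    bid_size: float, ask_size: float,
    recent_returns: Sequence[float],
    avg_fill_price: float, arrival_price: float,
) -> List[float]:
    """Assemble a compact, roughly unit-scaled state vector."""
    mid = 0.5 * (best_bid + best_ask)
    # Inventory information.
    inventory_left = remaining_shares / total_shares
    time_left = 1.0 - elapsed_s / horizon_s
    # Market microstructure data from the top of the book.
    spread_bps = (best_ask - best_bid) / mid * 1e4
    imbalance = (bid_size - ask_size) / (bid_size + ask_size)
    # Recent market activity: volatility of short-horizon returns.
    volatility = pstdev(recent_returns) if len(recent_returns) > 1 else 0.0
    # Execution history relative to the arrival price.
    slippage_bps = (avg_fill_price - arrival_price) / arrival_price * 1e4
    drift_bps = (mid - arrival_price) / arrival_price * 1e4
    return [inventory_left, time_left, spread_bps, imbalance,
            volatility, slippage_bps, drift_bps]
```

Expressing prices as relative quantities rather than raw levels is one way to keep the same network usable across securities with very different price scales.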

The design of the reward function is the most critical element, as it mathematically defines the strategic objective for the autonomous agent.

The Central Role of the Reward Function

Crafting the reward function is where the strategic objectives of the trading desk are encoded into the agent’s behavior. A simple function might only penalize slippage. A more sophisticated function balances multiple, often competing, goals. For instance, a reward function might grant a positive reward for executing shares at a price better than the current mid-price but apply a penalty proportional to the adverse price movement caused by the trade.

This presents a complex trade-off: executing a large quantity now might secure a good price but risks pushing the market away, making subsequent fills more expensive. This is the very dilemma the RL agent must learn to resolve. An improperly specified reward function can lead to catastrophic failures, such as the agent learning to withhold its order entirely to avoid any impact, thereby failing to execute. Specifying the function is difficult because it requires quantifying the value of speed versus cost, and of certainty versus opportunity, in a form the agent can optimize.
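One possible shape for such a reward is sketched below. It follows the example in the text (a reward for price improvement against the mid-price, a penalty for adverse price movement) and adds a terminal penalty on unexecuted shares to discourage the "never trade" failure mode. The function names, the `impact_weight` parameter, and the 50 basis point penalty are illustrative assumptions, not recommended values.

```python
def step_reward(
    side: int, shares: int, fill_price: float,
    mid_before: float, mid_after: float, impact_weight: float = 1.0,
) -> float:
    """Per-step reward; side is +1 for a buy, -1 for a sell."""
    # Positive when the fill is better than the mid-price at decision time.
    price_improvement = side * (mid_before - fill_price) * shares
    # Only price moves against the order count as adverse.
    adverse_move = max(0.0, side * (mid_after - mid_before))
    return price_improvement - impact_weight * adverse_move * shares


def terminal_penalty(
    remaining_shares: int, mid_price: float, penalty_bps: float = 50.0
) -> float:
    """Penalty applied at the end of the horizon for unexecuted shares."""
    return -remaining_shares * mid_price * penalty_bps / 1e4
```

The relative size of `impact_weight` and `penalty_bps` is where the desk's preference between cost and certainty of completion would be expressed; in practice these weights are tuned and validated in simulation.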

| Paradigm | Methodology | Market Responsiveness | Optimization Goal | Primary Weakness |
|---|---|---|---|---|
| Static Scheduling | Executes child orders on a preset schedule: equal slices over time for TWAP, slices proportional to an expected volume profile for VWAP. | None. Follows a pre-set schedule regardless of market conditions. | Match a benchmark price (TWAP or VWAP). | Fails to adapt to liquidity opportunities or risks. |
| Predictive Models | Uses a market impact model to create an optimal static schedule upfront. | Low. The schedule is fixed pre-trade based on historical data. | Minimize predicted implementation shortfall. | The underlying impact model may be inaccurate or outdated. |
| Reinforcement Learning | Learns a dynamic policy to choose actions based on real-time market state. | High. Actions are a direct function of live market feedback. | Maximize a cumulative reward function (e.g. minimize total cost). | Requires extensive training data and careful reward function design. |

Execution

The operational deployment of a Reinforcement Learning execution system is a significant engineering undertaking that moves from theoretical models to live trading infrastructure. This process requires a robust data pipeline, a high-fidelity simulation environment for training, and a carefully architected production system with stringent risk management overlays.

The Data and Simulation Foundation

An RL agent learns from experience, and in the context of financial markets, this experience must be generated in a simulated environment before the agent is exposed to real capital. The quality of this simulation is paramount to the agent’s ultimate performance. A simplistic backtester that ignores the second-order effects of the agent’s own trades will produce a policy that is dangerously overfitted and naive to the realities of market impact and information leakage.

A high-fidelity market simulator must be built, one that can reconstruct the limit order book from historical data and model how the agent’s orders would have affected the book and subsequent trades. This requires tick-by-tick, full-depth order book data, which is a massive dataset. The simulation environment becomes the agent’s training ground, where it can execute millions of orders across thousands of different historical market scenarios to learn a robust policy.
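The most basic ingredient of such a simulator is matching an aggressive order against reconstructed book depth. The sketch below "walks the book" for a marketable buy order; it is a minimal illustration that assumes a static snapshot with no replenishment and no reaction from other participants, which is exactly the kind of second-order effect a high-fidelity simulator would need to add. The function name and data layout are assumptions.

```python
from typing import List, Tuple

Level = Tuple[float, int]  # (price, displayed size)


def walk_the_book(
    ask_levels: List[Level], shares: int
) -> Tuple[float, int, List[Level]]:
    """Fill a marketable buy order against ask levels sorted best-first.

    Returns (average fill price, shares filled, ask side after the trade).
    """
    remaining, cost = shares, 0.0
    book_after: List[Level] = []
    for price, size in ask_levels:
        take = min(size, remaining)
        cost += take * price
        remaining -= take
        if size > take:
            # Keep whatever displayed size the order did not consume.
            book_after.append((price, size - take))
    filled = shares - remaining
    avg_price = cost / filled if filled else float("nan")
    return avg_price, filled, book_after
```

Buying 600 shares against asks of 200 at 100.01, 300 at 100.02, and 1000 at 100.05 consumes the first two levels entirely and leaves 900 shares at 100.05, so the order's own footprint is visible in the book the agent observes next.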

| Stage | Process | Input Data | Output | Key Considerations |
|---|---|---|---|---|
| Ingestion | Collection and storage of raw market data. | Tick-by-tick L2 order book data, trade data, news feeds. | Time-series database of market events. | Data completeness, timestamp accuracy (nanoseconds), storage volume. |
| Preprocessing | Cleaning, normalizing, and structuring data. | Raw market events. | Cleaned, sessionized data logs. | Handling of data gaps, exchange-specific anomalies, order book reconstruction. |
| Feature Engineering | Creation of the state vector from processed data. | Cleaned data logs. | Normalized feature vectors for training. | Feature selection, normalization techniques, computational efficiency. |
| Simulation | Training the agent in a simulated market environment. | Feature vectors and historical event logs. | A trained neural network policy. | Simulator fidelity, modeling of market impact, computational cost of training. |
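For the feature engineering stage, one common normalization choice is a per-feature z-score. The sketch below is a minimal version using only the standard library; the class name `ZScoreNormalizer` is an assumption for this example, and other schemes (min-max scaling, rank transforms, rolling windows) are equally plausible.

```python
from statistics import mean, pstdev
from typing import List, Sequence


class ZScoreNormalizer:
    """Per-feature z-score scaling fitted on a training window."""

    def fit(self, rows: Sequence[Sequence[float]]) -> "ZScoreNormalizer":
        columns = list(zip(*rows))
        self.means = [mean(col) for col in columns]
        # Fall back to 1.0 for constant features to avoid division by zero.
        self.stds = [pstdev(col) or 1.0 for col in columns]
        return self

    def transform(self, row: Sequence[float]) -> List[float]:
        return [(x - m) / s for x, m, s in zip(row, self.means, self.stds)]
```

Fitting the statistics on the training window only, and reusing them unchanged at evaluation time, avoids leaking future information into the features the agent sees.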

Action Space Granularity and Control

The “action” an agent takes is a multi-dimensional decision. A production-grade system requires a discrete yet expressive action space that gives the agent meaningful control over its execution footprint.

- Order Sizing: The agent typically does not choose a raw share quantity. Instead, it might select from a discrete set of options, such as “execute 2% of remaining volume,” “execute 5%,” or “execute 10%.” This prevents the agent from making erratic, large-scale order submissions.

- Pricing and Aggression: The agent must decide how aggressively to pursue liquidity. The action space could include options like “place a passive limit order at the best bid,” “place a mid-price peg,” or “cross the spread and take liquidity with a market order.” Each choice carries a different trade-off between impact and certainty of execution.

- Venue Selection: In a fragmented market, the agent could be empowered to choose where to route its order. This could involve selecting a specific lit exchange or a dark pool, a decision that depends on the desired trade-off between transparency, fees, and potential for price improvement.

- The Null Action: A critical component of the action space is the ability to do nothing. The decision to wait and observe the market is a strategic one, and the agent must learn when it is optimal to be patient.
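A discrete action space built from these dimensions can be enumerated directly, which gives the policy network a fixed number of outputs. The sketch below uses the sizing and aggression options named above; the venue labels and the `Action` type are illustrative assumptions, and a real system would map each entry to concrete order parameters.

```python
from itertools import product
from typing import List, NamedTuple, Optional

SIZE_FRACTIONS = (0.02, 0.05, 0.10)  # share of remaining volume
AGGRESSION = ("passive_limit", "mid_peg", "market")
VENUES = ("lit", "dark")             # hypothetical venue labels


class Action(NamedTuple):
    size_fraction: float
    aggression: str
    venue: str


# Index 0 is the null action (wait); the rest are all combinations.
ACTIONS: List[Optional[Action]] = [None] + [
    Action(*combo) for combo in product(SIZE_FRACTIONS, AGGRESSION, VENUES)
]


def decode(action_index: int) -> Optional[Action]:
    """Map a policy output index to an action; None means do nothing."""
    return ACTIONS[action_index]
```

With three sizes, three aggression levels, and two venues this yields 19 actions including the null action. Each added dimension multiplies the count, which is the tractability concern raised earlier about action-space design.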

System Integration and Risk Overlays

The trained RL agent does not operate in a vacuum. It must be integrated into the firm’s existing Order Management System (OMS) and Execution Management System (EMS). The agent’s desired actions are translated into FIX protocol messages that are sent to the execution venues. This entire process must be governed by a separate, hard-coded risk management layer.

This layer acts as a final check on the agent’s decisions, ensuring they do not violate pre-defined limits. These are absolute rules, not learned policies.

The live RL agent operates as a sophisticated guidance system, but it is always constrained by a non-negotiable, rules-based risk management framework.

This risk framework includes constraints such as:

- Maximum Order Size: No single child order can exceed a certain number of shares or a percentage of the parent order.

- Price Deviation: Orders cannot be placed at prices that are unreasonably far from the current market price (for example, the NBBO in US equities).

- Kill Switch: A human trader must always have the ability to immediately halt the algorithm, cancel all open orders, and take manual control if the agent behaves erratically or if market conditions become unprecedented.
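These constraints can be expressed as a plain, deterministic check that sits between the agent and the order gateway. The sketch below is illustrative only: the limit values, the `RiskLimits` and `check_order` names, and the use of the best bid and ask midpoint as the reference price are assumptions, not a description of any specific firm's controls.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RiskLimits:
    max_child_shares: int = 5_000       # assumed absolute size cap
    max_parent_fraction: float = 0.10   # assumed cap versus the parent order
    max_deviation_bps: float = 50.0     # assumed price collar


def check_order(
    shares: int, limit_price: float, parent_shares: int,
    best_bid: float, best_ask: float,
    limits: RiskLimits, kill_switch: bool = False,
) -> Tuple[bool, str]:
    """Hard-coded pre-trade check; returns (approved, reason)."""
    if kill_switch:
        return False, "kill switch engaged"
    if shares <= 0:
        return False, "non-positive size"
    if shares > limits.max_child_shares:
        return False, "exceeds max child shares"
    if shares > limits.max_parent_fraction * parent_shares:
        return False, "exceeds max fraction of parent order"
    mid = 0.5 * (best_bid + best_ask)
    deviation_bps = abs(limit_price - mid) / mid * 1e4
    if deviation_bps > limits.max_deviation_bps:
        return False, "price too far from market"
    return True, "ok"
```

Because the check contains no learned parameters, its behavior can be reviewed and tested independently of the agent, and an order it rejects never reaches a venue regardless of what the policy proposes.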

The execution of an RL strategy is therefore a synthesis of machine intelligence and human oversight. The agent provides a level of micro-scale, adaptive decision-making that is impossible for a human to replicate, while the human trader provides the high-level strategic context and ultimate risk control. The system is designed to optimize the execution lifecycle within a safety envelope defined by human expertise.

Reflection

The integration of Reinforcement Learning into the block trading lifecycle represents a significant evolution in operational capability. It reframes the fundamental task from one of following a predetermined path to one of navigating a dynamic landscape. The system’s value is derived from its ability to learn and adapt to the complex, reflexive nature of modern financial markets, where every action elicits a reaction. An execution policy learned through this process is not merely a set of rules; it is a highly refined intuition, encoded in a neural network and honed across millions of simulated trades.

This prompts a critical examination of an institution’s existing execution framework. Is the current system built to react to market data, or is it designed to learn from it? The transition to an RL-based approach is a commitment to building a learning organization, where post-trade analysis becomes a direct, automated input into the continuous improvement of future execution strategy. The ultimate objective is an operational architecture that perpetually enhances its own capital efficiency and execution quality, creating a durable, systemic advantage.
