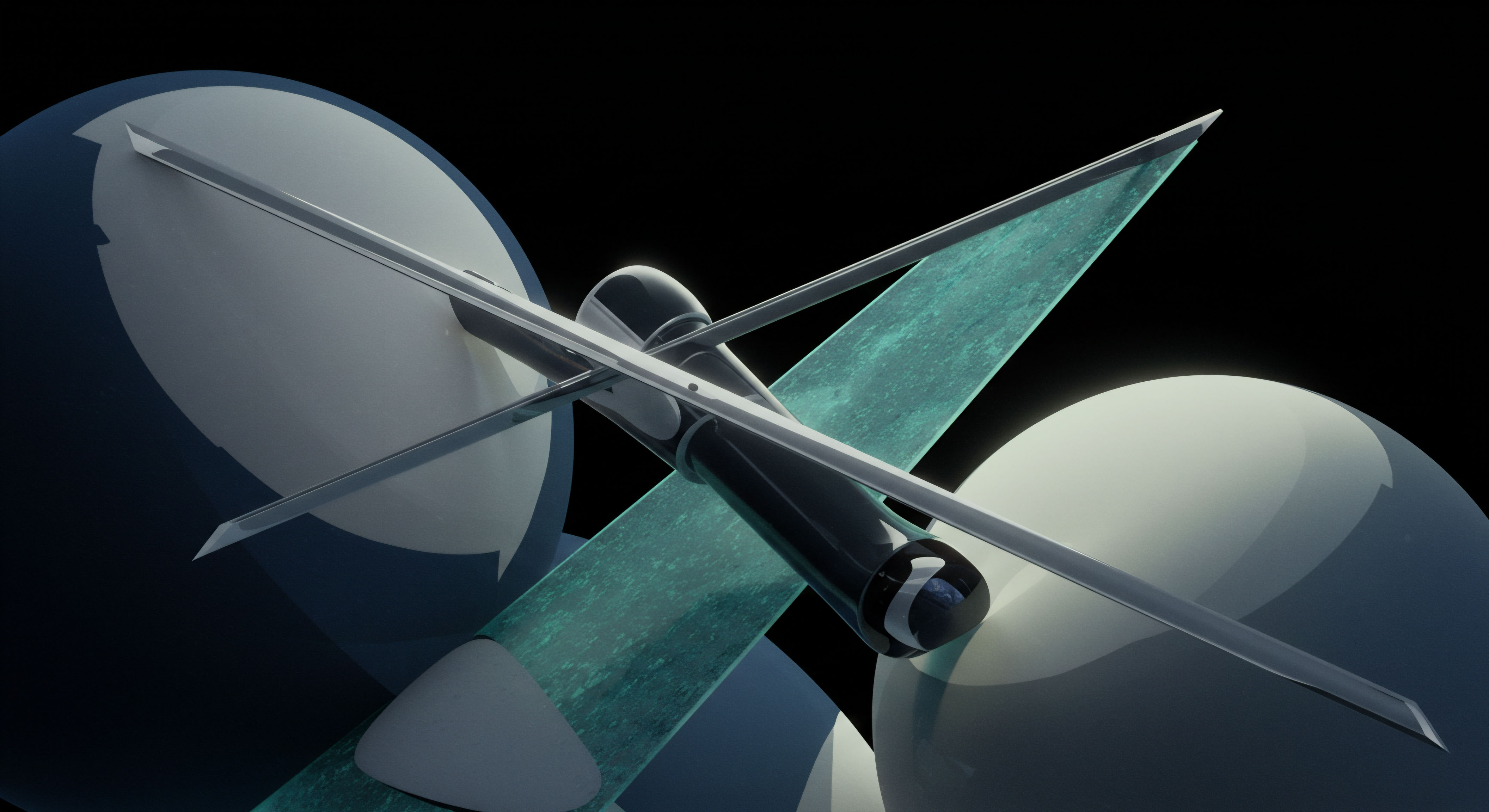

Concept

The core challenge for any quantitative trading system is one of signal integrity. The system’s primary function is to interpret a torrent of market data and isolate predictive patterns, or alpha signals, that indicate future price movements. These are the intentional outputs of a rigorous research and modeling process. An entirely different class of signal exists simultaneously within the same data stream: unintentional information leakage.

This leakage represents the unintended trail of electronic footprints left by other market participants, often large institutions, as they execute their own strategies. The ability of a machine learning model to differentiate between these two signal classes forms the bedrock of its profitability and robustness.

An intentional alpha signal is a deliberately engineered construct. It is the result of a hypothesis about market behavior, tested and validated against historical data. It could be derived from a complex analysis of order book imbalances, a novel alternative dataset, or the subtle interplay of macroeconomic indicators.

Its value lies in its predictive power and its statistical edge, however slight. The system is designed to hunt for this specific signature in the noise of the market.

A system’s primary function is to interpret market data, isolating predictive alpha signals from the noise of unintentional information leakage.

Unintentional leakage is a byproduct of market participation. A large pension fund executing a billion-dollar order, for example, cannot do so instantaneously without massive market impact. It must break the order into smaller pieces, executing them over time. This activity, however carefully managed, creates patterns.

These patterns are not designed to be predictive in the same way as an alpha signal; they are artifacts of operational necessity. They are signals of presence and intent from another entity, and while they can be exploited for short-term profit, they represent a different kind of opportunity fraught with its own risks, like adverse selection.

Machine learning models provide the apparatus to make this distinction at scale and speed. They operate as a sophisticated filtering mechanism, trained to recognize the distinct structural characteristics of each signal type. A model can learn that a genuine alpha signal might have a complex, non-linear relationship with future returns and be preceded by a specific set of microstructural events. In contrast, it might learn that leakage is often characterized by a simpler pattern of persistent, one-sided order flow in a specific security, often accompanied by widening spreads as market makers react to the pressure.

The differentiation is achieved not by looking at a single data point, but by analyzing the context, the history, and the multi-dimensional signature of the market activity. This is a problem of pattern recognition in a high-dimensional, noisy, and adversarial environment.

Strategy

Developing a strategic framework to separate alpha from leakage requires a multi-layered approach that integrates data acquisition, feature engineering, and sophisticated model selection. The objective is to build a system that understands the genesis of a signal, allowing it to classify market events based on their likely origin: either a proprietary analytical edge or the mechanical execution of a large external order.

Data as the Foundation of Differentiation

The entire strategy rests upon the breadth and granularity of the input data. A model cannot distinguish between signal types without the raw information to detect their subtle differences. The data strategy is therefore partitioned into several key domains:

- Core Market Data: This includes high-resolution order book data (Level 2/Level 3), trade prints (tick data), and reference data. This provides the microstructural context for every market event, revealing the state of liquidity and the immediate impact of trading activity.

- Execution Data: The system’s own order and execution records are a critical source. Analyzing the market’s reaction to the system’s own trades provides a feedback loop for understanding market impact and detecting the presence of other large players.

- Alternative Data: This is a vast category that includes satellite imagery, credit card transactions, news sentiment analysis, and social media trends. These datasets are often the source of genuine alpha signals because they provide information orthogonal to price and volume, offering a view into economic activity before it is reflected in the market.

- Natural Language Data: Text from news feeds, regulatory filings, or even internal communications can be processed to identify information that could constitute leakage or a new alpha source.

Feature Engineering: The Heart of the Matter

Raw data is insufficient. The strategy’s core intellectual property resides in its feature engineering, the process of transforming raw data into predictive variables that expose the underlying character of a market event. The goal is to create features that are highly sensitive to one signal type while remaining neutral to the other.

The table below outlines a conceptual framework for engineering distinct feature sets for alpha and leakage.

| Feature Category | Alpha Signal Indicators (Hypothesis-Driven) | Leakage Indicators (Execution-Artifact-Driven) |
|---|---|---|
| Temporal Patterns | Non-linear decay of predictive power over a specific forecast horizon. Often linked to an external event or data release. | High autocorrelation in order flow (persistent buying/selling). Rhythmic patterns corresponding to common execution algorithms (e.g., VWAP). |
| Cross-Asset Relationships | Complex correlations across economically linked assets (e.g., a signal in a commodity futures contract predicting movement in an equities sector). | Anomalous volume in a single stock without corresponding activity in its sector or related derivatives. A sign of idiosyncratic pressure. |
| Order Book Dynamics | Changes in the shape of the order book that precede price moves, indicating a shift in informed trader sentiment. | Depletion of liquidity on one side of the book. A widening bid-ask spread in response to persistent, one-sided orders. |
| Alternative Data Linkage | A spike in a custom news sentiment score for a company, followed by abnormal returns. | No corresponding signal in any alternative data source, suggesting the activity is purely mechanical. |
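Two of the leakage indicators in the table, one-sided order flow and autocorrelated order flow, are simple enough to sketch directly. The snippet below is a minimal illustration, not a production feature engine: the function names and the toy signed-volume series are assumptions made for the example, and positive values are taken to mean buyer-initiated trades.

```python
from statistics import mean


def order_flow_imbalance(signed_volumes):
    """(buy volume - sell volume) / total volume, a value in [-1, 1]."""
    buys = sum(v for v in signed_volumes if v > 0)
    sells = -sum(v for v in signed_volumes if v < 0)
    total = buys + sells
    return 0.0 if total == 0 else (buys - sells) / total


def trade_signs(signed_volumes):
    """Reduce each trade to its direction: +1 buy, -1 sell, 0 if no volume."""
    return [(v > 0) - (v < 0) for v in signed_volumes]


def lag1_autocorrelation(xs):
    """Sample lag-1 autocorrelation; 0.0 for constant or too-short series."""
    if len(xs) < 2:
        return 0.0
    m = mean(xs)
    denom = sum((x - m) ** 2 for x in xs)
    if denom == 0:
        return 0.0
    num = sum((xs[i] - m) * (xs[i + 1] - m) for i in range(len(xs) - 1))
    return num / denom


# Hypothetical signed trade volumes (+ = buyer-initiated, - = seller-initiated).
persistent_flow = [100, 100, 100, 100, 100, 100, -100, -100]  # long buy run
two_sided_flow = [100, -100, 100, -100, 100, -100, 100, -100]  # alternating

persistent_imbalance = order_flow_imbalance(persistent_flow)
persistent_autocorr = lag1_autocorrelation(trade_signs(persistent_flow))
two_sided_autocorr = lag1_autocorrelation(trade_signs(two_sided_flow))
```

On this toy data the persistent series produces a positive imbalance and a positive sign autocorrelation, while the alternating series produces a negative autocorrelation. Real order flow would need trade classification (for example, against the prevailing quote) before signs are available at all.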

What Is the Right Machine Learning Model for This Task?

The choice of model architecture depends on the specific nature of the problem and the data available. A multi-model approach is often the most robust solution.

- Supervised Learning Models: Algorithms like Gradient Boosting Machines (XGBoost, LightGBM) and Support Vector Machines (SVM) are well-established choices. These models are trained on a labeled dataset where historical events have been manually or heuristically classified as “alpha,” “leakage,” or “noise.” The model learns a function to map a new set of features to one of these classes. This requires a significant investment in creating a high-quality labeled dataset, which is a continuous and evolving process.

- Unsupervised Learning Models: Autoencoders, a type of neural network, are particularly powerful here. The model is trained to reconstruct its input. When a new market event occurs, it is fed through the autoencoder. A high reconstruction error suggests the event does not resemble the patterns seen in training, so it is flagged as an anomaly. This is useful for detecting new forms of leakage or previously unknown market dynamics without prior labeling.

- Deep Learning and Neural Networks: For complex, high-dimensional data like the full order book, deep learning models such as Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs) can be applied. A CNN can treat the order book as an “image” to detect spatial patterns, while an RNN can model the temporal sequence of market events. These models can learn features automatically, reducing the burden of manual feature engineering but increasing the need for computational resources and interpretability tools.
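The supervised step, mapping a feature vector to class probabilities for “alpha,” “leakage,” or “noise,” can be sketched with a small softmax (multinomial logistic) regression in NumPy. This is deliberately a simpler stand-in for the gradient boosting and SVM models named above, chosen so the example stays self-contained. The three feature columns, the cluster centers, and the synthetic labels are all assumptions invented for illustration.

```python
import numpy as np

CLASSES = ["alpha", "leakage", "noise"]


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # stabilise the exponentials
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fit_softmax(X, y, n_classes, lr=1.0, epochs=2000):
    """Batch gradient descent on the multinomial cross-entropy loss."""
    n, d = X.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot labels
    for _ in range(epochs):
        P = softmax(X @ W + b)
        grad = (P - Y) / n
        W -= lr * (X.T @ grad)
        b -= lr * grad.sum(axis=0)
    return W, b


def predict_proba(X, W, b):
    return softmax(X @ W + b)


# Synthetic, hypothetical training set. Columns:
# [order-flow autocorrelation, spread widening, news sentiment]
rng = np.random.default_rng(0)
centers = np.array([
    [0.1, 0.1, 0.9],  # "alpha": external catalyst, little mechanical footprint
    [0.8, 0.8, 0.0],  # "leakage": persistent flow, wider spread, no news
    [0.0, 0.0, 0.0],  # "noise"
])
y_train = np.repeat(np.arange(3), 100)
X_train = centers[y_train] + rng.normal(scale=0.1, size=(300, 3))

W, b = fit_softmax(X_train, y_train, n_classes=3)

# Score one new, made-up event with persistent flow and no news catalyst.
probs = predict_proba(np.array([[0.85, 0.75, 0.05]]), W, b)[0]
predicted = CLASSES[int(np.argmax(probs))]
```

The synthetic clusters are well separated by construction, so the classifier looks far cleaner here than it would on real, heuristically labeled events, where label quality is the dominant problem.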

A robust strategy relies on a multi-layered system using supervised models for known patterns and unsupervised models to detect novel anomalies.
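The anomaly-detection layer rests on reconstruction error. As a hedged sketch, the code below uses a linear autoencoder (equivalent to a PCA projection, fitted with an SVD) instead of a neural network; the thresholding idea is the same, but a real system would likely need a non-linear model. The feature count, the two-factor data-generating process, and the 99th-percentile alert level are assumptions made for the example.

```python
import numpy as np


def fit_linear_autoencoder(X, n_components):
    """Fit a PCA projection: the optimal linear autoencoder under squared error."""
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions of the centred training data.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]


def reconstruction_error(X, mean, components):
    """Squared error between each row and its encode -> decode reconstruction."""
    centred = X - mean
    codes = centred @ components.T  # encode to the low-dimensional space
    recon = codes @ components      # decode back to feature space
    return ((centred - recon) ** 2).sum(axis=1)


rng = np.random.default_rng(1)

# Hypothetical "normal" regime: 4 features driven by 2 latent factors plus noise.
latent = rng.normal(size=(500, 2))
mixing = np.array([[1.0, 0.5, 0.0, -0.5],
                   [0.0, 1.0, 1.0, 0.5]])
X_normal = latent @ mixing + rng.normal(scale=0.05, size=(500, 4))

mean, comps = fit_linear_autoencoder(X_normal, n_components=2)
train_err = reconstruction_error(X_normal, mean, comps)
threshold = np.quantile(train_err, 0.99)  # hypothetical alert level

# A made-up event that breaks the learned factor structure.
odd_event = np.array([[0.0, 0.0, 3.0, -3.0]])
odd_err = reconstruction_error(odd_event, mean, comps)[0]
is_anomaly = bool(odd_err > threshold)
```

A flagged event is only "unlike the training data"; deciding whether it is a new form of leakage or something else still requires the labeled, supervised layer or a human review.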

The ultimate strategic goal is to create a feedback loop. The output of these models (the classification of market events) is fed back into the system. When the system identifies what it believes to be leakage, it can adjust its own execution strategy to either avoid the toxic order flow or attempt to profit from the short-term predictability it creates.

When it identifies a genuine alpha signal, it can act with higher confidence. This adaptive capability is what separates a static trading model from a dynamic, learning system.

Execution

The operational execution of a system designed to differentiate alpha signals from information leakage is a complex engineering challenge. It requires a robust technological architecture, a disciplined data processing pipeline, and a rigorous validation framework to ensure the models perform as intended in a live trading environment. The focus shifts from strategic concepts to the precise mechanics of implementation.

The Operational Playbook

Deploying such a system involves a structured, multi-stage process that moves from data ingestion to actionable trading decisions. This playbook ensures that each component is optimized for performance, accuracy, and reliability.

- Data Ingestion and Synchronization: The first step is to build a high-throughput data pipeline capable of capturing and time-stamping market data from multiple feeds to microsecond precision. This often involves using specialized time-series databases like kdb+ or Arctic. All data sources, including market data and alternative data, must be synchronized to a common clock to ensure causal relationships are correctly inferred.

- Feature Computation Engine: A dedicated computational layer is required to process the raw data and generate the feature sets defined in the strategy phase. This engine must operate in near real-time, calculating hundreds or thousands of features for every instrument. This is often implemented in a high-performance language like C++ or by leveraging optimized libraries like NumPy and pandas in Python.

- Model Inference and Scoring: Once features are computed, they are fed into the trained machine learning models. The models output a score or a probability for each class (e.g., 85% probability of leakage, 10% alpha, 5% noise). This inference step must be extremely low-latency to be useful for short-term trading decisions.

- Decision Logic and Execution Gateway: The model’s output is then consumed by a decision-making module. This module translates the scores into concrete actions. For example, a high leakage score might trigger a temporary reduction in order size or a switch to a more passive execution algorithm. A high alpha score would be routed to the primary strategy execution logic. This module interfaces directly with the firm’s Order Management System (OMS).

- Monitoring and Feedback Loop: The system’s performance is continuously monitored. All predictions are stored alongside the actual market outcomes. This data is used to evaluate the model’s accuracy and forms the basis for periodic retraining. Tools like Alphalens can be used to analyze the predictive power of the generated signals over time.
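The synchronization requirement in the first step of the playbook is commonly met with an as-of join: each market event is paired with the most recent value from a slower feed stamped at or before it, so no later observation leaks into an earlier event. The sketch below shows the idea with the standard library only; the function name, the integer-microsecond timestamps, and the sample feeds are assumptions for illustration.

```python
from bisect import bisect_right


def asof_join(event_times, feed_times, feed_values):
    """For each event time, return the latest feed value stamped at or before it.

    Both time lists must be sorted ascending and share one clock (here,
    integer microseconds). None is returned where no feed value exists yet,
    so a later observation is never attached to an earlier event.
    """
    out = []
    for t in event_times:
        i = bisect_right(feed_times, t)  # count of feed stamps <= t
        out.append(feed_values[i - 1] if i > 0 else None)
    return out


# Hypothetical timestamps in microseconds since session start.
trade_times = [1_000, 2_500, 2_501, 9_000]
sentiment_times = [2_500, 8_000]
sentiment_scores = [0.10, 0.92]

aligned = asof_join(trade_times, sentiment_times, sentiment_scores)
# aligned == [None, 0.10, 0.10, 0.92]
```

The first trade receives `None` because no sentiment reading had arrived yet; filling that gap with the 2,500 µs value would be a look-ahead error of exactly the kind a common clock is meant to prevent.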

Quantitative Modeling and Data Analysis

The core of the execution lies in the quantitative rigor of the models. The following table provides a simplified, hypothetical case study of how the system would process and classify two distinct market events in a fictional tech stock, “Innovate Corp” (ticker: INVC).

| Feature | Event A Data | Event B Data | Interpretation |
|---|---|---|---|
| Order Flow Imbalance (1-min) | +0.85 (Strongly buy-sided) | +0.75 (Strongly buy-sided) | Both events show significant buying pressure. On its own, this feature is ambiguous. |
| Trade-to-Order Volume Ratio | 0.15 (Low) | 0.60 (High) | In Event A most submitted order volume rests unexecuted, while in Event B aggressive orders are taking liquidity. |
| Bid-Ask Spread (% of Mid-Price) | 0.02% -> 0.08% | 0.02% -> 0.03% | The spread widened dramatically in Event A, suggesting market makers are repricing due to toxic flow. |
| News Sentiment Score (Tech Sector) | -0.05 (Neutral) | +0.92 (Highly Positive) | Event B coincides with a major positive news event for the sector. Event A has no external catalyst. |
| Cross-Asset Correlation (INVC vs. ETF) | 0.10 (Decoupled) | 0.85 (Strongly Correlated) | The buying in Event B is broad across the sector; the buying in Event A is isolated to INVC. |
| Model Output (Probability) | Leakage: 92%, Alpha: 3%, Noise: 5% | Leakage: 8%, Alpha: 89%, Noise: 3% | The model classifies Event A as leakage and Event B as a genuine alpha signal. |
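To connect the table to the decision module described in the playbook, the sketch below maps the two events' class probabilities to actions. The probabilities come from the table; the 0.80 thresholds, the action names, and the `Scores` type are hypothetical choices for illustration, and in practice the thresholds would be calibrated on held-out data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scores:
    leakage: float
    alpha: float
    noise: float


# Hypothetical cut-offs, not values taken from the case study.
LEAKAGE_THRESHOLD = 0.80
ALPHA_THRESHOLD = 0.80


def decide(scores: Scores) -> str:
    """Translate class probabilities into one of three illustrative actions."""
    total = scores.leakage + scores.alpha + scores.noise
    if abs(total - 1.0) > 1e-6:
        raise ValueError("class probabilities must sum to 1")
    if scores.leakage >= LEAKAGE_THRESHOLD:
        # Likely another participant's execution footprint: trade defensively.
        return "reduce_size_and_go_passive"
    if scores.alpha >= ALPHA_THRESHOLD:
        return "route_to_primary_strategy"
    return "no_action"


event_a = Scores(leakage=0.92, alpha=0.03, noise=0.05)
event_b = Scores(leakage=0.08, alpha=0.89, noise=0.03)

action_a = decide(event_a)  # "reduce_size_and_go_passive"
action_b = decide(event_b)  # "route_to_primary_strategy"
```

Checking leakage before alpha is a conservative ordering: when both scores are high, the sketch prefers the defensive action. Whether that priority is right depends on the firm's risk appetite.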

How Does the System Architecture Support This Process?

The underlying technology must be designed for high performance and resilience. A typical architecture would consist of co-located servers at the exchange data center to minimize network latency. The software stack would likely involve a combination of C++ for the performance-critical data processing and model inference components, and Python for research, model training, and offline analysis.

The system must be built with redundancy and fail-safes to manage the risk of model failure or unexpected market events. This is a system where engineering excellence directly translates into financial performance and risk management.

Reflection

The ability to construct a system that reliably distinguishes between engineered alpha and unintentional leakage is a significant operational achievement. It represents a maturation of a firm’s quantitative capabilities, moving beyond simple signal generation to a deeper understanding of the market’s information ecology. The true value of this system is not just in the immediate profitability of its classifications, but in the strategic intelligence it provides.

By observing and categorizing the behavior of other market participants, the system builds a dynamic map of the competitive landscape. This knowledge, when integrated into the firm’s broader strategic framework, becomes a source of durable competitive advantage, transforming the operational challenge of signal differentiation into a pillar of institutional intelligence.
