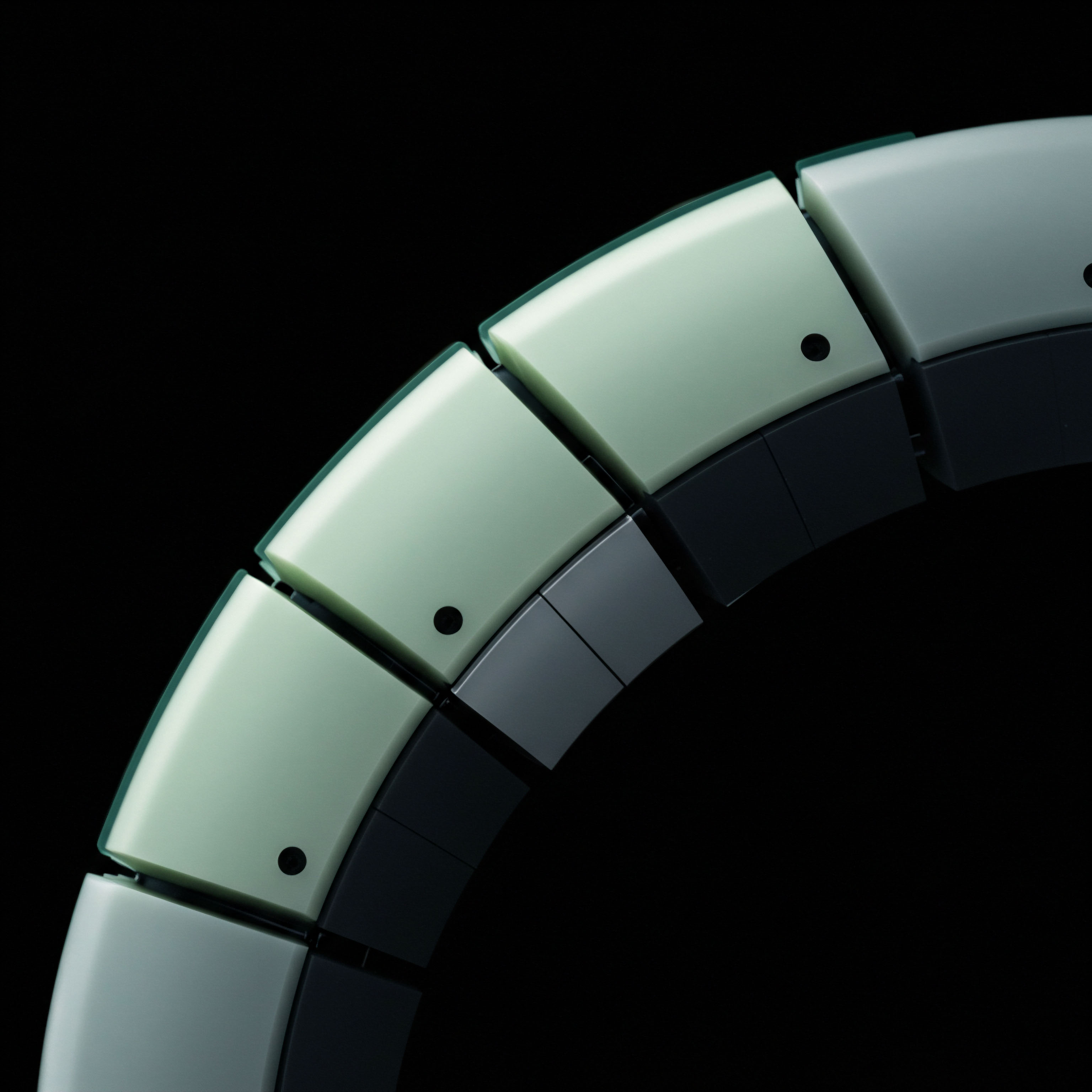

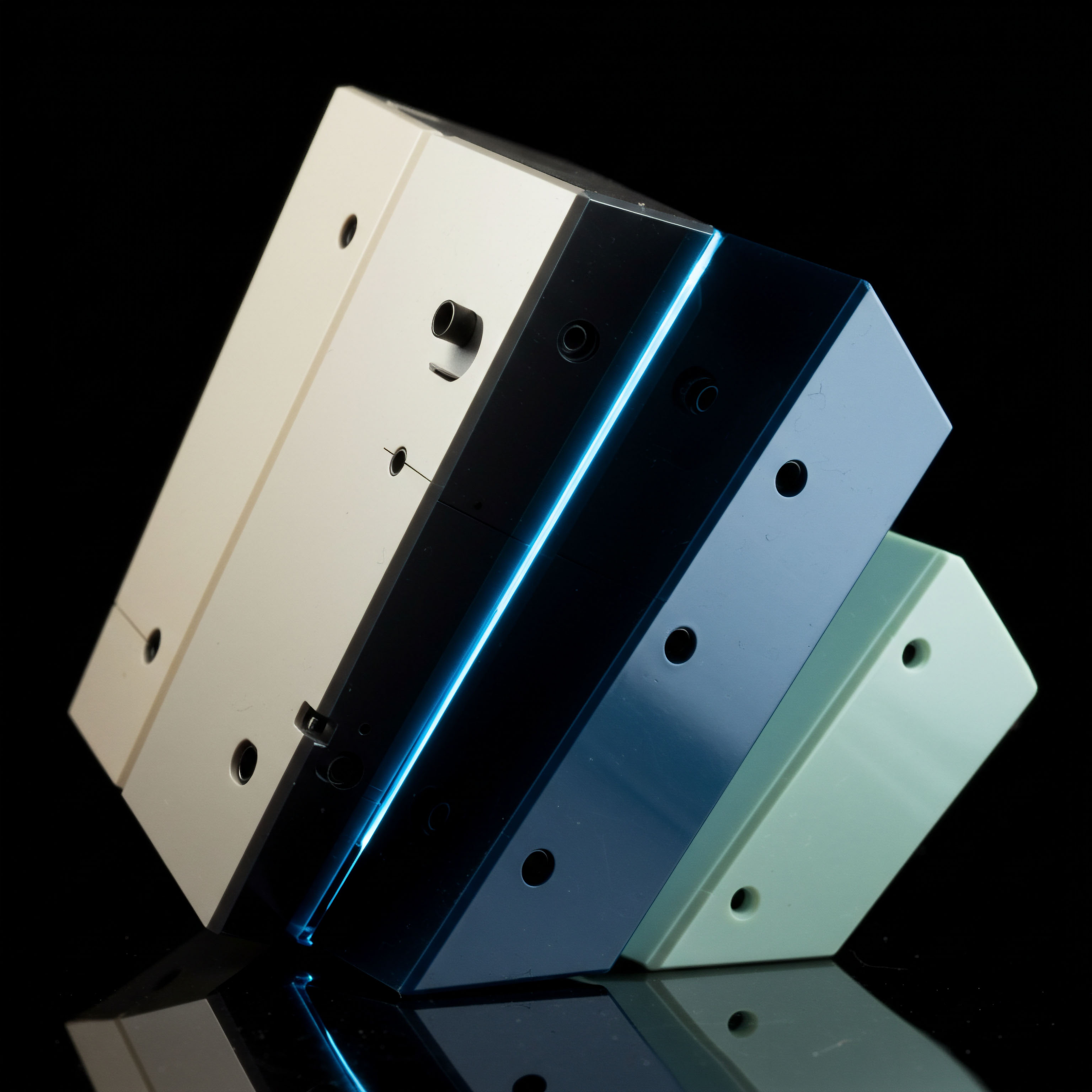

Concept

The Inevitable Collision of System and Sovereignty

The pursuit of a unified reporting architecture is a logical imperative for any global financial institution. It represents a drive towards systemic coherence, operational efficiency, and a singular, verifiable source of truth for risk and performance assessment. This endeavor, however, does not proceed in a vacuum. It advances directly into the complex and fragmented landscape of global regulation, where the sovereignty of nations and trading blocs imposes distinct, divergent, and often overlapping obligations.

The core challenge is an architectural one: designing a single, elegant system capable of satisfying the multifarious demands of many masters. The friction arises not from a failure of technology, but from the fundamental nature of a globalized financial system that remains governed by localized mandates.

Each major regulatory framework functions as a distinct operating system with its own protocols and data requirements. In Europe, the Markets in Financial Instruments Directive II (MiFID II), together with its companion regulation MiFIR, mandates an exceptionally granular level of transaction reporting to promote market transparency. Meanwhile, the risk data aggregation principles associated with Basel III (formally BCBS 239, the Basel Committee's Principles for effective risk data aggregation and risk reporting) demand that an institution be able to produce a comprehensive and accurate view of its exposures on a global scale.

Adding another layer of complexity, data privacy regimes such as the General Data Protection Regulation (GDPR) impose strict rules on the processing of personal data and on its transfer outside the European Economic Area, directly affecting where and how reporting data can be processed and stored. These are not complementary mandates; they are distinct and powerful forces that pull a firm’s data architecture in different directions.

A unified reporting system must reconcile the global ambition for data consistency with the local insistence on regulatory specificity.

This dynamic creates a state of persistent tension. A system optimized solely for MiFID II reporting might centralize European transaction data, while a structure designed for global risk aggregation under BCBS 239 would pull that same data into a global repository. GDPR may then restrict that very transfer wherever the data contains personal information, such as the identifiers of the individual traders and decision-makers that MiFID II transaction reports carry. The result within many institutions is a set of legacy systems that mirror this external fragmentation.

Different reporting solutions are built for different regulations, leading to data silos, duplicated effort, and a heightened risk of inconsistency. Answering a simple question about a firm’s total exposure to a specific counterparty can become a monumental task of data reconciliation across systems that were never designed to communicate. The impact transcends mere inefficiency; it strikes at the heart of effective risk management and strategic decision-making.

Defining the Architectural Problem Space

The challenge for the systems architect is to design a framework that is both centralized in principle and federated in practice. The architecture must possess a strong, coherent core (a standardized data model and governance framework) while also featuring adaptable, intelligent interfaces at its periphery capable of meeting specific jurisdictional requirements. This involves moving beyond the traditional approach of building separate reporting engines for each regulation.

Instead, the focus shifts to creating a single, resilient data pipeline that ingests, normalizes, and enriches data once, before distributing it to various reporting modules tailored for specific regulatory outputs. This conceptual shift is the foundation of a truly unified system.

This approach treats regulatory requirements as a series of distinct “output formats” rather than as fundamental drivers of the underlying data structure. The core system maintains a “golden source” of transactional and risk data, agnostic to any single regulation. When a report is required for a specific regulator, a dedicated module pulls the necessary data from the core, applies the jurisdiction-specific rules, formats it according to the required template, and submits it. This design prevents the core architecture from being compromised by the idiosyncrasies of any one regulatory regime, ensuring its stability and scalability over time.

It also allows much more rapid adaptation: when a new regulation is introduced or an existing one is amended, usually only an output module needs to be created or modified, leaving the core data pipeline untouched unless the change requires data elements the canonical model does not yet capture. This is the essence of building for resilience in a constantly evolving regulatory environment.
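The decoupling described above can be sketched in Python as a canonical record plus a registry of output modules. This is a minimal illustration under stated assumptions: `CanonicalTrade`, `report_module`, `distribute`, and the regime names `REGIME_A` and `REGIME_B` are hypothetical and do not correspond to any real reporting format. The point is structural: supporting another regime means registering one more function, while the record type and the distribution step stay unchanged.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, List


@dataclass(frozen=True)
class CanonicalTrade:
    """Hypothetical golden-source record, agnostic to any single regulation."""
    trade_id: str
    executed_at: datetime
    counterparty_a_lei: str
    counterparty_b_lei: str
    notional: int


ReportModule = Callable[[CanonicalTrade], dict]
_MODULES: Dict[str, ReportModule] = {}


def report_module(regime: str) -> Callable[[ReportModule], ReportModule]:
    """Decorator that registers an output module without touching the core."""
    def register(fn: ReportModule) -> ReportModule:
        _MODULES[regime] = fn
        return fn
    return register


def distribute(trade: CanonicalTrade, regimes: List[str]) -> Dict[str, dict]:
    """Core step: hand the same normalized record to each requested module."""
    return {regime: _MODULES[regime](trade) for regime in regimes}


@report_module("REGIME_A")
def regime_a(trade: CanonicalTrade) -> dict:
    return {"id": trade.trade_id, "notional": trade.notional}


# A later regulation (hypothetical): only this function is new.
@report_module("REGIME_B")
def regime_b(trade: CanonicalTrade) -> dict:
    return {"ref": trade.trade_id, "executed": trade.executed_at.isoformat()}


trade = CanonicalTrade(
    trade_id="SWP-2025-98765",
    executed_at=datetime(2025, 8, 15, 10, 30, tzinfo=timezone.utc),
    counterparty_a_lei="5493001B3S4B9X2D1F07",
    counterparty_b_lei="HWUPKR0MPOU8FGXBT395",
    notional=10_000_000,
)
reports = distribute(trade, ["REGIME_A", "REGIME_B"])
```

A registry keyed by regime name is only one way to achieve this separation; plugin discovery or separately deployed services would serve the same purpose.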

Strategy

From Siloed Compliance to a Coherent Data Fabric

The strategic response to regulatory fragmentation is the methodical construction of a unified data fabric. This represents a move away from a reactive, siloed posture, in which individual teams build standalone solutions for each new regulation, towards a proactive, enterprise-wide strategy. A unified data fabric is a conceptual and architectural framework that provides a consistent, integrated view of all relevant data across the organization, regardless of where it is physically stored.

It treats data as a shared asset, governed by a common set of principles and accessible through a standardized set of services. This approach directly confronts the primary challenge of fragmented systems by creating a layer of coherence that sits above the underlying technical complexity.

Implementing this strategy begins with the establishment of a robust data governance program. This program defines the policies, standards, and procedures for managing data throughout its lifecycle. It establishes clear ownership and accountability for critical data elements and ensures that data quality is measured and maintained.
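As one illustration of making ownership and quality measurable, the sketch below records an accountable owner for each critical data element, computes a simple completeness score, and routes breaches to the owner. The element names, owner roles, and 99% threshold are hypothetical placeholders; real governance programmes typically track further dimensions such as accuracy, timeliness, and validity.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CriticalDataElement:
    name: str
    owner: str  # accountable owner (hypothetical role names)


CATALOG = [
    CriticalDataElement("trade_id", "Trading Operations"),
    CriticalDataElement("counterparty_lei", "Client Reference Data"),
    CriticalDataElement("notional", "Trading Operations"),
]


def completeness(records: List[dict], catalog=CATALOG) -> Dict[str, float]:
    """Fraction of records in which each critical element is present and non-empty."""
    if not records:
        # No data is treated as no evidence of completeness.
        return {cde.name: 0.0 for cde in catalog}
    return {
        cde.name: sum(1 for r in records if r.get(cde.name) not in (None, "")) / len(records)
        for cde in catalog
    }


def breaches(scores: Dict[str, float], threshold: float = 0.99,
             catalog=CATALOG) -> List[Tuple[str, str, float]]:
    """List (element, owner, score) for every element scoring below the threshold."""
    owners = {cde.name: cde.owner for cde in catalog}
    return [(name, owners[name], score) for name, score in scores.items() if score < threshold]
```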

A central component of this governance framework is the development of a canonical data model. This model serves as a common language for the entire organization, providing a single, unambiguous definition for key business concepts like “trade,” “counterparty,” and “exposure.” All source systems are then mapped to this canonical model, creating a consistent foundation for all reporting and analytics.
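Mapping source systems to the canonical model can be illustrated with two hypothetical booking systems that name fields differently and store notional in different units. The system names (`rates_booking`, `legacy_otc`), their field names, and the "thousands" convention are invented for illustration. The design point is that each source's quirks are resolved once, at the boundary, so no downstream report needs to know about them.

```python
from decimal import Decimal
from typing import Dict

# Hypothetical mappings: source system -> {canonical field: source field}.
SOURCE_MAPPINGS: Dict[str, Dict[str, str]] = {
    "rates_booking": {"trade_id": "DealRef", "counterparty_lei": "CptyLEI", "notional": "Nominal"},
    "legacy_otc": {"trade_id": "trade_no", "counterparty_lei": "lei", "notional": "notional_k"},
}

# Assumed unit convention: legacy_otc stores notional in thousands.
NOTIONAL_MULTIPLIER: Dict[str, int] = {"rates_booking": 1, "legacy_otc": 1000}


def to_canonical(source: str, record: dict) -> dict:
    """Rename fields, normalize units and identifiers, and tag the origin system."""
    mapping = SOURCE_MAPPINGS[source]
    canonical = {field: record[src] for field, src in mapping.items()}
    canonical["notional"] = Decimal(str(canonical["notional"])) * NOTIONAL_MULTIPLIER[source]
    canonical["counterparty_lei"] = canonical["counterparty_lei"].strip().upper()
    canonical["source_system"] = source  # retained for lineage
    return canonical
```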

A unified data fabric transforms regulatory reporting from a series of disjointed obligations into a streamlined, repeatable industrial process.

The architectural design of the data fabric must prioritize flexibility. A monolithic, centralized data warehouse can become a bottleneck and struggle to adapt to the varying data residency and privacy requirements imposed by different jurisdictions. A more effective approach is often a distributed or federated architecture, such as a data mesh. In a data mesh, data ownership and management are decentralized to the business domains that know the data best.

This decentralization is, however, governed by a central set of standards and interoperability protocols that ensure all data products across the organization can be easily discovered, accessed, and combined. This allows the firm to maintain a unified view of its data while retaining local control and complying with regimes such as GDPR that restrict cross-border transfers of personal data.
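A minimal sketch of the federated pattern, assuming each regional domain publishes only entity-level aggregates (exposure per counterparty LEI) while raw records, which may contain personal data, stay inside the region. `RegionalDataProduct` and `global_exposure` are hypothetical names, and whether a particular aggregate falls outside GDPR's scope is a legal judgment this code does not make. The same pattern addresses the earlier question of total exposure to a single counterparty without copying raw trades across borders.

```python
from decimal import Decimal
from typing import Dict, Iterable, List


class RegionalDataProduct:
    """Hypothetical domain-owned data product; raw records never leave the region."""

    def __init__(self, region: str, trades: List[dict]):
        self.region = region
        self._trades = trades  # may include personal fields such as trader names

    def exposure_by_counterparty(self) -> Dict[str, Decimal]:
        """Published interface: per-LEI totals only, no personal fields."""
        totals: Dict[str, Decimal] = {}
        for t in self._trades:
            lei = t["counterparty_lei"]
            totals[lei] = totals.get(lei, Decimal(0)) + t["exposure"]
        return totals


def global_exposure(products: Iterable[RegionalDataProduct]) -> Dict[str, Decimal]:
    """Central view built by combining each domain's published aggregates."""
    combined: Dict[str, Decimal] = {}
    for product in products:
        for lei, amount in product.exposure_by_counterparty().items():
            combined[lei] = combined.get(lei, Decimal(0)) + amount
    return combined
```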

Comparing Architectural Approaches

The choice of architecture has profound implications for a firm’s ability to navigate the global regulatory environment. The traditional, siloed approach creates significant operational friction, while a unified fabric provides a foundation for agility and resilience.

| Attribute | Siloed Reporting Architecture | Unified Data Fabric Architecture |
|---|---|---|
| Data Consistency | Low. Data is duplicated and transformed independently for each report, leading to discrepancies. | High. Data is sourced and normalized once against a canonical model, ensuring a single version of the truth. |
| Adaptability to Change | Slow and costly. New regulations require building new data pipelines and reporting engines from scratch. | Rapid and efficient. New requirements are met by creating new reporting modules that consume data from the existing fabric. |
| Operational Efficiency | Low. Significant manual effort is required for data reconciliation and report validation. | High. Automation is maximized through standardized processes for data ingestion, quality control, and report generation. |
| Risk Management | Fragmented. It is difficult to get a timely, enterprise-wide view of risk exposures. | Holistic. The unified data view enables comprehensive and near-real-time risk aggregation and analysis. |
| Cost of Ownership | High. Maintenance of multiple redundant systems and processes leads to escalating costs. | Lower over time. Initial investment is offset by reduced maintenance, lower compliance costs, and economies of scale. |

The Role of the Regulatory Rules Engine

A critical component of the unified strategy is the implementation of a centralized regulatory rules engine. This is a specialized software component responsible for encapsulating the logic for each specific regulation. The rules engine consumes data from the unified fabric and applies the complex validation, transformation, and formatting rules required for a given report. This decouples the regulatory logic from the core data processing pipeline, which is a key architectural principle for achieving agility.

- Centralized Logic: Instead of having regulatory rules scattered across various applications and spreadsheets, they are all managed in a single, auditable repository. This dramatically simplifies the process of updating rules in response to regulatory changes.

- Automation: The engine can automatically determine which transactions are reportable under which jurisdictions and apply the correct set of rules. This reduces the reliance on manual intervention and the associated risk of human error.

- Traceability: Every data point in a final report can be traced back through the rules engine to its source in the data fabric. This provides a clear and defensible audit trail for regulators, demonstrating data lineage and control.
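The three properties above can be illustrated with a deliberately small rules engine: rules live in one list, reportability is decided per jurisdiction, and every output field records the rule and canonical field that produced it. The rule IDs, the jurisdiction test (either counterparty located in the EU or the US), and the field names are hypothetical simplifications; real reportability determinations also depend on counterparty classification, product scope, and many other conditions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ReportabilityRule:
    rule_id: str
    regime: str
    applies: Callable[[dict], bool]


def _involves(jurisdiction: str) -> Callable[[dict], bool]:
    """Simplified test: is either counterparty in the given jurisdiction?"""
    return lambda t: jurisdiction in (t["cpty_a_jurisdiction"], t["cpty_b_jurisdiction"])


# Hypothetical rules kept in one auditable repository.
RULES: List[ReportabilityRule] = [
    ReportabilityRule("EU-001", "EMIR", _involves("EU")),
    ReportabilityRule("US-001", "DODD_FRANK", _involves("US")),
]

FIELD_MAP: Dict[str, Dict[str, str]] = {
    "EMIR": {"Trade ID": "trade_id", "Notional": "notional"},
    "DODD_FRANK": {"Transaction ID": "trade_id", "Notional Amount": "notional"},
}


@dataclass(frozen=True)
class ReportField:
    name: str
    value: object
    source_field: str  # lineage: canonical field the value came from
    rule_id: str       # lineage: rule that made the trade reportable


def evaluate(trade: dict) -> Dict[str, List[ReportField]]:
    """Apply every rule and build lineage-tagged fields for each regime that applies."""
    reports: Dict[str, List[ReportField]] = {}
    for rule in RULES:
        if rule.applies(trade):
            reports[rule.regime] = [
                ReportField(name, trade[src], src, rule.rule_id)
                for name, src in FIELD_MAP[rule.regime].items()
            ]
    return reports
```

Because each `ReportField` carries its `source_field` and `rule_id`, an auditor can walk from any reported value back to the canonical data and the rule that selected it.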

By combining a unified data fabric with a powerful rules engine, an institution can create a highly automated and adaptable reporting ecosystem. This system is designed to absorb the impact of regulatory change without requiring a fundamental re-engineering of its core components. It transforms regulatory compliance from a source of systemic risk and operational drag into a well-defined, well-controlled industrial process.

Execution

A Phased Implementation Protocol

The execution of a unified reporting architecture is a complex, multi-stage undertaking that requires disciplined project management and a clear architectural vision. It is not a single technology project but a fundamental transformation of how the organization manages and utilizes its data. A phased approach is essential to manage risk, demonstrate value incrementally, and build momentum for the program over time. The process moves from establishing a solid foundation of governance and data management to the incremental rollout of reporting capabilities across different regulatory regimes.

- Establish the Governance Framework: The initial phase is entirely non-technical. It involves forming a cross-functional steering committee with representatives from business, technology, compliance, and risk. This group is responsible for defining the overall data strategy, establishing the canonical data model, and setting the policies for data quality, ownership, and access control. Without this foundational agreement, any technology implementation is destined to fail.

- Develop the Core Data Fabric: This phase focuses on building the central data pipeline. It involves selecting the appropriate technology stack for data ingestion, storage, and processing. The team will build connectors to key source systems and implement the initial data quality checks and normalization routines to map source data to the canonical model. The goal of this phase is to create a trusted, “golden source” repository for a limited set of critical data elements.

- Onboard the First Regulatory Module: With the core fabric in place, the team can now focus on delivering the first regulatory report. A high-volume, complex report such as MiFID II transaction reporting is often a good candidate as it demonstrates the power of the new architecture. The team will build the specific rules, transformations, and formatting logic for this regulation within the rules engine and connect it to the data fabric. Successful delivery of this first module is a critical milestone that proves the viability of the concept.

- Iterate and Expand: Following the successful implementation of the first module, the program moves into an iterative cycle of expansion. New source systems are progressively connected to the fabric, enriching the central data repository. Additional regulatory reporting modules are built and deployed for other jurisdictions and regimes (e.g. EMIR, SFTR, Dodd-Frank). With each iteration, the value of the unified architecture grows, and the cost of delivering new reports decreases as more reusable data and components become available.

Jurisdictional Data Mapping: A Practical Example

The core of the execution challenge lies in mapping data from a single transaction into the divergent formats required by different regulators. A unified system must be able to handle these variations systematically. Consider a simple interest rate swap traded between a US entity and an EU entity. This single trade is reportable under both US (Dodd-Frank) and EU (EMIR) regulations, which have different field requirements and data standards.

The system’s intelligence is demonstrated by its ability to translate a single internal data representation into multiple, compliant regulatory dialects.

The table below illustrates how the unified architecture would take a standardized set of data elements from its canonical model and map them to the specific fields required by each regulator. The rules engine would perform these transformations automatically based on the jurisdictions of the counterparties involved.

| Canonical Data Element | Sample Value | EMIR (EU) Report Field | Dodd-Frank (US) Report Field |
|---|---|---|---|
| Internal Trade ID | SWP-2025-98765 | Trade ID | Unique Transaction Identifier (UTI; formerly USI) |
| Execution Timestamp | 2025-08-15T10:30:00.123Z | Execution Timestamp (UTC) | Execution Timestamp (UTC) |
| Counterparty A ID | 5493001B3S4B9X2D1F07 | ID of the Counterparty (LEI) | Counterparty ID 1 (LEI) |
| Counterparty B ID | HWUPKR0MPOU8FGXBT395 | ID of the Other Counterparty (LEI) | Counterparty ID 2 (LEI) |
| Product Classification | InterestRate:Swap:FixedFloat | Product ID Type (ISIN/AII) | Taxonomy (CFTC Product Code) |
| Notional Amount | 10000000 | Notional | Notional Amount |
| Venue of Execution | OTF-LON | Venue of Execution (MIC) | (Not explicitly required in same format) |
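The mapping in the table can be sketched as data plus one rendering function. The labels below follow the table's descriptive field names rather than any official message schema; the Dodd-Frank identifier is labelled as a UTI, which replaced the USI under the CFTC's amended swap reporting rules; and feeding the internal trade ID straight into that identifier is a simplification, since unique transaction identifiers are normally generated under each regime's own rules. One concrete transformation is included: both regimes expect execution timestamps in UTC, so the hypothetical helper `utc_iso` normalizes any timezone-aware timestamp to the `2025-08-15T10:30:00.123Z` form shown in the sample value.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict

# Canonical record using the sample values from the table.
CANONICAL = {
    "internal_trade_id": "SWP-2025-98765",
    "execution_timestamp": datetime(2025, 8, 15, 10, 30, 0, 123000, tzinfo=timezone.utc),
    "counterparty_a_id": "5493001B3S4B9X2D1F07",
    "counterparty_b_id": "HWUPKR0MPOU8FGXBT395",
    "notional_amount": 10000000,
    "venue_of_execution": "OTF-LON",
}

# Regime label -> canonical field; descriptive labels, not official schema tags.
MAPPINGS: Dict[str, Dict[str, str]] = {
    "EMIR": {
        "Trade ID": "internal_trade_id",
        "Execution Timestamp (UTC)": "execution_timestamp",
        "ID of the Counterparty (LEI)": "counterparty_a_id",
        "ID of the Other Counterparty (LEI)": "counterparty_b_id",
        "Notional": "notional_amount",
        "Venue of Execution (MIC)": "venue_of_execution",
    },
    "DODD_FRANK": {
        "Unique Transaction Identifier (UTI)": "internal_trade_id",
        "Execution Timestamp (UTC)": "execution_timestamp",
        "Counterparty ID 1 (LEI)": "counterparty_a_id",
        "Counterparty ID 2 (LEI)": "counterparty_b_id",
        "Notional Amount": "notional_amount",
        # Venue omitted: not required in the same format.
    },
}


def utc_iso(ts: datetime) -> str:
    """Render a timezone-aware timestamp in UTC with millisecond precision."""
    return ts.astimezone(timezone.utc).isoformat(timespec="milliseconds").replace("+00:00", "Z")


def render(record: dict, regime: str) -> Dict[str, object]:
    """Translate one canonical record into a regime's field layout."""
    return {
        label: utc_iso(record[field]) if isinstance(record[field], datetime) else record[field]
        for label, field in MAPPINGS[regime].items()
    }


# The same instant captured with a UTC+1 offset renders identically.
local_ts = datetime(2025, 8, 15, 11, 30, 0, 123000, tzinfo=timezone(timedelta(hours=1)))
```

Keeping the mappings as data rather than code is what lets the rules engine apply them "automatically based on the jurisdictions of the counterparties", as described above.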

The Supporting Technology Stack

A modern, unified reporting architecture is supported by a carefully selected set of technologies. While the specific products may vary, the functional components are consistent across most successful implementations. The goal is to create a scalable, resilient, and auditable platform that can handle large volumes of data in near-real-time.

- Data Ingestion and Streaming: Technologies like Apache Kafka are essential for capturing trade and risk events as they happen and feeding them into the data fabric reliably, with ordering guaranteed within each partition (so events keyed by trade ID arrive in sequence for that trade).

- Data Lake / Lakehouse: Cloud-based storage solutions like Amazon S3 or Google Cloud Storage provide a scalable and cost-effective repository for raw and processed data. Platforms like Databricks or Snowflake overlay this with powerful processing and querying capabilities, forming a “data lakehouse.”

- Data Transformation and Quality: Tools such as dbt (Data Build Tool) or Apache Spark are used to implement the complex business logic required to clean, enrich, and transform raw data into the canonical model. Data quality frameworks are integrated into these pipelines to automatically validate data at each stage.

- Regulatory Rules Engine: This can be a commercial off-the-shelf (COTS) product specifically designed for regulatory reporting or a custom-built application using a business rules management system (BRMS) like Drools.

- Reporting and Analytics Layer: Business intelligence tools like Tableau or Power BI are used to create dashboards for monitoring the reporting process, tracking data quality metrics, and providing insights to compliance and risk teams.
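As an example of a check that a data quality framework might run during transformation, the sketch below validates Legal Entity Identifiers, which appear throughout the canonical model. Under ISO 17442 an LEI is 20 alphanumeric characters whose last two are check digits computed with ISO/IEC 7064 MOD 97-10, the same scheme used for IBANs. The function names are hypothetical, and a passing checksum only shows that the code is well formed, not that it is registered or active in the GLEIF database.

```python
import re

# 18 alphanumeric characters followed by two numeric check digits.
LEI_PATTERN = re.compile(r"^[A-Z0-9]{18}[0-9]{2}$")


def _to_digits(s: str) -> int:
    """MOD 97-10 conversion: digits stay as-is, letters map to A=10 ... Z=35."""
    return int("".join(str(int(ch, 36)) for ch in s))


def lei_check_digits(base18: str) -> str:
    """Compute the two check digits for an 18-character LEI prefix."""
    return f"{98 - _to_digits(base18 + '00') % 97:02d}"


def is_valid_lei(lei: str) -> bool:
    """Format check plus MOD 97-10 checksum (remainder must equal 1)."""
    return bool(LEI_PATTERN.match(lei)) and _to_digits(lei) % 97 == 1


# Usage: derive check digits for a prefix, then validate the full code.
base = "5493001B3S4B9X2D1F"
lei = base + lei_check_digits(base)
```

In a pipeline, records failing `is_valid_lei` would typically be quarantined and reported to the data owner rather than silently passed to the reporting modules.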


Reflection

Beyond Compliance: A Systemic Advantage

Constructing a unified reporting architecture is a formidable undertaking, demanding significant investment in technology, process, and people. The primary motivation is often defensive: to manage the escalating cost and complexity of global compliance. Yet, viewing this endeavor solely through the lens of regulatory obligation is to miss its profound strategic value.

The true outcome of this architectural transformation is the creation of a high-fidelity, enterprise-wide data asset. This asset becomes the central nervous system of the institution, providing a level of clarity and insight that is simply unattainable with fragmented, legacy systems.

The ability to aggregate risk exposures accurately in near-real-time, to understand client activity holistically across all products and regions, and to model the impact of market events with confidence provides a distinct competitive advantage. The architecture built to satisfy the regulator becomes the engine that drives smarter business decisions. The question for institutional leaders, therefore, shifts from “How do we solve our regulatory reporting problem?” to “What strategic opportunities are created once we have a single, trusted view of our entire business?” The answer to that question will define the winners in the next decade of global finance.
