Concept

The Uncalibrated Signal

An algorithmic trading system operates on a representation of the market, a numerical abstraction of complex human behavior. This abstraction, in its raw form, is a cacophony of disparate scales and magnitudes. A security’s price, measured in hundreds of dollars, exists in the same dataset as its volume, counted in the millions, and a momentum indicator, oscillating within a fixed range of 0 to 100. For a quantitative model, this is an incoherent environment.

A model given no context will often assign importance based on magnitude: many algorithms, particularly distance-based and gradient-trained ones, are sensitive to the raw scale of their inputs. Consequently, the million-share volume figure can drown out a subtle, predictive shift in the momentum indicator. The performance of a strategy is therefore contingent on the coherence of the data environment presented to the algorithm. Data normalization is the discipline of creating this coherence.

It is the rigorous process of translating a chaotic stream of market information into a calibrated, internally consistent language that a trading algorithm can interpret. This process establishes a stable frame of reference, ensuring that the analytical power of the model is directed at genuine market dynamics, not spurious numerical artifacts.
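To make the magnitude problem concrete, the sketch below uses Euclidean distance, the similarity measure behind k-nearest-neighbours and many clustering methods, as a stand-in for a magnitude-sensitive model. This is an assumption for illustration only; tree-based models, for instance, are largely insensitive to feature scale. The feature values for the two days are hypothetical.

```python
import math

# Hypothetical feature vectors (price, volume, RSI) for two trading days.
# The numbers are illustrative only, chosen to mirror the scales described above.
day_a = (150.25, 2_500_000.0, 55.2)
day_b = (151.00, 2_500_500.0, 30.0)

def euclidean_distance(u, v):
    """Euclidean distance: each coordinate contributes its raw squared difference."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))

def squared_distance_shares(u, v):
    """Fraction of the squared distance contributed by each feature."""
    squared = [(x - y) ** 2 for x, y in zip(u, v)]
    total = sum(squared)
    return [s / total for s in squared]

shares = squared_distance_shares(day_a, day_b)
print(f"distance: {euclidean_distance(day_a, day_b):.1f}")
for name, share in zip(("price", "volume", "RSI"), shares):
    print(f"{name:>6}: {share:.2%} of squared distance")
```

Here a 500-share wiggle in volume (0.02% of the level) supplies more than 99% of the squared distance, while a 25-point swing in RSI barely registers.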

A Foundation for Comparative Analysis

Algorithmic strategies, particularly those employing machine learning or statistical arbitrage, derive their edge from identifying and exploiting relationships between different financial instruments or data series. A strategy might seek to model the relationship between a lead equity index and a basket of its constituent stocks, or between a currency’s spot price and its volatility index. Such analysis is impossible without a common measurement basis. Comparing the raw price series of a $2,000 stock with that of a $50 stock is a flawed premise; their scales are fundamentally incompatible.

Normalization re-casts these disparate data series into a shared, dimensionless space. Within this space, a 1% move in the first stock becomes directly comparable to a 1% move in the second. This act of rescaling is what enables the algorithm to perform meaningful cross-sectional analysis, identify subtle correlations, and ultimately detect the faint signals of market inefficiency upon which sophisticated strategies are built. It transforms data from a collection of isolated numbers into an interconnected system of relative values.
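One simple way to put prices on a shared, dimensionless footing is to convert them to percentage returns before any further scaling. The sketch below uses hypothetical prices for a stock near $2,000 and one near $50. Returns are only one ingredient; the protocols in the Strategy section rescale the data further.

```python
# Hypothetical closing prices: one stock near $2,000 and one near $50.
expensive = [2000.0, 2020.0, 1999.8]
cheap = [50.0, 50.5, 49.995]

def price_changes(prices):
    """Raw dollar change between consecutive closes."""
    return [curr - prev for prev, curr in zip(prices, prices[1:])]

def simple_returns(prices):
    """Percentage change between consecutive closes: r_t = p_t / p_(t-1) - 1."""
    return [curr / prev - 1.0 for prev, curr in zip(prices, prices[1:])]

print(price_changes(expensive), price_changes(cheap))    # dollar moves differ by a factor of 40
print(simple_returns(expensive), simple_returns(cheap))  # both roughly +1%, then -1%
```

In dollar terms the two stocks look incomparable; in return space their moves are identical.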

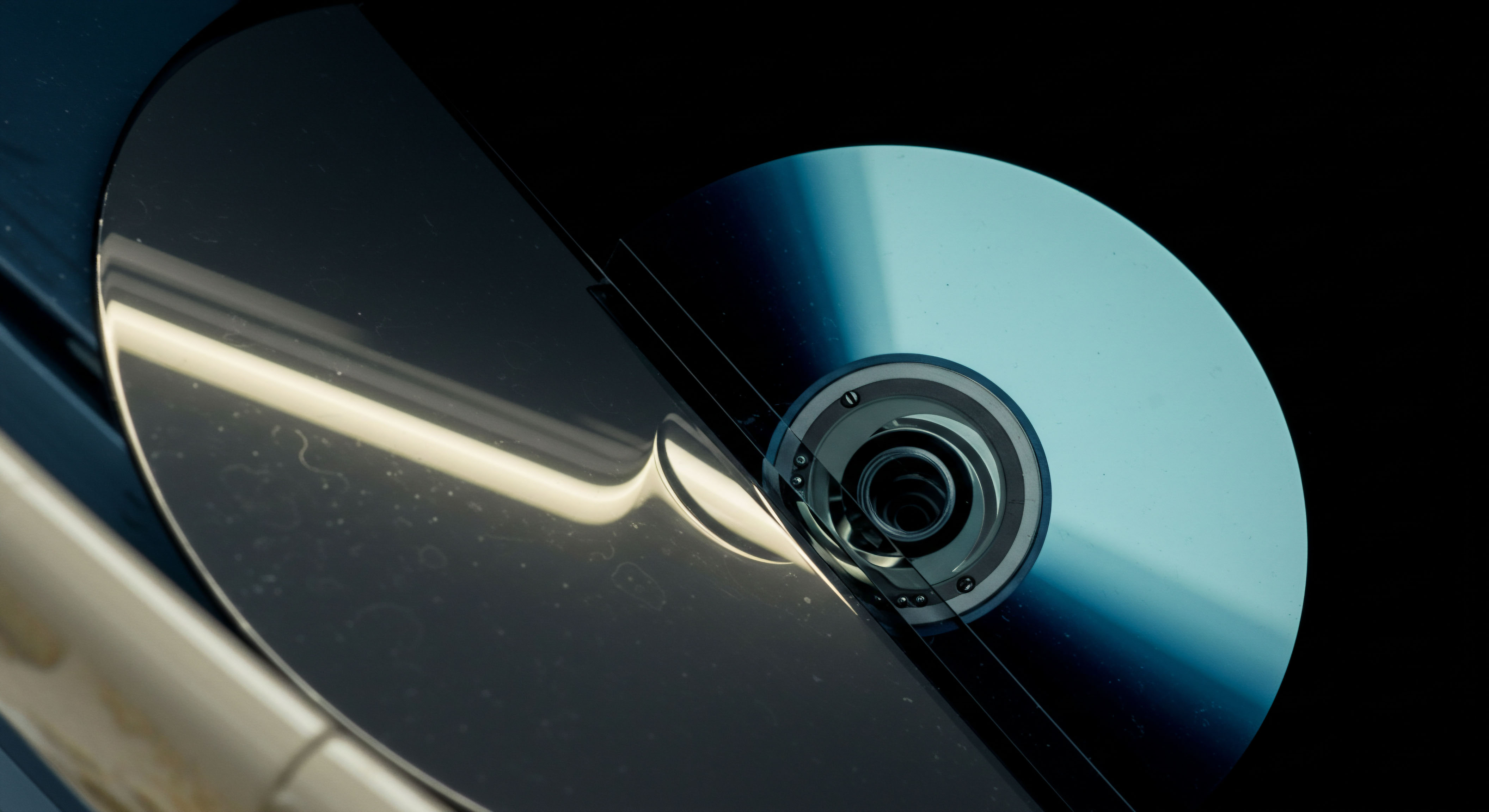

Data normalization transforms raw, multi-scaled market data into a unified operational landscape for an algorithm.

Mitigating the Influence of Distributional Anomalies

Financial market data is notoriously non-stationary, characterized by periods of calm interspersed with violent, short-lived volatility spikes. These outlier events, such as flash crashes or earnings surprises, can severely distort the statistical properties of a dataset. An algorithm trained on raw data containing such anomalies will develop a skewed perception of market behavior. Its parameters will be biased by extreme, infrequent events, leading to poor performance during more typical market regimes.

Data normalization techniques provide a mechanism for managing the impact of these outliers. Z-score standardization expresses each data point as its deviation from the mean in units of standard deviation, which makes unusually large observations easy to identify and treat. Other approaches, such as robust scaling, use medians and interquartile ranges to establish a baseline that is inherently less sensitive to extreme values. By systematically rescaling data, these techniques contain the distorting influence of outliers, ensuring that the trading model learns from the persistent, underlying structure of the market rather than being misled by its ephemeral shocks. This produces a more resilient and adaptive trading strategy, one that is calibrated to the rule, not the exception.

Strategy

Selecting the Appropriate Calibration Protocol

The choice of a data normalization method is a pivotal strategic decision, directly influencing how a trading algorithm perceives and reacts to market information. There is no universally superior technique; the optimal choice is contingent upon the statistical profile of the input data and the specific logic of the trading strategy. The three principal protocols for consideration are Min-Max Scaling, Z-Score Standardization, and Robust Scaling. Each imposes a different structural interpretation on the data, with distinct consequences for outlier sensitivity, data distribution, and the preservation of relative relationships.

A system architect must therefore diagnose the characteristics of the strategy’s data inputs, such as the presence of fixed boundaries or the prevalence of extreme outliers, and align the normalization protocol accordingly. This decision shapes the feature space in which the model operates, defining the very nature of the patterns it is capable of detecting.

Min-Max Scaling

This technique rescales data to a fixed, predefined range, most commonly [0, 1]. It is a linear transformation that preserves the relative ordering and proportional distances between data points. Its primary strategic application is for data series that are known to operate within a bounded range. Technical indicators like the Relative Strength Index (RSI) or the Stochastic Oscillator, which are mathematically constrained between 0 and 100, are ideal candidates.

By scaling these indicators to [0, 1], the algorithm can interpret their values as a consistent percentage of their total possible range, simplifying the logic for defining overbought or oversold conditions. The significant vulnerability of Min-Max scaling, however, is its acute sensitivity to outliers whenever the minimum and maximum are estimated from the data rather than fixed in advance. A single new maximum or minimum value introduced into the dataset will compress the rest of the data into a narrower portion of the range, altering the interpretation of all subsequent data points. This makes it less suitable for unbounded data series like price or volume, where extreme events can cause radical and undesirable rescaling of the entire dataset.
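A minimal Min-Max sketch in plain Python follows (production code would more likely use NumPy, pandas, or scikit-learn's `MinMaxScaler`). The function name `min_max_scale` and the 20-million-share volume spike are hypothetical; the RSI and volume values are taken from the five-day example in the Execution section. It contrasts fixed theoretical bounds with bounds estimated from the data.

```python
def min_max_scale(values, lo=None, hi=None):
    """Linearly map values into [0, 1].

    If lo/hi are given (e.g. the theoretical 0 and 100 bounds of RSI), they are
    used directly; otherwise the observed min and max of `values` are used.
    """
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    if hi == lo:
        raise ValueError("min-max scaling is undefined for a constant series")
    return [(v - lo) / (hi - lo) for v in values]

rsi = [55.2, 60.1, 48.5, 68.3, 71.0]
rsi_scaled = min_max_scale(rsi, lo=0.0, hi=100.0)  # fixed theoretical bounds

volume = [2_500_000, 2_800_000, 2_300_000, 3_500_000, 3_900_000]
before = min_max_scale(volume)                 # bounds estimated from the data
after = min_max_scale(volume + [20_000_000])   # same data plus one hypothetical spike

print([round(v, 3) for v in rsi_scaled])
print([round(v, 3) for v in before])
print([round(v, 3) for v in after])
```

With fixed bounds, scaled RSI is simply RSI / 100 and cannot be disturbed by new data. With data-driven bounds, the single spike pushes every earlier volume reading below 0.1, which is the compression described above.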

Z-Score Standardization

Z-Score standardization rescales the dataset using its statistical properties, specifically its mean and standard deviation. Each data point is transformed into a “Z-score” that represents the number of standard deviations it lies from the dataset’s mean. This centers the data at a mean of 0 and scales it to a standard deviation of 1. The strategic advantage of this method is its fit with algorithms that expect zero-centered, unit-variance inputs, including the many statistical models built on an assumption of normally (Gaussian) distributed inputs. The transformation only shifts and rescales the data, however; it does not make a skewed or fat-tailed distribution normal.

It excels in comparing values from different datasets that have different means and standard deviations. For instance, it allows a volatility reading from one asset to be directly compared to a volume surge in another by placing both on the common scale of statistical significance. The protocol’s primary limitation is its vulnerability to outliers, which is a direct consequence of its reliance on the mean and standard deviation. Both of these statistical measures are themselves highly sensitive to extreme values. An outlier will pull the mean and inflate the standard deviation, thereby distorting the Z-scores of all other data points in the set.
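The outlier vulnerability can be shown with a short sketch. It uses the population standard deviation (so the result has exactly unit variance) and a hypothetical series of daily returns, first without and then with a single crash day appended; the function name `z_scores` is illustrative.

```python
import statistics

def z_scores(values):
    """Standardize to mean 0 and unit (population) standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("Z-score is undefined for a constant series")
    return [(v - mu) / sigma for v in values]

# Hypothetical daily returns, then the same series with one crash day appended.
returns = [0.004, -0.002, 0.001, 0.003, -0.004, 0.002, -0.001, 0.000]
with_shock = returns + [-0.10]

clean = z_scores(returns)
shocked = z_scores(with_shock)
print(f"z of the +0.4% day without the shock: {clean[0]:.2f}")
print(f"z of the same day with the shock:     {shocked[0]:.2f}")
```

The +0.4% day falls from about 1.45 standard deviations above the mean to about 0.47, even though nothing about that day changed: the crash pulled the mean down and inflated the standard deviation.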

The strategic selection of a normalization method aligns the data’s structure with the algorithm’s analytical assumptions.

Robust Scaling

This protocol was specifically designed to address the weaknesses of Z-score standardization in the presence of outliers. Instead of using the mean and standard deviation, Robust Scaling uses the median and the Interquartile Range (IQR), which is the range between the 25th and 75th percentiles of the data. The median is, by definition, resistant to the influence of extreme values, and the IQR provides a measure of statistical dispersion that is similarly insensitive to outliers at the tails of the distribution. The resulting scaled data is centered around the median and is more representative of the bulk of the data, with outliers having a much-reduced impact on the overall scaling.

This makes Robust Scaling a strong strategic choice for datasets known to contain significant anomalies or for strategies that must maintain consistent performance through periods of high market stress and unpredictable events. It is particularly well-suited for mean-reversion strategies, where the identification of a true central tendency, undisrupted by outliers, is the core of the trading logic.
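A minimal Robust Scaling sketch using only the standard library is shown below; it applies the median and IQR to a hypothetical return series with and without a crash day. Quartile conventions differ between libraries, so the choice of `method="inclusive"` (which treats the sample minimum and maximum as the 0th and 100th percentiles) is an assumption; scikit-learn's `RobustScaler` is a common production alternative.

```python
import statistics

def robust_scale(values):
    """Center on the median and divide by the interquartile range (Q3 - Q1)."""
    med = statistics.median(values)
    # 'inclusive' treats the sample min and max as the 0th and 100th percentiles.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    if iqr == 0:
        raise ValueError("robust scaling is undefined when the IQR is zero")
    return [(v - med) / iqr for v in values]

# Hypothetical daily returns, then the same series with one crash day appended.
returns = [0.004, -0.002, 0.001, 0.003, -0.004, 0.002, -0.001, 0.000]
with_shock = returns + [-0.10]

clean = robust_scale(returns)
shocked = robust_scale(with_shock)
print(f"+0.4% day: {clean[0]:.2f} without shock, {shocked[0]:.2f} with shock")
print(f"crash day itself: {shocked[-1]:.1f}")
```

The +0.4% day keeps a scaled value of about 1.0 whether or not the crash is present, because neither the median nor the IQR moves much. The crash day itself still maps to -25: robust scaling protects the scaling of the bulk of the data, but it does not bound the outlier.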

Comparative Protocol Analysis

A disciplined evaluation of normalization protocols is essential for the construction of a resilient trading system. The following table provides a strategic comparison of the primary methods, offering a clear framework for selecting the appropriate technique based on specific data characteristics and algorithmic requirements.

| Protocol | Mechanism | Optimal Use Case | Outlier Sensitivity | Distributional Assumption |
|---|---|---|---|---|
| Min-Max Scaling | Linearly scales data to a fixed range (e.g. [0, 1]). | Bounded indicators like RSI or Stochastics. | Very High. New min/max values rescale the entire dataset. | None. Preserves the original distribution’s shape. |
| Z-Score Standardization | Rescales data based on mean and standard deviation. | Comparing features with different units for models assuming normality. | High. Mean and standard deviation are both sensitive to outliers. | None strictly; Z-scores are most interpretable when the data is approximately Gaussian (normal). |
| Robust Scaling | Rescales data based on median and Interquartile Range (IQR). | Unbounded data with known or expected outliers (e.g. price returns). | Low. Median and IQR are inherently resistant to extreme values. | None. Effective for skewed or non-normal distributions. |

Lookahead Bias and the Normalization Horizon

A critical and often overlooked aspect of implementing data normalization is the temporal dimension. How the normalization parameters (e.g. the mean and standard deviation for Z-score, or the min and max for Min-Max scaling) are calculated can introduce a subtle but fatal flaw into a backtest: lookahead bias. If one calculates the normalization parameters using the entire historical dataset and then applies them to that same dataset for backtesting, the model at the beginning of the period is being given information from the future.

The minimum and maximum values for the whole period, for example, are not known until the period is over. This contaminates the backtest, producing unrealistically optimistic performance metrics that will not be replicable in live trading.
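The leak is easy to demonstrate. In the sketch below (function names and prices are hypothetical), the full-sample scaled value for the second day changes when a later rally is appended, something that could never happen in live trading. The expanding-window value uses only data up to and including that day, on the assumption that the current observation is known at decision time, and is unaffected.

```python
def full_sample_min_max(values):
    """Min-max scaling with parameters from the whole series (leaks future data)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def expanding_min_max(values):
    """Min-max scaling using only observations up to and including each point."""
    scaled = []
    for t, v in enumerate(values):
        lo, hi = min(values[: t + 1]), max(values[: t + 1])
        # Undefined until at least two distinct values have been observed.
        scaled.append((v - lo) / (hi - lo) if hi > lo else float("nan"))
    return scaled

history = [100.0, 102.0, 101.0]
later = history + [120.0]  # a hypothetical future rally

# Second day's value (index 1) under each scheme, before and after the future arrives.
print(full_sample_min_max(history)[1], full_sample_min_max(later)[1])
print(expanding_min_max(history)[1], expanding_min_max(later)[1])
```

Under full-sample scaling, the second day's reading drops from 1.0 to 0.1 once the rally is known; a backtest built this way would be trading on a value no live system could have computed.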

To execute normalization correctly and maintain the integrity of a simulation, the parameters must be calculated using only data that would have been available at that point in time. This is typically achieved through one of two methods:

- Rolling Window Normalization: The normalization parameters are calculated using a fixed-length rolling window of past data (e.g. the last 252 trading days). For each new data point, the window slides forward, and the parameters are recalculated. This method is adaptive and responds to recent market dynamics.

- Expanding Window Normalization: The parameters are calculated using all available historical data up to the current point. The window grows over time. This method is more stable but less responsive to recent changes in market regime.
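Both schemes can be sketched with one helper. The name `trailing_zscore`, its parameters, and the use of the sample standard deviation are assumptions; pandas users would more typically combine `Series.rolling(...)` or `Series.expanding(...)` with `.shift(1)` so the current point is excluded. The series is hypothetical, with a level shift at index 10 standing in for a regime change.

```python
import statistics

def trailing_zscore(values, window=None, min_periods=2):
    """Z-score each point against observations strictly before it.

    window=None uses an expanding window (all prior data); an integer uses a
    rolling window of that many prior observations. Returns None where there is
    not enough history or the trailing standard deviation is zero.
    """
    needed = min_periods if window is None else window
    out = []
    for t, x in enumerate(values):
        start = 0 if window is None else max(0, t - window)
        past = values[start:t]
        if len(past) < max(needed, 2):  # stdev needs at least two points
            out.append(None)
            continue
        mu, sigma = statistics.fmean(past), statistics.stdev(past)  # sample std (ddof=1)
        out.append((x - mu) / sigma if sigma > 0 else None)
    return out

# Hypothetical series with a level shift (regime change) at index 10.
series = [100.0, 101.0] * 5 + [120.0, 121.0] * 5

rolling = trailing_zscore(series, window=4)
expanding = trailing_zscore(series)
print(f"t=14 rolling:   {rolling[14]:.2f}")
print(f"t=14 expanding: {expanding[14]:.2f}")
```

Shortly after the shift, the rolling version already treats 120 as slightly below its recent norm (a negative Z-score), while the expanding version still flags it as high (roughly +1.5), which is the adaptivity trade-off described above. Because each value depends only on prior data, truncating the series never changes earlier outputs.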

The choice between a rolling and expanding window is another strategic decision. A rolling window is often preferred for strategies that seek to adapt to changing market conditions, while an expanding window may be suitable for modeling more stable, long-term relationships. The failure to implement such a temporally correct normalization scheme is a fundamental error in system design that invalidates any subsequent performance analysis.

Execution

Operationalizing the Z-Score Protocol

The implementation of a normalization protocol is a precise, procedural task. To illustrate, we will detail the operational steps of applying Z-Score standardization to a raw market data feed. This protocol is chosen for its widespread use in statistical models. The objective is to transform multiple data series, each with its own native scale and distribution, into a unified format where each value represents its statistical significance relative to its recent history.

This process is fundamental to any multi-factor model where inputs like price velocity, trading volume, and market volatility must be synthesized into a single trading signal. The execution requires a disciplined application of a rolling statistical calculation to avoid lookahead bias and ensure the model is only ever operating on information that would have been available in a live environment.

Consider a trading model that uses three features: the 20-day simple moving average (SMA) of the closing price, the 20-day SMA of trading volume, and the 14-day Relative Strength Index (RSI). Each feature has a different scale. The price SMA is in dollars, the volume SMA is in shares (here, in the millions), and the RSI is an index value between 0 and 100. The following table shows the raw data for a hypothetical security over a five-day period, which will serve as our input.

Input Data before Normalization

| Day | Price SMA (USD) | Volume SMA (Shares) | RSI (Index) |
|---|---|---|---|
| 1 | 150.25 | 2,500,000 | 55.2 |
| 2 | 151.00 | 2,800,000 | 60.1 |
| 3 | 149.80 | 2,300,000 | 48.5 |
| 4 | 152.50 | 3,500,000 | 68.3 |
| 5 | 153.10 | 3,900,000 | 71.0 |

Procedural Application of Z-Score

To correctly apply Z-Score standardization without lookahead bias, we use a rolling window. For this example, we assume a 50-day rolling window to calculate the mean and standard deviation, so each day in the table above would be standardized against the 50 trading days preceding it. The Z-score for a data point x is calculated as: Z = (x − μ) / σ, where μ is the mean and σ is the standard deviation of the previous 50 days.

- Establish the Lookback Period: Define a rolling window, for instance, 50 periods. This is a critical parameter to be optimized during strategy development.

- Calculate Rolling Statistics: For each time step (e.g. each day), calculate the mean (μ) and standard deviation (σ) for each feature using the data from the preceding 50 periods.

- Apply the Transformation: For the current data point of each feature, apply the Z-score formula using the rolling statistics calculated in the previous step. This ensures no future information is used.

- Integrate into the Model: The resulting Z-scores, now centered around 0 and scaled by standard deviation, are the inputs for the algorithmic model. A Z-score of +2.5 in volume is now directly comparable to a Z-score of -1.5 in RSI.
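The four steps can be traced on the five-day input table. Because the table holds only five rows, the sketch below shortens the lookback to three days purely for illustration (the 50-day window in the text would need 50 prior days of history); the resulting Z-scores are therefore noisy and should not be read as signals. The names `rolling_zscores` and `LOOKBACK` are hypothetical, and the sample standard deviation is an assumed convention.

```python
import statistics

# Five-day feature table from the example above.
features = {
    "price_sma": [150.25, 151.00, 149.80, 152.50, 153.10],
    "volume_sma": [2_500_000, 2_800_000, 2_300_000, 3_500_000, 3_900_000],
    "rsi": [55.2, 60.1, 48.5, 68.3, 71.0],
}

# Step 1: lookback period (illustration only; the text assumes 50).
LOOKBACK = 3

def rolling_zscores(values, lookback):
    """Steps 2-3: standardize each day against the `lookback` preceding days."""
    scores = []
    for t, x in enumerate(values):
        if t < lookback:
            scores.append(None)  # not enough history yet
            continue
        past = values[t - lookback:t]
        mu, sigma = statistics.fmean(past), statistics.stdev(past)
        scores.append((x - mu) / sigma if sigma > 0 else float("nan"))
    return scores

# Step 4: the model receives one row of comparable Z-scores per day.
z = {name: rolling_zscores(vals, LOOKBACK) for name, vals in features.items()}
for day in range(LOOKBACK, len(features["rsi"])):
    row = {name: round(scores[day], 2) for name, scores in z.items()}
    print(f"Day {day + 1}: {row}")
```

Dollars, shares, and index points all come out in the same unit (standard deviations from the trailing mean), so the three columns of each output row can be combined directly.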

This disciplined, period-by-period calculation, whether over a rolling or an expanding window, is essential for applying normalization within a historical simulation. It faithfully replicates the flow of information that would occur in a live trading environment, where decisions must be made based solely on past data.

Quantifying the Performance Impact

The ultimate measure of a normalization protocol’s effectiveness is its impact on strategy performance. An uncalibrated model, susceptible to magnitude bias and outlier distortion, will typically underperform a properly calibrated equivalent. The difference becomes evident when key performance indicators (KPIs) are compared across backtests run with raw data versus normalized data. The following table presents hypothetical backtest results for a multi-factor quantitative strategy over a five-year period, illustrating the performance differential created by the application of different normalization techniques.

Systematic normalization is not a minor data-cleaning step; it is a core determinant of a strategy’s profitability and robustness.

Hypothetical Backtest Performance Comparison (5-Year Period)

| Performance Metric | No Normalization | Z-Score Standardization | Robust Scaling |
|---|---|---|---|
| Cumulative Return | 35.4% | 72.8% | 81.2% |
| Annualized Return | 6.25% | 11.55% | 12.62% |
| Annualized Volatility | 18.5% | 15.2% | 14.8% |
| Sharpe Ratio | 0.34 | 0.76 | 0.85 |
| Maximum Drawdown | -35.2% | -22.1% | -19.8% |
| Sortino Ratio | 0.48 | 1.08 | 1.22 |

Within this hypothetical comparison, the pattern is clear. The strategy with no normalization yields a low Sharpe ratio (0.34) and a severe maximum drawdown (-35.2%), indicating poor risk-adjusted returns. Its performance would likely be dominated by a single high-magnitude feature (e.g. volume) and periodically destabilized by outlier events. The application of Z-Score Standardization improves every metric, more than doubling the Sharpe ratio (from 0.34 to 0.76) and reducing the maximum drawdown from -35.2% to -22.1%.

This illustrates the value of a coherent feature space. The strategy employing Robust Scaling shows a further incremental improvement, particularly in the risk metrics (Sharpe and Sortino ratios, maximum drawdown). This suggests that, for this particular dataset, outliers were a meaningful source of instability, and the use of an outlier-resistant protocol provided a tangible performance edge. A sound normalization protocol can therefore be a direct driver of alpha and risk mitigation.
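The return and Sharpe figures in the hypothetical table are internally consistent under two assumptions the table does not state: geometric annualization over five years and a zero risk-free rate in the Sharpe ratio. The sketch below re-derives them from the table's cumulative returns and volatilities. It checks arithmetic only and says nothing about whether the backtests themselves are realistic; the Sortino ratio and maximum drawdown cannot be recomputed without the underlying return series.

```python
# Figures copied from the hypothetical backtest table above (decimals, not percent).
TABLE = {
    "No Normalization": {"cumulative": 0.354, "annualized": 0.0625, "vol": 0.185, "sharpe": 0.34},
    "Z-Score Standardization": {"cumulative": 0.728, "annualized": 0.1155, "vol": 0.152, "sharpe": 0.76},
    "Robust Scaling": {"cumulative": 0.812, "annualized": 0.1262, "vol": 0.148, "sharpe": 0.85},
}
YEARS = 5

def annualize(cumulative, years):
    """Geometric annualization (assumed): (1 + R) ** (1 / years) - 1."""
    return (1.0 + cumulative) ** (1.0 / years) - 1.0

def sharpe(annual_return, annual_vol, risk_free=0.0):
    """Sharpe ratio; the zero risk-free default is an assumption, not stated in the table."""
    return (annual_return - risk_free) / annual_vol

for name, row in TABLE.items():
    ann = annualize(row["cumulative"], YEARS)
    sr = sharpe(row["annualized"], row["vol"])
    print(f"{name}: annualized {ann:.2%} vs table {row['annualized']:.2%}; "
          f"Sharpe {sr:.2f} vs table {row['sharpe']:.2f}")
```

All three columns reproduce to within rounding, which is a useful sanity check to run on any reported performance table before comparing strategies.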


Reflection

The Calibrated System

The rigorous application of data normalization is a foundational layer in the construction of an institutional-grade trading system. It reflects a core principle of quantitative analysis: that the integrity of a model’s inputs dictates the quality of its outputs. An algorithm, however sophisticated, cannot produce a coherent signal from incoherent data. Viewing normalization as a mere preparatory task is to misunderstand its strategic importance.

It is an act of system calibration, of defining the very reality in which the strategy will operate. The choice of protocol and its careful, temporally correct implementation is a primary determinant of a strategy’s robustness, its adaptability, and its ultimate capacity to generate persistent, risk-adjusted returns. The performance metrics are not simply numbers; they are the emergent properties of a well-calibrated system operating in a complex environment. The true edge lies not in a single parameter or rule, but in the architectural soundness of the entire signal processing chain, beginning with the first moment of data contact.
