Concept

The Asymmetry of Trust in Data Aggregation

The discourse surrounding data security often revolves around erecting impenetrable perimeters. Yet, the foundational challenge in any data-driven process, particularly in sensitive operations like a Request for Proposal (RFP), is not merely about deflecting external threats. It is about managing the inherent vulnerability that arises when one entity must entrust its sensitive information to another. This transfer of data creates an immediate asymmetry of risk.

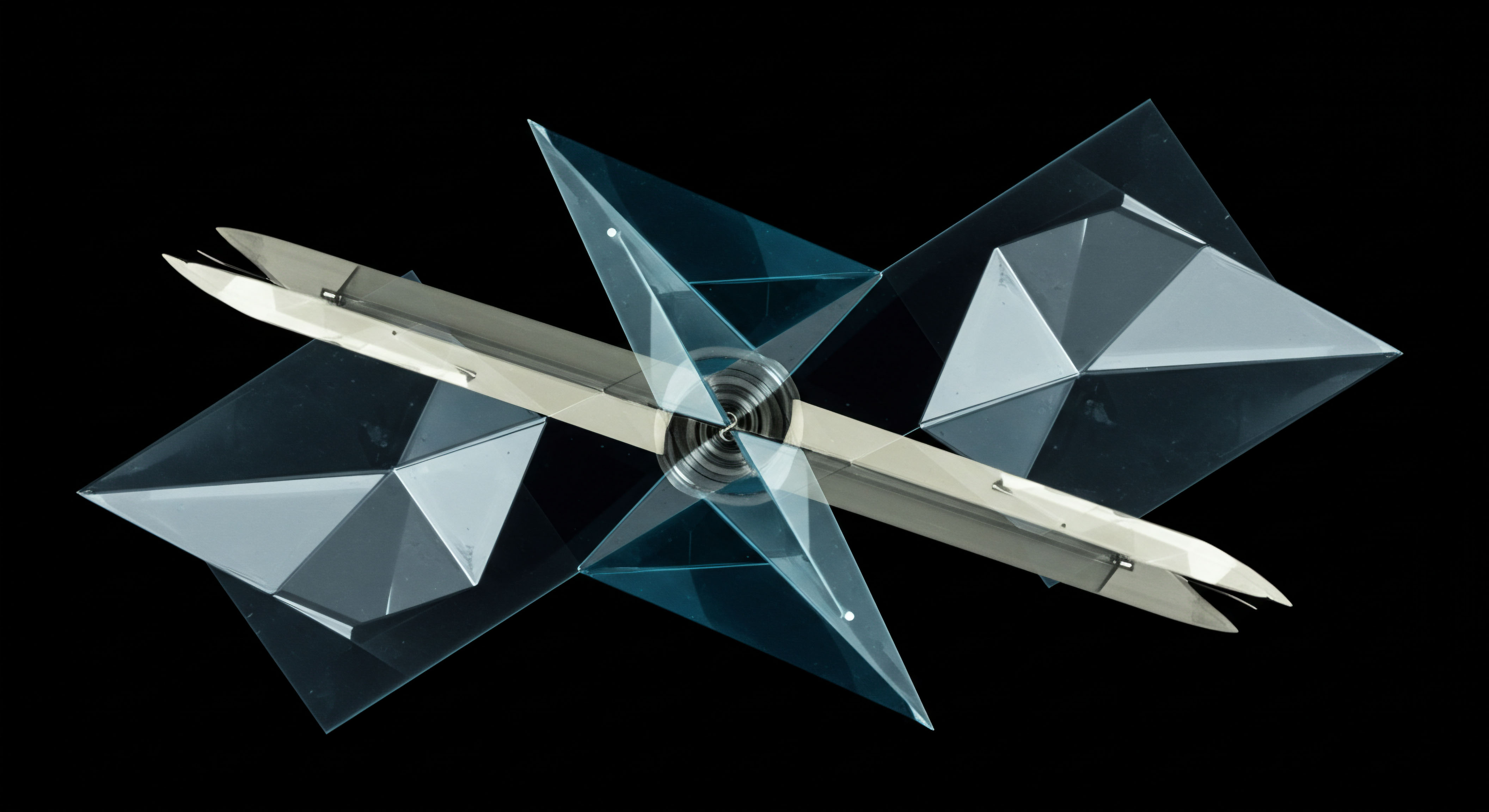

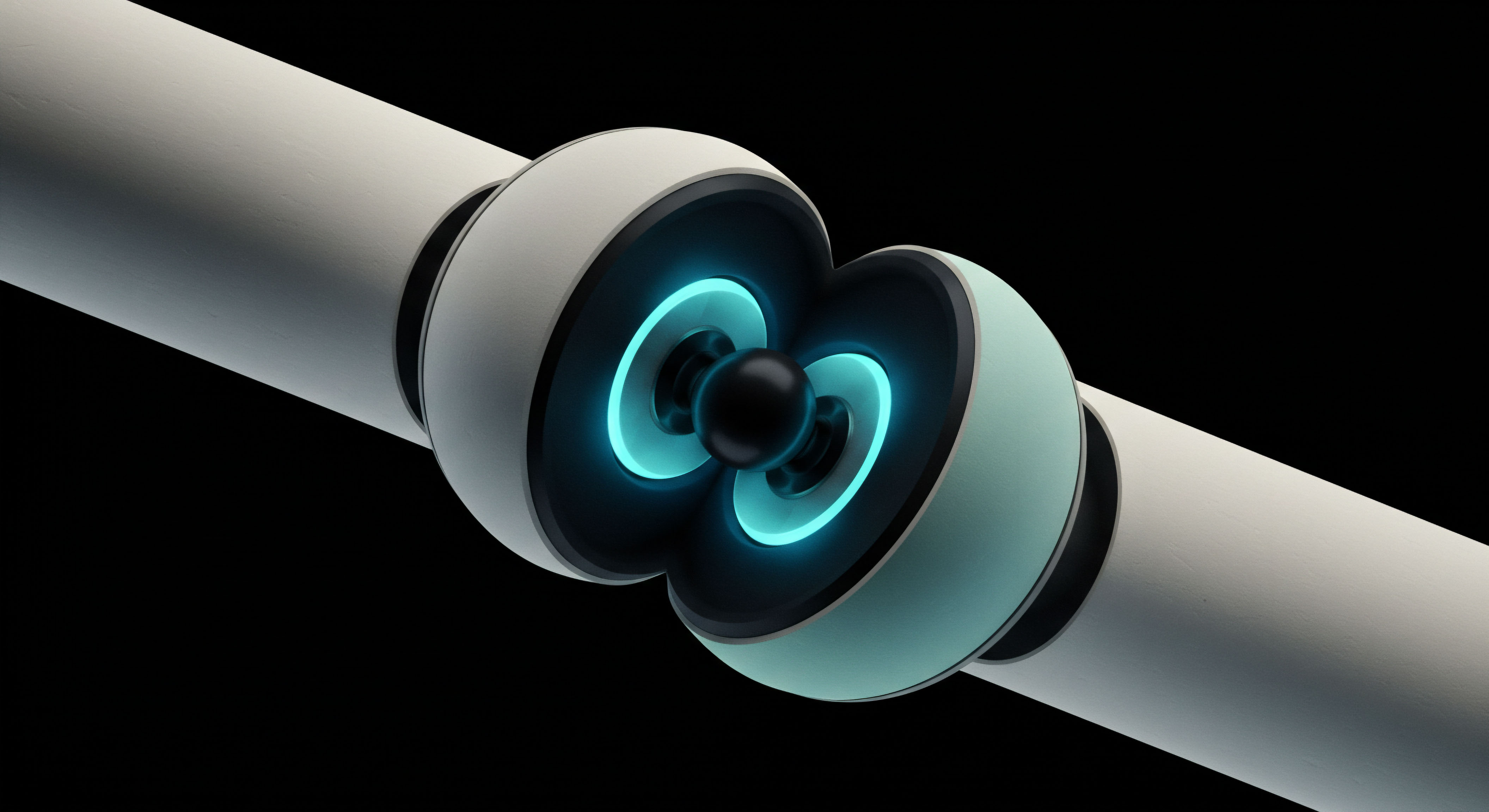

The core architectural divergence between local and global differential privacy is a direct response to this fundamental problem, offering two philosophically distinct models for mediating trust and ensuring data security. It is a choice of where the protective veil is placed: at the source of the data itself, or around the centralized repository where it ultimately resides.

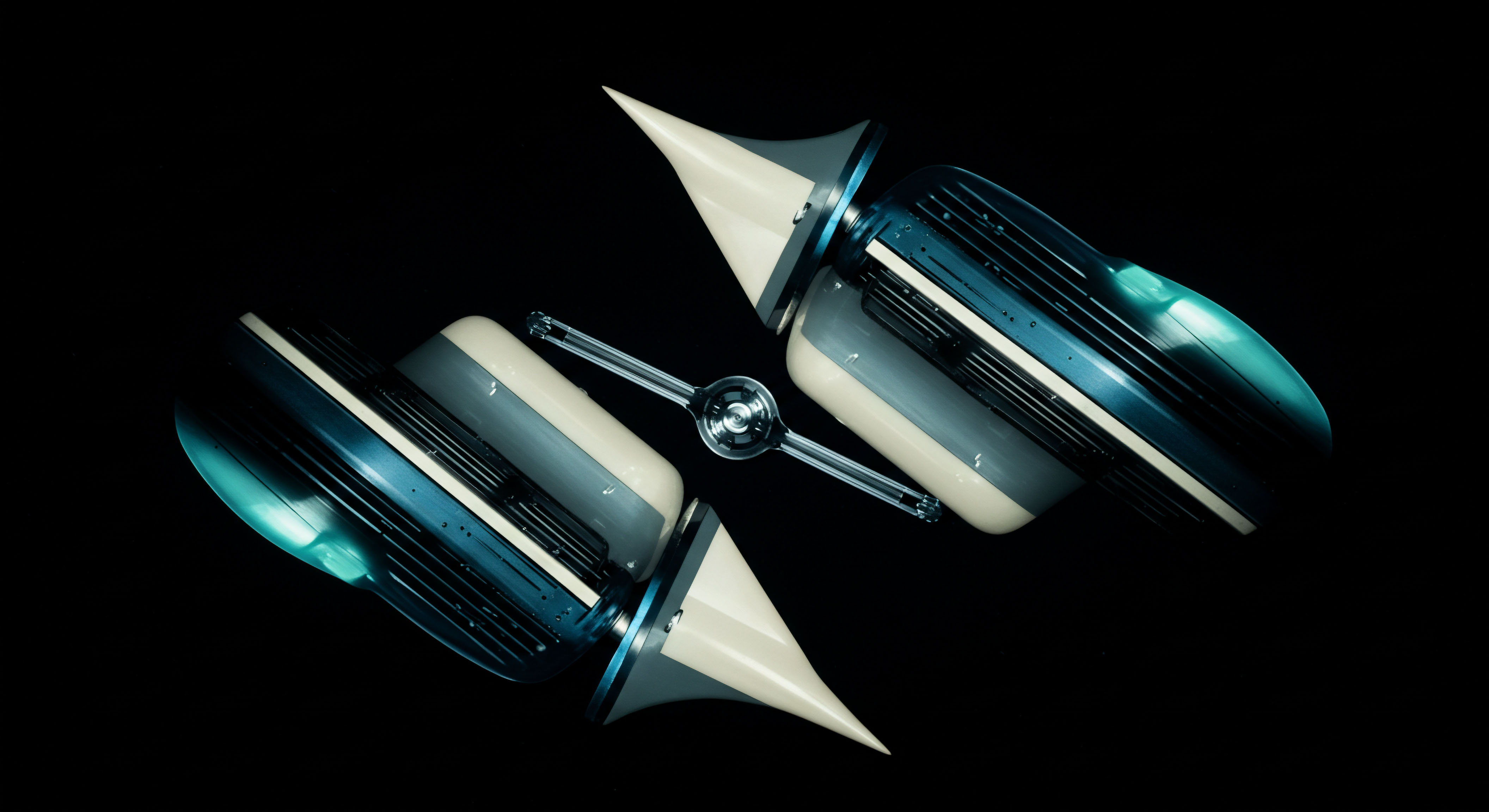

Global differential privacy operates on the principle of a trusted central curator. In this model, individual participants transmit their unaltered, high-fidelity data to a central aggregator. This aggregator, possessing the complete, sensitive dataset, assumes the responsibility of applying privacy-preserving mechanisms. Noise is mathematically introduced into the results of queries run against the database, not into the database itself.

The privacy guarantee, therefore, protects individuals from being identified or having their information inferred from the published outputs of the analysis. The entire system hinges on the absolute integrity and security of this central aggregator. A breach of this central entity compromises the entire dataset in its raw form. For an RFP, this is equivalent to all suppliers submitting their precise, confidential bids to a buyer, trusting that the buyer will secure that information and only use it for its stated purpose.
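The guarantee on published outputs can be stated formally. The following is the standard definition of ε-differential privacy; the symbols ($M$ for the randomized query mechanism, $D$ and $D'$ for two datasets that differ in one participant's record, $S$ for any set of possible outputs) are notation introduced here and do not appear in the text above.

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[M(D') \in S]
$$

Read in RFP terms: whether or not one supplier's bid is in the dataset, the probability of any published result changes by at most a factor of $e^{\varepsilon}$. A smaller ε means a tighter bound and therefore stronger privacy.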

Local differential privacy fundamentally reallocates the responsibility for data protection from a central aggregator to the individual data owner.

In stark contrast, local differential privacy embodies a “trust-no-one” framework. It eliminates the need for a trusted central curator by shifting the act of data perturbation to the periphery, to the individual data owner. Before any information is transmitted, each participant applies a randomization algorithm (a carefully calibrated injection of noise) directly to their own data. The central aggregator, therefore, never receives the true, sensitive information from any single participant.

It only ever collects a stream of already-perturbed data points. While the privacy of each individual is robustly protected from the moment the data leaves their control, this approach comes at the cost of data utility. Aggregating these noisy inputs yields insights that are statistically useful but inherently less precise than those derived from a clean, centralized dataset. In an RFP context, this would be akin to each supplier adding random noise, drawn from an agreed distribution, to their bid price before submission, allowing the buyer to understand the general price distribution without ever knowing any single supplier’s exact offer.
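One classic instance of such a client-side randomization algorithm is randomized response on a yes/no answer. The sketch below is illustrative only: the function names and the example question (whether a supplier's bid exceeds some threshold) are assumptions made here, not part of any particular RFP system. Each participant reports the truth with probability e^ε / (e^ε + 1) and the opposite otherwise, and the aggregator corrects for the known flip rate.

```python
import math
import random
from typing import Sequence


def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Client side: report the true bit with probability e^eps / (e^eps + 1)."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return truth if rng.random() < p_truth else not truth


def estimate_true_fraction(reports: Sequence[bool], epsilon: float) -> float:
    """Aggregator side: debias the observed 'yes' rate (requires epsilon > 0)."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    # E[observed] = p * f + (1 - p) * (1 - f); solve for the true fraction f.
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


# Synthetic example: 30% of 10,000 hypothetical suppliers truly answer "yes".
rng = random.Random(0)
truths = [i < 3000 for i in range(10_000)]
reports = [randomized_response(t, epsilon=1.0, rng=rng) for t in truths]
estimated_fraction = estimate_true_fraction(reports, epsilon=1.0)
```

No single report reveals a supplier's true answer with certainty, yet the estimated fraction converges on the true one as the number of participants grows.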

Strategy

Calibrating Privacy Guarantees against Data Utility

Choosing between local and global differential privacy means balancing the level of trust required against the desired precision of the resulting analysis. The decision has profound implications for the design of secure data systems, especially within the high-stakes environment of corporate procurement and RFPs. It is not a purely technical choice: it reflects the organization’s security posture, its relationship with its partners, and the specific goals of the data analysis.

The Global Model: A Centralized Bastion of Trust

The strategic advantage of the global model is its superior accuracy. Because noise is added once, to the aggregated query result rather than to every individual record, each unit of the “privacy budget” (epsilon, ε) buys far more accuracy. A small amount of noise can provide a strong mathematical guarantee for every individual in the dataset while still allowing accurate and granular insights. Each additional query consumes more of the budget, so the number of questions that can be asked is finite. This makes it the preferred model when the integrity of the results is paramount and a trusted central authority can be established and secured.
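To make the idea of a privacy budget concrete, here is a minimal sketch of a budget tracker. It assumes basic sequential composition, under which the ε values of successive queries on the same data simply add up. The class name `PrivacyBudget` and the example queries are hypothetical; production systems typically use more refined accounting.

```python
class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float) -> None:
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return self.total_epsilon - self.spent

    def charge(self, epsilon: float) -> None:
        """Reserve epsilon for one query, or refuse if it would overspend."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon


budget = PrivacyBudget(total_epsilon=1.0)
budget.charge(0.4)  # e.g. average bid price
budget.charge(0.4)  # e.g. bid count per service line
try:
    budget.charge(0.4)  # a third query would exceed the total of 1.0
    third_query_allowed = True
except RuntimeError:
    third_query_allowed = False
```

Refusing the third query is a design choice in this sketch; an alternative is to answer it with a smaller ε and correspondingly more noise.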

In an RFP setting, a global model would allow a buyer to conduct sophisticated analyses. For instance, they could accurately calculate the average bid price for a specific service line, identify correlations between pricing and vendor-provided metrics, or perform clustering to identify different tiers of suppliers. However, this power comes with a significant strategic liability. Suppliers must place complete trust in the buyer’s systems and integrity.

The risk of a data breach, whether malicious or accidental, is concentrated and catastrophic. A leak would expose the sensitive, un-noised commercial strategies of every participating supplier, causing irreparable damage to relationships and market standing.

- Trust Requirement: High. Participants must trust the central aggregator completely with their raw data.

- Data Accuracy: High. Minimal noise is introduced at the final stage of analysis, preserving data utility.

- Security Focus: Perimeter defense. The primary strategic effort is in securing the central database and the aggregator’s infrastructure.

- RFP Application: Ideal for internal analysis by a procurement department that has strong data governance and needs to perform detailed comparisons between bids.

The Local Model: A Distributed Defense-in-Depth

The local model prioritizes individual privacy and minimizes trust above all else. Its strategic strength lies in its resilience to breaches of the central aggregator. Since the aggregator never holds the true data, a compromise of its systems yields only a collection of noisy, already-perturbed information.

This model is strategically sound when dealing with highly sensitive data, untrusted environments, or a user base that is unwilling to share raw information. Apple, for example, uses a form of local DP to gather usage statistics from iOS devices without accessing users’ personal data.

For RFPs, a local model could be used to gather market intelligence without exposing individual suppliers. A buyer could solicit “noisy” bids to understand the general price range for a product or service before initiating a formal, high-trust RFP process. This allows for preliminary market analysis without creating a high-risk repository of sensitive commercial data. The strategic trade-off is a significant loss in accuracy.

The amount of noise required at the individual level to provide a meaningful privacy guarantee is substantial. When these noisy data points are aggregated, the resulting statistics are approximations, lacking the precision of the global model.
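The size of this accuracy gap can be worked out for a simple case. The comparison below is a sketch under stated assumptions: $n$ suppliers, bids clipped to a known range $[a, b]$, the Laplace mechanism in both models, and the same ε. The symbols are notation introduced here.

$$
\text{Global: } \hat{\mu} = \frac{1}{n}\sum_{i=1}^{n} x_i + \mathrm{Lap}\!\left(\frac{b-a}{n\varepsilon}\right),
\qquad \text{noise std} = \frac{\sqrt{2}\,(b-a)}{n\varepsilon}
$$

$$
\text{Local: } \hat{\mu} = \frac{1}{n}\sum_{i=1}^{n} \left( x_i + \mathrm{Lap}\!\left(\frac{b-a}{\varepsilon}\right) \right),
\qquad \text{noise std} = \frac{\sqrt{2}\,(b-a)}{\varepsilon\sqrt{n}}
$$

The ratio of the two standard deviations is $\sqrt{n}$: with 100 suppliers, the local estimate of the average bid is about 10 times noisier than the global one at the same ε.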

| Strategic Factor | Global Differential Privacy | Local Differential Privacy |
|---|---|---|
| Primary Goal | Maximize analytical accuracy while providing a privacy guarantee on the output. | Maximize individual privacy by eliminating the need for a trusted third party. |
| Trust Locus | Placed entirely in the central data aggregator (the RFP issuer). | Distributed; no trust in the aggregator is required by the participants (suppliers). |
| Point of Noise Injection | Applied to the query result after data aggregation. | Applied to individual data points before transmission to the aggregator. |
| Impact on Data Utility | Low impact; results are highly accurate. | High impact; aggregated results are statistical approximations. |
| Vulnerability Profile | Single point of catastrophic failure at the central database. | Resilient to breaches of the central aggregator; vulnerability is at the individual level. |

Execution

Operationalizing Privacy Architectures in RFP Systems

The implementation of a differential privacy framework within an RFP or procurement system requires a deep understanding of the underlying mechanisms and their operational consequences. The architectural choice dictates not only the flow of data but also the nature of the algorithms used, the user experience for participating suppliers, and the types of analysis that can be reliably performed by the procurement entity.

Executing a Global Differential Privacy Framework

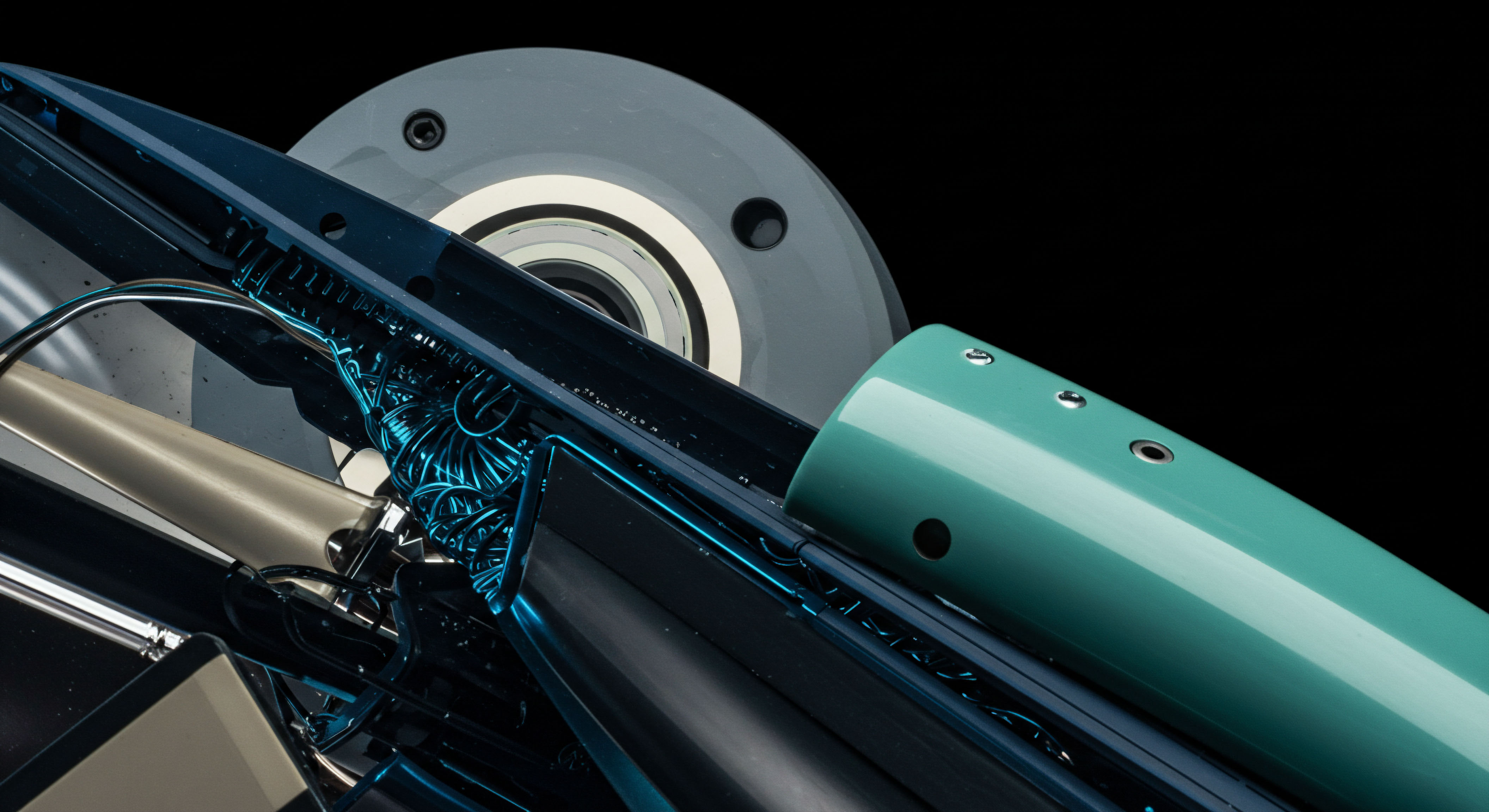

Implementing global DP is an exercise in centralized control and robust data engineering. The core of the system is a secure data vault where raw RFP submissions are stored. Access to this vault must be strictly controlled and logged. The execution flow is sequential and methodical.

- Secure Ingestion: Suppliers submit their complete, un-privatized proposal data through secure, encrypted channels. The system must ensure the integrity and confidentiality of this data in transit and at rest.

- Query Formulation: The procurement analyst formulates a specific question to ask of the dataset. For example, “What is the average proposed cost for vendors with more than five years of experience?”

- Differentially Private Mechanism Application: A middleware layer intercepts the query. Instead of running the query directly on the database, it runs a differentially private version. For a numeric query like an average, this typically involves calculating the true average and then adding a calibrated amount of noise drawn from a Laplace or Gaussian distribution. The amount of noise is determined by the query’s sensitivity and the allocated privacy budget (epsilon).

- Result Delivery: The noisy result is returned to the analyst, who never sees the raw data. The figure is statistically close to the true average, but the added noise leaves plausible deniability for any individual contributor.
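The mechanism-application step above can be sketched as a Laplace mechanism for an average. This is a minimal illustration, not a hardened implementation: the function names, the clipping bounds, and the synthetic bids are all assumptions made here, and the number of matching bids is treated as public. Naive floating-point Laplace sampling also has known weaknesses, so a vetted differential privacy library is the safer choice in practice.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_mean(values: Sequence[float], lower: float, upper: float,
            epsilon: float, rng: random.Random) -> float:
    """Epsilon-DP estimate of the mean, assuming the count n is public."""
    # Clip each bid to [lower, upper] so one bid has bounded influence.
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    # Replacing one bid changes the clipped mean by at most (upper - lower) / n.
    sensitivity = (upper - lower) / n
    true_mean = sum(clipped) / n
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


# Synthetic example: 200 hypothetical bids between 80,000 and 120,000.
rng = random.Random(42)
bids = [rng.uniform(80_000, 120_000) for _ in range(200)]
noisy_average = dp_mean(bids, lower=50_000, upper=150_000, epsilon=1.0, rng=rng)
```

With these illustrative numbers the noise scale is (150,000 - 50,000) / (200 × 1.0) = 500, small relative to bids near 100,000, which reflects the accuracy advantage of adding noise only once.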

The successful execution of a global differential privacy model is contingent upon establishing an unimpeachable chain of trust with all data contributors.

Executing a Local Differential Privacy Framework

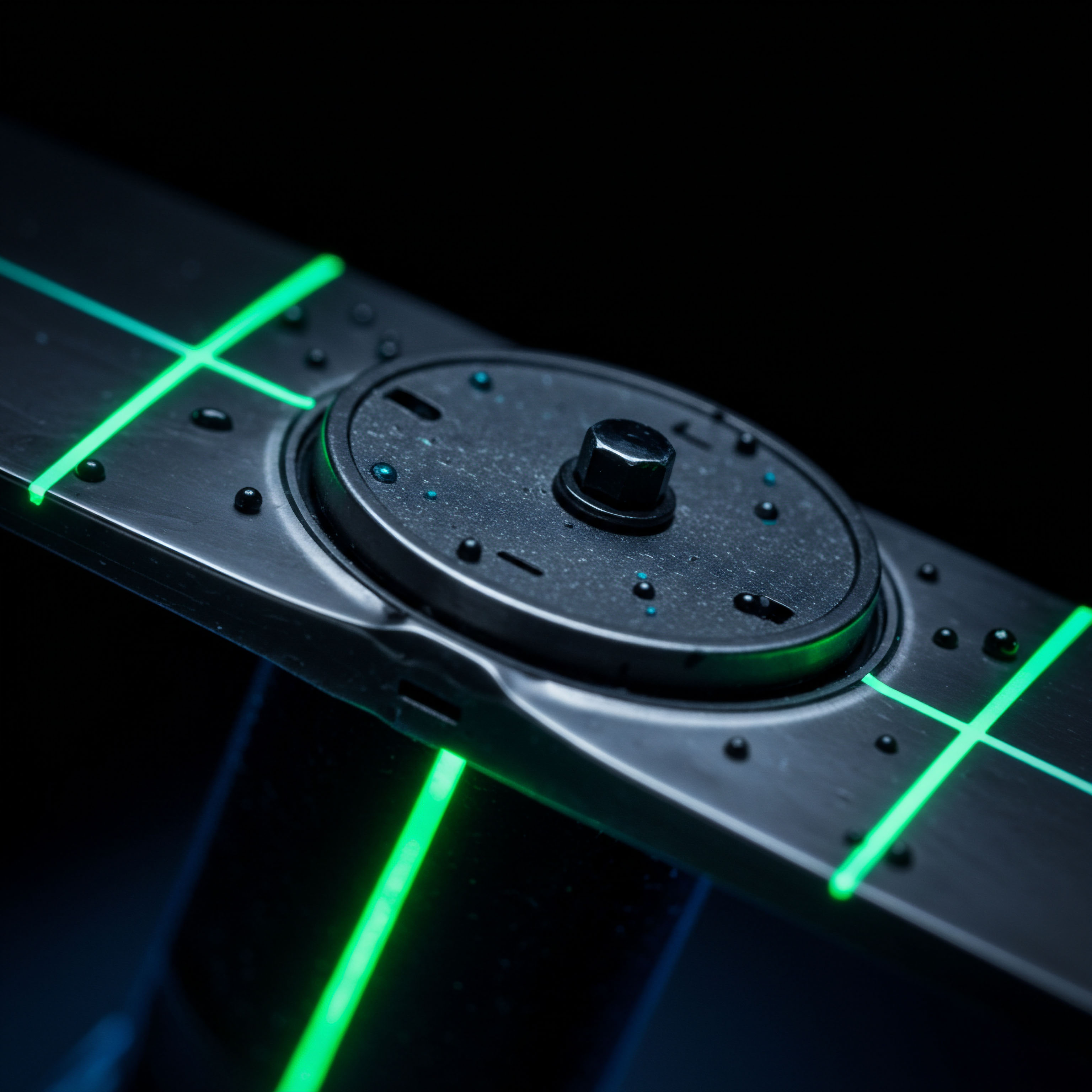

Implementing local DP decentralizes the privacy-preserving mechanism, shifting the operational burden to the client-side. This requires a different technological approach, often involving providing suppliers with specific tools or software to perturb their data before submission.

Randomized Response is the classic local DP mechanism for categorical (for example, yes/no) data. For a bounded numerical value like a bid price, additive noise such as the Laplace mechanism applied on the client is the more natural fit, and the process might be executed as follows:

- Client-Side Perturbation: Before submitting a bid, the supplier’s system uses a pre-agreed algorithm. It might, for instance, add a random value drawn from a known zero-mean distribution to the true bid price. This “noisy” price is what is submitted.

- Data Aggregation: The buyer’s system receives a collection of these noisy prices, none of which can be taken as a supplier’s true price.

- Statistical Reconstruction: The buyer, knowing the statistical properties of the noise that was added at the client-side, can perform a corrective analysis. By aggregating a large number of noisy bids, the buyer can compute an estimated average and distribution of the true prices, as the random noise tends to cancel out at scale. The result is an approximation of the market, not a precise calculation.
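The three steps above can be sketched with client-side Laplace noise. This is an illustrative sketch under assumptions made here: bids are clipped to a publicly agreed range, the function names are hypothetical, and the data is synthetic.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def perturb_bid(true_bid: float, lower: float, upper: float,
                epsilon: float, rng: random.Random) -> float:
    """Client side: clip to the agreed range, then add Laplace noise."""
    clipped = min(max(true_bid, lower), upper)
    # Any two possible bids differ by at most (upper - lower).
    return clipped + laplace_noise((upper - lower) / epsilon, rng)


def estimate_mean(noisy_bids: Sequence[float]) -> float:
    """Buyer side: the noise is zero-mean, so the plain average is unbiased."""
    return sum(noisy_bids) / len(noisy_bids)


# Synthetic example: 5,000 hypothetical suppliers.
rng = random.Random(7)
true_bids = [rng.uniform(80_000, 120_000) for _ in range(5_000)]
noisy_bids = [perturb_bid(b, 50_000, 150_000, epsilon=1.0, rng=rng)
              for b in true_bids]
estimated_average = estimate_mean(noisy_bids)
```

Each submitted price carries noise with a scale of 100,000, so an individual report says almost nothing about the true bid; only the average over thousands of reports becomes informative.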

| Component | Global DP Execution | Local DP Execution |
|---|---|---|
| Core Algorithm | Laplace or Gaussian mechanism applied to query outputs. | Randomized Response or other perturbation techniques applied to individual data. |
| Implementation Point | Server-side, within a trusted data aggregator. | Client-side, on the user’s (supplier’s) device or system before submission. |
| Required Scale | Can provide useful results with a smaller number of participants. | Requires a large number of participants for the noise to aggregate out meaningfully. |
| Analytical Capability | Enables complex, high-accuracy queries (e.g. multi-dimensional analysis). | Primarily suited for simple, large-scale statistical estimations (e.g. histograms, means). |

The choice of execution model for RFP data security is therefore a direct function of the strategic goal. If the objective is to perform a high-fidelity, auditable comparison of a small number of trusted vendors, a global model provides the necessary accuracy. If the goal is to conduct a broad, low-risk market survey across a wide and potentially untrusted pool of suppliers, a local model provides robust privacy protection, albeit at the expense of analytical precision.

Reflection

Privacy as an Architectural Primitive

Ultimately, the integration of differential privacy into a data-handling framework like an RFP system forces a re-evaluation of how we perceive security. It moves the conversation from a reactive posture of building walls to a proactive one of architectural design. The choice between a local and global model is not merely a technical toggle but a declaration of the system’s core philosophy on trust, risk, and the intrinsic value of data. Considering these models compels an organization to look inward at its own operational integrity and outward at the nature of its relationships with its partners.

The resulting system, regardless of the specific model chosen, is one where privacy is not an afterthought or a feature, but a fundamental, structural component of its design. This is the new frontier of data security: not just protecting data, but designing systems that are inherently respectful of the privacy of those who provide it.
