Concept

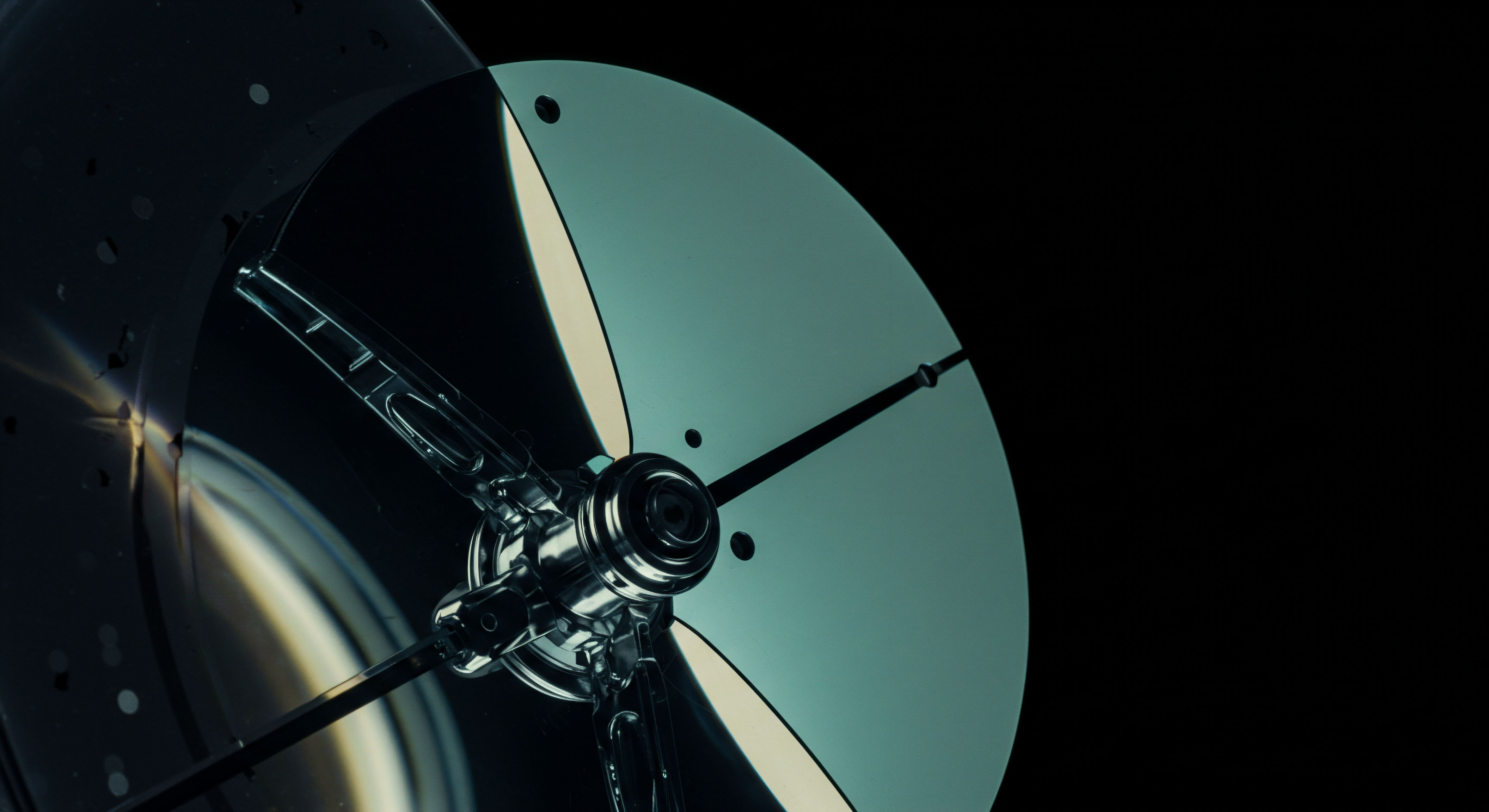

The Chasm between the Simulation and the Arena

Evaluating a machine learning model in a research setting is a sterile, controlled experiment. The data is historical, finite, and often meticulously cleaned. The model’s behavior is predictable because the environment is a closed loop, a digital ghost of markets past. Deploying that same model into the live crypto derivatives market is akin to moving a laboratory specimen into an active, hostile ecosystem.

The transition reveals a profound chasm between the simulated perfection of the backtest and the chaotic, adversarial reality of live production trading. The core challenges are systemic, stemming from the fundamental nature of financial markets as complex adaptive systems where every action creates a reaction.

The primary obstacle in deploying trading models is the environmental shift from a static historical analysis to a dynamic, live market that actively responds to the model’s presence.

In research, data is a fixed asset. In production, it is a volatile, high-velocity stream, rife with inconsistencies and subject to abrupt regime shifts. Crypto markets, in particular, exhibit extreme non-stationarity; the statistical properties of market data, such as mean, variance, and correlation, change over time in unpredictable ways.

A model trained on a period of low volatility may fail catastrophically when a sudden geopolitical event or protocol exploit triggers a market panic. This is concept drift: the statistical relationship between the input variables and the target variable changes over time, so patterns learned during training no longer hold. It is one of the most persistent threats to model viability.

Foundational Pressures on Production Models

Beyond the data itself, the operational pressures of a live environment introduce complexities that are frequently abstracted away during research. Latency, the time delay in data transmission and order execution, becomes a critical variable. A strategy that appears profitable in a backtest, where execution is assumed to be instantaneous, can be rendered unprofitable in the real world by delays of a few milliseconds, or even microseconds for the fastest strategies.

This is particularly acute in derivatives trading, where the pricing of options and futures is highly sensitive to the speed of underlying asset price updates. The infrastructure that supports the model must be engineered for high availability and low latency, a significant technical hurdle involving specialized hardware and network architecture.
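
To make the latency point concrete, the following is a minimal sketch of how a backtest could stop assuming instantaneous execution. It fills an order at the first observed price at or after the signal time plus a fixed delay. The function name, the tick format, and the sample numbers are illustrative assumptions, not part of any particular trading system.

```python
from bisect import bisect_left

def fill_price_with_latency(ticks, signal_ts, latency_ms):
    """Return the first observed price at or after signal_ts + latency_ms.

    ticks: list of (timestamp_ms, price) tuples sorted by timestamp
           (hypothetical format chosen for this sketch).
    Returns None if no tick arrives after the delayed order time.
    """
    arrival = signal_ts + latency_ms
    timestamps = [ts for ts, _ in ticks]
    idx = bisect_left(timestamps, arrival)
    if idx == len(ticks):
        return None
    return ticks[idx][1]

# Made-up tick stream for illustration only.
ticks = [(0, 100.0), (5, 100.2), (20, 100.9), (50, 101.5)]
instant = fill_price_with_latency(ticks, signal_ts=0, latency_ms=0)
delayed = fill_price_with_latency(ticks, signal_ts=0, latency_ms=15)
latency_cost = delayed - instant  # extra price paid by a buyer due to delay
```

In this toy stream, a 15 ms delay moves the fill from the 100.0 tick to the 100.9 tick. A fuller simulation would likely draw latency from a measured distribution rather than use a constant.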

Furthermore, a model in production does not operate in a vacuum. It becomes part of a feedback loop with the market itself. Large orders generated by a model can create market impact, moving prices in an unfavorable direction and eroding the very alpha the model was designed to capture. This self-defeating prophecy is almost impossible to simulate accurately in a research environment.

The model’s predictions influence its own inputs, a recursive challenge that requires sophisticated execution algorithms and risk management overlays to mitigate. Security and compliance also become paramount, as the model interacts with exchange APIs and handles sensitive account information, introducing a surface area for technical and operational risk that is absent in an offline setting.

Strategy

A Systemic Framework for Production Readiness

Bridging the gap from research to production requires a strategic framework that treats the model as one component within a larger, integrated trading system. This approach, often encapsulated by the principles of MLOps (Machine Learning Operations), must be adapted for the unique demands of quantitative crypto trading. The focus shifts from optimizing a single model’s predictive accuracy to building a resilient, observable, and continuously adaptive operational pipeline. This system must be designed to manage the entire lifecycle of a model, from data ingestion and feature engineering to deployment, monitoring, and iterative retraining.

A robust data strategy is the bedrock of this framework. The fragmented and often unreliable nature of crypto data necessitates a sophisticated ingestion and validation process. A production system cannot rely on a single data source; it requires a multi-vendor, multi-exchange pipeline to ensure redundancy and cross-verification. This involves processing not just Level 1 (top-of-book) data, but also Level 2 (full order book depth by price level) and, where an exchange provides it, Level 3 (individual order-level) data, which are essential for understanding market microstructure and liquidity dynamics.
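
Cross-verification across sources can be sketched as a simple median check: take the same instrument’s mid price from several venues and reject any quote that strays too far from the cross-venue median. The venue names, the 0.5% threshold, and the function name below are assumptions made for illustration.

```python
from statistics import median

def validate_quotes(quotes, max_dev=0.005):
    """Split venue quotes into accepted and rejected using the cross-venue median.

    quotes: dict mapping venue name -> mid price.
    max_dev: maximum allowed relative deviation from the median
             (0.5% here is a hypothetical threshold, not a recommendation).
    """
    ref = median(quotes.values())
    accepted, rejected = {}, {}
    for venue, px in quotes.items():
        target = accepted if abs(px - ref) / ref <= max_dev else rejected
        target[venue] = px
    return ref, accepted, rejected

# Made-up quotes; venue_c is roughly 5% away from the others.
ref, ok, bad = validate_quotes(
    {"venue_a": 30010.0, "venue_b": 30000.0, "venue_c": 31500.0}
)
```

A median is used rather than a mean so that a single bad feed cannot drag the reference price toward itself.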

Effective strategy treats model deployment not as a singular event, but as a continuous lifecycle of adaptation and monitoring engineered for resilience.

Data Sources and Their Production Implications

The choice of data inputs has direct consequences for the complexity and robustness of the production system. Each type of data carries its own signature of quality issues and latency characteristics that must be managed strategically.

| Data Type | Primary Use Case in Crypto Derivatives | Key Production Challenge | Strategic Mitigation |
|---|---|---|---|
| Tick Data | High-frequency price prediction, volatility estimation | Massive volume, requires specialized time-series databases and low-latency processing. | Implement dedicated data handlers (e.g. using C++/Rust) and distributed data stores. |
| Order Book Data | Liquidity analysis, market impact modeling, short-term price pressure | High update frequency (snapshots vs. deltas), potential for exchange API inconsistencies. | Develop resilient order book reconstruction logic and cross-exchange validation checks. |
| On-Chain Data | Sentiment analysis, flow tracking, fundamental valuation metrics | Latency in block confirmation, node synchronization issues, data parsing complexity. | Run dedicated nodes, use specialized blockchain data providers, and build asynchronous processing pipelines. |
| Funding Rates | Basis trading, arbitrage strategy inputs | Inconsistent calculation times across exchanges, potential for data errors. | Aggregate data from multiple exchanges, apply smoothing algorithms, and set up anomaly alerts. |
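
As an illustration of the order book reconstruction logic mentioned in the table, here is a minimal sketch that rebuilds a price-level book from a snapshot plus sequenced deltas and refuses to continue past a sequence gap. It assumes consecutive integer sequence numbers and a "size 0 removes the level" convention; real exchange feeds differ in both respects, so treat the class and its field names as hypothetical.

```python
class OrderBook:
    """Price-level book rebuilt from a snapshot plus sequenced deltas."""

    def __init__(self, snapshot_seq, bids, asks):
        self.seq = snapshot_seq
        self.bids = dict(bids)  # price -> size
        self.asks = dict(asks)  # price -> size

    def apply_delta(self, seq, side, price, size):
        """Apply one level update; raise if a sequence gap is detected."""
        if seq <= self.seq:
            return False  # stale or duplicate update, ignore it
        if seq != self.seq + 1:
            # Assumed convention: a gap means the book is unreliable and
            # the caller should request a fresh snapshot.
            raise ValueError(f"sequence gap: expected {self.seq + 1}, got {seq}")
        levels = self.bids if side == "bid" else self.asks
        if size == 0:
            levels.pop(price, None)  # size 0 removes the price level
        else:
            levels[price] = size
        self.seq = seq
        return True

    def best_bid_ask(self):
        return max(self.bids), min(self.asks)

# Made-up snapshot and deltas for illustration.
book = OrderBook(100, [(99.5, 2.0), (99.0, 5.0)], [(100.5, 1.0), (101.0, 3.0)])
book.apply_delta(101, "bid", 99.5, 0)       # best bid level is removed
book.apply_delta(102, "ask", 100.25, 0.7)   # new best ask appears
top = book.best_bid_ask()
```

Raising on a gap rather than silently continuing is a deliberate choice in this sketch: a book built on missed updates can look valid while being wrong.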

Advanced Backtesting and Simulation Protocols

A pivotal strategic shift involves moving beyond simple backtesting to a more holistic simulation environment. A production-grade backtester must be an accurate replica of the live execution engine, accounting for the nuances of the crypto market structure.

- Market Impact Simulation: The backtester must model how the model’s own hypothetical orders would affect the order book and subsequent prices. This prevents overestimation of profitability for liquidity-taking strategies.

- Fee and Slippage Modeling: Precise modeling of exchange fees (maker vs. taker), funding rates, and realistic slippage based on order book depth is non-negotiable. These transaction costs are a primary source of divergence between backtest and live results.

- Latency Simulation: The simulation should introduce realistic network and processing latencies between the signal generation event and the order placement event. This provides a more sober assessment of strategy performance.

- Walk-Forward Optimization: Instead of a single train-test split on historical data, a walk-forward approach involves repeatedly training the model on one period and testing it on the subsequent period. This technique provides a more robust measure of how the model adapts to changing market conditions, helping to diagnose issues with concept drift before deployment.
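
The fee and slippage items in the list above can be sketched by walking the displayed ask levels for a market buy instead of assuming a fill at the best price. The taker fee of 5 basis points, the function name, and the book contents are assumptions for illustration; actual fee schedules vary by exchange and account tier.

```python
def simulate_market_buy(asks, qty, taker_fee=0.0005):
    """Walk ask levels to fill a market buy.

    asks: list of (price, size) sorted by ascending price.
    taker_fee: fee as a fraction of notional (hypothetical 5 bps).
    Returns (avg_price, fee_paid, slippage_vs_best_ask).
    """
    remaining, cost = qty, 0.0
    for price, size in asks:
        take = min(remaining, size)
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("insufficient displayed liquidity for requested quantity")
    avg_price = cost / qty
    fee = cost * taker_fee
    slippage = avg_price - asks[0][0]
    return avg_price, fee, slippage

# Made-up book: a 4-unit buy consumes two full levels and part of a third.
avg, fee, slip = simulate_market_buy(
    [(100.0, 1.0), (100.5, 2.0), (101.0, 5.0)], qty=4.0
)
```

This only captures the immediate cost of consuming displayed depth. It does not model how other participants react afterwards, which is the harder part of market impact simulation.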

This rigorous simulation process serves as the final gateway before a model is exposed to real capital. It provides a data-driven basis for setting risk limits and performance expectations, transforming the deployment process from a leap of faith into a calculated operational procedure.
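
The walk-forward procedure described in the list above amounts to generating a sequence of rolling train and test windows in which the test window always follows the training window in time. A minimal sketch, with hypothetical function and parameter names:

```python
def walk_forward_splits(n_samples, train_size, test_size):
    """Yield (train_indices, test_indices) as consecutive rolling windows.

    Each test window starts immediately after its training window, so no
    future observation leaks into training.
    """
    start = 0
    while start + train_size + test_size <= n_samples:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size  # roll forward by one test window

splits = [
    (list(tr), list(te))
    for tr, te in walk_forward_splits(10, train_size=4, test_size=2)
]
```

With 10 samples, a training window of 4, and a test window of 2, this yields three folds. scikit-learn’s `TimeSeriesSplit` offers a related expanding-window splitter for teams already using that library.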

Execution

The Operational Blueprint for Model Deployment

The execution phase translates strategy into a tangible, high-performance trading system. This is where the architectural decisions regarding software, hardware, and risk management protocols become concrete. The objective is to build a system that not only executes trades based on model signals but also provides a comprehensive framework for monitoring, intervention, and continuous improvement. A disciplined, process-oriented approach is essential to manage the inherent complexities and risks of live algorithmic trading in the crypto derivatives space.

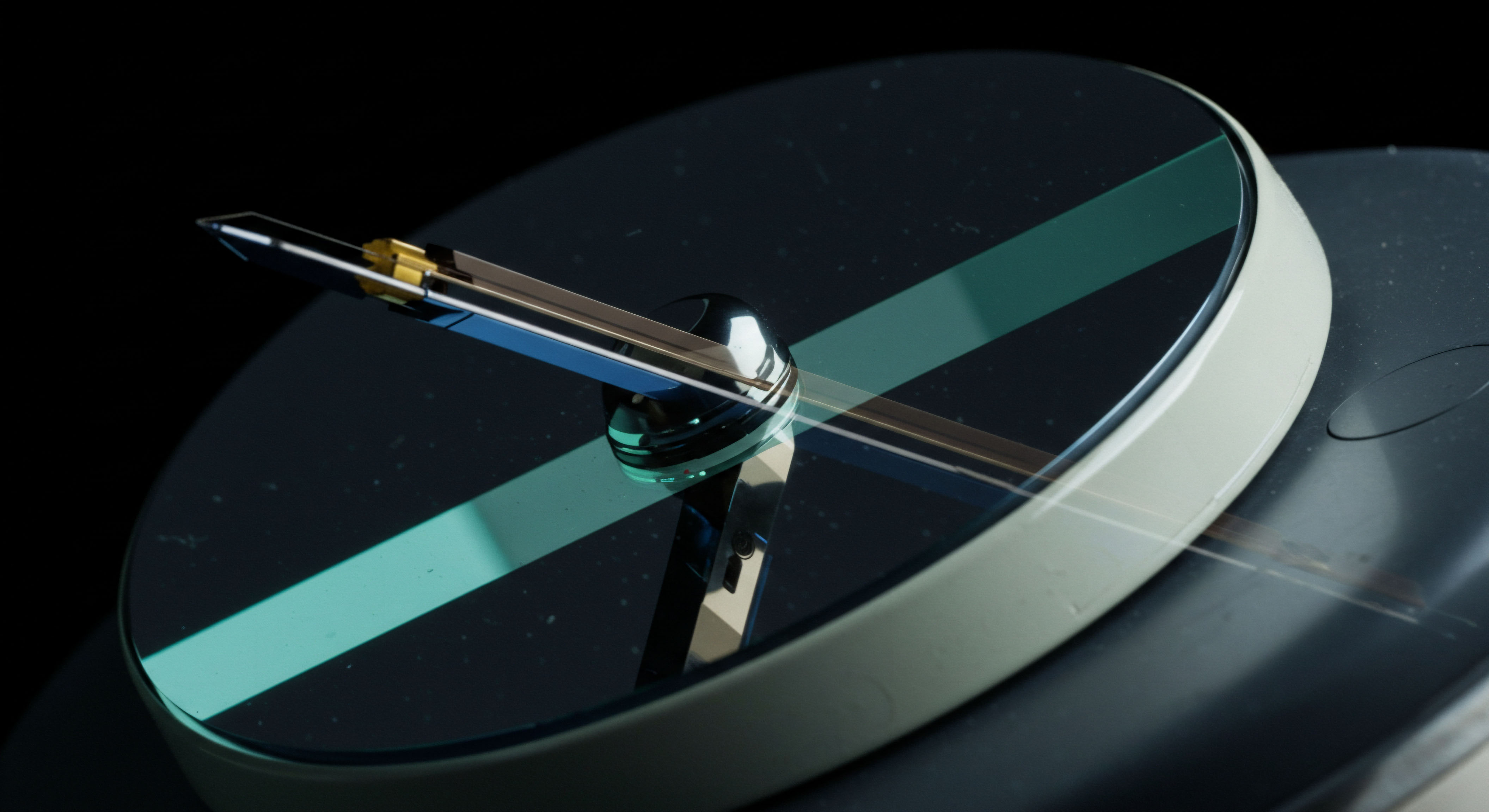

A Phased Deployment Pipeline

A model should never be deployed directly to a live production environment with significant capital. A phased approach allows for the incremental exposure of the model to real-world conditions, providing opportunities to identify and rectify issues with minimal financial impact. This process is a structured gauntlet designed to test every aspect of the model’s interaction with the live market.

- Shadow Trading (Paper Trading): The model runs on a live production server, receiving real-time market data and generating trading signals. However, instead of sending orders to the exchange, it records them in a local database. This phase is critical for verifying the data pipeline, signal generation logic, and basic system stability without any financial risk. It also allows for a direct comparison of hypothetical execution prices against actual market prices.

- Canary Deployment: After successfully passing the shadow trading phase, the model is allocated a very small amount of capital. The goal here is to test the full execution loop, including order placement, management, and communication with the exchange APIs. This phase uncovers potential issues with API rate limits, error handling, and the reconciliation of account balances.

- Gradual Capital Allocation: Assuming the canary deployment performs as expected, capital allocation is slowly increased over time. The scaling process is guided by a predefined set of key performance indicators (KPIs), such as Sharpe ratio, drawdown, and slippage metrics. Any significant deviation from the simulated performance triggers an automatic reduction in capital or a halt to the strategy pending review.
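
The KPI-guided scaling rule in the final phase could look roughly like the sketch below, which compares live KPIs with their simulated counterparts and returns the next allocation. Every threshold here (the 2x drawdown halt, the 0.5x Sharpe floor, the 1.5x step) is a hypothetical placeholder, as are the class and function names; real limits would come from the simulation results and the firm’s risk policy.

```python
from dataclasses import dataclass

@dataclass
class Kpis:
    sharpe: float
    max_drawdown: float       # positive fraction, e.g. 0.08 for 8%
    avg_slippage_bps: float

def next_allocation(current, live, simulated, cap, step=1.5):
    """Return the next capital allocation given live vs. simulated KPIs."""
    # Halt: live drawdown far beyond what simulation suggested (assumed 2x rule).
    if live.max_drawdown > 2.0 * simulated.max_drawdown:
        return 0.0
    # Reduce: Sharpe or slippage materially worse than simulated (assumed rules).
    if (live.sharpe < 0.5 * simulated.sharpe
            or live.avg_slippage_bps > 2.0 * simulated.avg_slippage_bps):
        return current * 0.5
    # Otherwise scale up gradually, never beyond the cap.
    return min(current * step, cap)

# Made-up KPI values for illustration.
sim = Kpis(sharpe=2.0, max_drawdown=0.05, avg_slippage_bps=1.0)
scaled_up = next_allocation(10_000.0, Kpis(1.8, 0.04, 1.2), sim, cap=100_000.0)
reduced = next_allocation(10_000.0, Kpis(1.8, 0.04, 3.0), sim, cap=100_000.0)
halted = next_allocation(10_000.0, Kpis(1.8, 0.12, 1.0), sim, cap=100_000.0)
```

Encoding the rule as a pure function keeps the scaling decision auditable: the same inputs always produce the same allocation.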

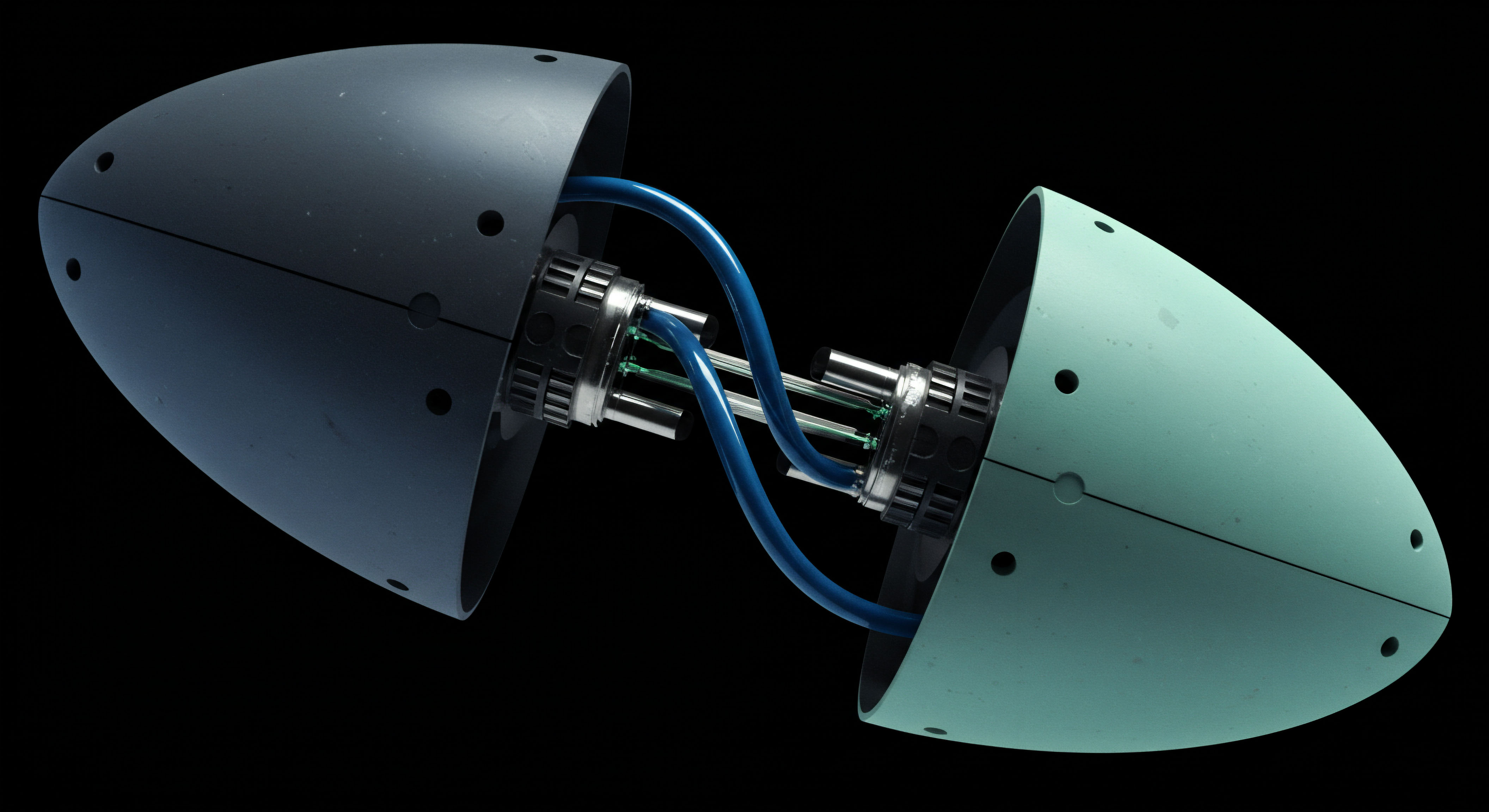

System Integration and Technological Architecture

The underlying technology stack is a critical determinant of a trading system’s performance and reliability. For crypto derivatives, where speed and uptime are paramount, the choice of infrastructure involves a trade-off between performance, cost, and maintainability.

The robustness of a trading model in production is a direct function of the resilience and observability of its underlying technological architecture.

| Architectural Component | On-Premise / Co-located Solution | Cloud-Based Solution (e.g. AWS, GCP) | Key Consideration for Crypto Trading |
|---|---|---|---|
| Data Ingestion | Direct cross-connects to exchange gateways, dedicated servers. | Utilizes cloud provider’s network, virtual machines. | Co-location offers the lowest latency, which is critical for high-frequency strategies. |
| Model Inference | Optimized hardware (e.g. GPUs), C++/Rust inference engine. | Managed ML services (e.g. SageMaker), scalable compute instances. | Cloud offers flexibility and scalability for complex models, while on-premise provides raw performance. |
| Order Execution | Low-latency messaging protocols (e.g. FIX, custom binary). | API calls over the public internet or dedicated cloud interconnects. | Security of API keys and minimizing network hops to the exchange are paramount. |
| Monitoring & Logging | Centralized logging servers (e.g. ELK stack). | Managed services (e.g. CloudWatch, Datadog). | Real-time alerting on performance degradation and system errors is non-negotiable. |

Real-Time Monitoring and the Human Override

Even the most sophisticated automated system requires human oversight. A comprehensive monitoring dashboard is the primary interface between the quantitative trader and the live model. The dashboard must provide an at-a-glance view of system health and performance, tracking a wide array of metrics.

- Performance Metrics: Real-time PnL, Sharpe ratio, slippage vs. benchmark, and order fill rates.

- Data Health Metrics: Latency of market data feeds, missing data points, and alerts for anomalous data (e.g. sudden price spikes).

- System Health Metrics: CPU and memory usage of trading servers, network connectivity status, and API error rates.

- Model Drift Metrics: Tracking the statistical distribution of model inputs and outputs to detect deviations from the training data. A significant drift may indicate that the market regime has shifted and the model is no longer operating in its optimal environment.
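
One common way to track the distribution of a model input against its training data is the Population Stability Index (PSI). The sketch below is one possible implementation using equal-width bins taken from the training sample; the bin count, the small floor for empty bins, and the sample data are assumptions, and other drift statistics would serve equally well.

```python
import math

def population_stability_index(expected, actual, n_bins=10):
    """PSI between a training sample (expected) and a live sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins (assumed value).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_fractions(expected), bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic data: the live feature is the training feature shifted upward.
train_feature = [i / 100 for i in range(1000)]
live_feature = [x + 5 for x in train_feature]
psi_same = population_stability_index(train_feature, train_feature)
psi_shifted = population_stability_index(train_feature, live_feature)
```

A frequently quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but any alert threshold should be calibrated on the strategy’s own history rather than taken as given.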

Crucially, this system must be paired with a robust risk management overlay. This includes automated “kill switches” that can halt the strategy if certain loss limits are breached, as well as manual override capabilities that allow a human trader to intervene in exceptional market circumstances. This synthesis of automated execution and expert human oversight represents the pinnacle of a well-executed deployment.
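
A kill switch of the kind described here can be sketched as a small latching state machine: once a loss limit is breached or a human trips it, trading stays halted until someone deliberately resets it. The class name, limit values, and method names below are illustrative assumptions.

```python
class KillSwitch:
    """Halts trading on a loss-limit breach or a manual override."""

    def __init__(self, max_daily_loss, max_drawdown):
        self.max_daily_loss = max_daily_loss  # absolute currency amount
        self.max_drawdown = max_drawdown      # fraction of peak equity
        self.peak_equity = 0.0
        self.halted = False
        self.reason = None

    def trip(self, reason):
        """Latch the halted state; also serves as the manual override."""
        if not self.halted:
            self.halted, self.reason = True, reason

    def allow_trading(self, equity, daily_pnl):
        """Update state with the latest figures; return True if trading may continue."""
        if self.halted:
            return False  # latched: stays halted until a human resets it
        self.peak_equity = max(self.peak_equity, equity)
        drawdown = (self.peak_equity - equity) / self.peak_equity if self.peak_equity > 0 else 0.0
        if daily_pnl <= -self.max_daily_loss:
            self.trip("daily loss limit")
        elif drawdown >= self.max_drawdown:
            self.trip("drawdown limit")
        return not self.halted

# Made-up limits: 1,000 daily loss, 10% drawdown from peak.
ks = KillSwitch(max_daily_loss=1_000.0, max_drawdown=0.10)
checks = [
    ks.allow_trading(100_000.0, 0.0),
    ks.allow_trading(99_500.0, -500.0),
    ks.allow_trading(98_900.0, -1_100.0),  # breaches the daily loss limit
    ks.allow_trading(100_000.0, 0.0),      # still halted after recovery
]
```

The switch deliberately has no automatic reset. Resuming after a halt is left as a human decision, in line with the manual oversight described above.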

Reflection

The System as the Strategy

The successful transition of a machine learning model from a research environment to the live arena of crypto derivatives trading is ultimately a test of systemic integrity. It reveals that the predictive power of the model itself is only one part of a much larger equation. The true differentiator is the operational framework within which the model exists: the robust data pipelines, the rigorous simulation environments, the low-latency infrastructure, and the vigilant monitoring systems.

Viewing these components not as peripheral support but as the core of the strategy itself is the essential shift in perspective. The knowledge gained from this process is a critical input into the continuous evolution of an institution’s trading intelligence, where each deployment, successful or not, refines the operational playbook for the next generation of strategies.
