Concept

The Foundational Dissonance in Enterprise Data

The primary challenge in mapping data fields between Customer Relationship Management (CRM), Enterprise Resource Planning (ERP), and Request for Proposal (RFP) software is not a technical limitation of connectors or APIs. It is a fundamental conflict of philosophies embedded in the very architecture of each system. These platforms are not simply databases; they are purpose-built operational systems, each with a distinct worldview. A CRM is engineered to model the fluid, dynamic, and often narrative-driven nature of human relationships.

An ERP system is designed to impose rigid, transactional order upon finite company resources. An RFP platform serves a procedural and comparative function, structuring the complex process of procurement and vendor selection. The attempt to force a direct, one-to-one mapping between their data fields is akin to demanding a direct translation between three different languages, each with its own unique grammar, context, and idiom. The difficulty arises because you are not just moving data; you are attempting to reconcile three disparate conceptual models of the business itself.

A CRM’s “Account” entity, for example, represents a complex web of interactions, history, contacts, and potential future value. It is a repository of institutional memory. The corresponding “Customer” entity in an ERP, conversely, is primarily a transactional record: a collection of addresses, credit terms, and order histories. It is a ledger.

The “Company” field within an RFP system is different yet again; it is a static, formal identifier for a legal entity participating in a structured evaluation process. Mapping these three fields under a single “Company Name” umbrella without a sophisticated translation layer inevitably leads to data degradation. The richness of the CRM’s relationship context is lost, the ERP’s transactional precision is diluted, and the RFP’s procedural formality is compromised. This fundamental dissonance, this “semantic impedance mismatch,” is the root of all subsequent mapping challenges.

Semantic Heterogeneity: The Core Systemic Conflict

The issue of data model heterogeneity is central to this integration challenge. Each system employs a schema that reflects its core function, leading to significant differences in how data is structured, defined, and related. A CRM might use a flexible, object-oriented model to capture the many-to-many relationships between contacts, opportunities, and accounts. An ERP relies on a highly structured relational model to ensure the integrity of financial and inventory transactions.

An RFP system often uses a document-centric or project-based model to manage proposal content, vendor responses, and scoring criteria. These are not arbitrary design choices; they are necessary for each system to perform its function effectively.

This heterogeneity manifests in several critical ways:

- Structural Differences: One system might store an address as a single text block, while another breaks it into five distinct, validated fields (Street, City, State, Postal Code, Country). A simple mapping is impossible without a transformation protocol that can parse the single block or concatenate the five fields, with inherent risks of error in either direction.

- Semantic Variances: The same term carries vastly different meanings across systems. “Status” in a CRM could be “Lead,” “Qualified,” or “Nurturing.” In an ERP, “Status” for an order could be “Pending,” “Shipped,” or “Invoiced.” In an RFP, a “Status” might be “Draft,” “Issued,” or “Awarded.” Mapping these requires a clear understanding of the business process and the creation of a semantic bridge: a set of rules that translates the meaning from one context to another.

- Data Granularity: An ERP system might track product sales with extreme granularity, down to the individual SKU, warehouse location, and shipment batch. A CRM, focused on the sales opportunity, might only track the total deal value and the product family. Forcing the ERP’s granular data into the CRM’s high-level structure, or vice versa, results in a loss of critical information or the creation of meaningless, aggregated data points.
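The structural and semantic points above can be sketched in a few lines of Python. The status values are the ones used in the list; the canonical stage names, the function names, and the comma-separated address convention are assumptions made purely for illustration and do not come from any particular product.

```python
from typing import Optional

# Hypothetical semantic bridge: each (system, status) pair maps to a shared
# lifecycle stage. The stage names on the right are illustrative only.
STATUS_BRIDGE = {
    ("crm", "Lead"): "pre_sale",
    ("crm", "Qualified"): "pre_sale",
    ("crm", "Nurturing"): "pre_sale",
    ("erp", "Pending"): "order_open",
    ("erp", "Shipped"): "order_fulfilled",
    ("erp", "Invoiced"): "order_billed",
    ("rfp", "Draft"): "sourcing_prep",
    ("rfp", "Issued"): "sourcing_open",
    ("rfp", "Awarded"): "sourcing_closed",
}


def translate_status(system: str, status: str) -> Optional[str]:
    """Return the canonical stage, or None when no rule exists."""
    return STATUS_BRIDGE.get((system.lower(), status))


def join_address(street: str, city: str, state: str, postal: str, country: str) -> str:
    """Five fields to one block: straightforward concatenation."""
    return ", ".join([street, city, state, postal, country])


def split_address(block: str) -> Optional[dict]:
    """One block to five fields: only attempted when exactly five parts are found."""
    parts = [p.strip() for p in block.split(",")]
    if len(parts) != 5:
        return None  # ambiguous input; route to manual review instead of guessing
    keys = ["street", "city", "state", "postal_code", "country"]
    return dict(zip(keys, parts))
```

Returning `None` for an unknown status or an ambiguous address keeps the problem visible to a reviewer. A street line that itself contains a comma is enough to make the split unreliable, which is the asymmetry of risk the first bullet describes.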

Addressing these challenges requires moving beyond simple field-to-field connections and toward a holistic data strategy. It necessitates the development of a common language, or a “canonical data model,” that can act as a universal translator, preserving the meaning and integrity of data as it flows between these functionally disparate systems. Without this architectural foresight, any integration effort is doomed to create a brittle, unreliable data infrastructure that undermines the very purpose of the systems it seeks to connect.

Strategy

Establishing a Unified Data Governance Framework

A successful data mapping initiative between CRM, ERP, and RFP systems depends on a robust governance framework that precedes any technical implementation. This framework acts as the constitutional authority for all data-related decisions, ensuring consistency, quality, and semantic integrity across the enterprise. The primary objective is to create a single source of truth, not by forcing one system’s schema upon the others, but by establishing a consensus on what data means and how it should be managed throughout its lifecycle.

This involves creating a cross-functional data governance council, composed of stakeholders from sales (CRM), finance and operations (ERP), and procurement (RFP), as well as IT. This council is tasked with the critical responsibility of defining and maintaining the enterprise’s data dictionary and business glossary.

A unified data governance framework is the essential strategic foundation for ensuring data integrity across disparate enterprise systems.

The business glossary provides clear, unambiguous definitions for key business terms, resolving semantic discrepancies before they become technical problems. For instance, the council would formally define what constitutes a “Customer.” Is it a legal entity that has signed a contract? A prospect with an active opportunity in the CRM? Or any organization that has ever been issued an invoice?

The answer, which must be agreed upon by all stakeholders, will dictate the logic of the integration. The data dictionary, in turn, provides the technical specifications for these business terms, detailing the data type, format, validation rules, and source system for each critical data element. This strategic alignment is the bedrock upon which all successful integration architectures are built.
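As a rough illustration of how a data dictionary entry might carry the attributes just listed (data type, format, validation rules, source system), consider the sketch below. The class, its field names, and the tax-identifier pattern are all hypothetical; a real council would agree on its own rules.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DictionaryEntry:
    """One data dictionary entry. All attribute names here are illustrative."""
    business_term: str              # term as defined in the business glossary
    data_type: type                 # expected Python type of the value
    source_system: str              # system of record, e.g. "ERP"
    pattern: Optional[str] = None   # optional regex a string value must fully match
    required: bool = True

    def validate(self, value) -> list:
        """Return a list of human-readable violations (empty means valid)."""
        if value is None:
            return [f"{self.business_term}: value is required"] if self.required else []
        if not isinstance(value, self.data_type):
            return [f"{self.business_term}: expected {self.data_type.__name__}"]
        if self.pattern and isinstance(value, str) and not re.fullmatch(self.pattern, value):
            return [f"{self.business_term}: does not match {self.pattern}"]
        return []


# Hypothetical entry: the format rule is an assumption, not a real tax-ID standard.
TAX_ID = DictionaryEntry("Tax Identifier", str, "ERP", pattern=r"[A-Z]{2}[0-9A-Z]{8,12}")
```

Keeping the rule next to the business term means the integration layer and the governance council read from the same definition.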

Integration Patterns: A Comparative Analysis

Once a governance framework is in place, the organization can select an appropriate architectural pattern for the integration itself. There are several established models, each with distinct advantages and complexities. The choice of pattern is a strategic decision that depends on the organization’s scale, technical maturity, and long-term business objectives.

The three primary patterns to consider are:

- Point-to-Point Integration: This is the most direct approach, where custom connections are built between each pair of systems. A connection is made between the CRM and ERP, another between the ERP and RFP system, and potentially a third between the CRM and RFP system. While seemingly simple for a small number of systems, this pattern quickly becomes unmanageable as the organization grows. Each new system requires a new set of custom integrations, leading to a brittle and complex web of connections often referred to as “spaghetti architecture.” Maintenance is a significant challenge, as a change in one system’s API can break multiple integrations.

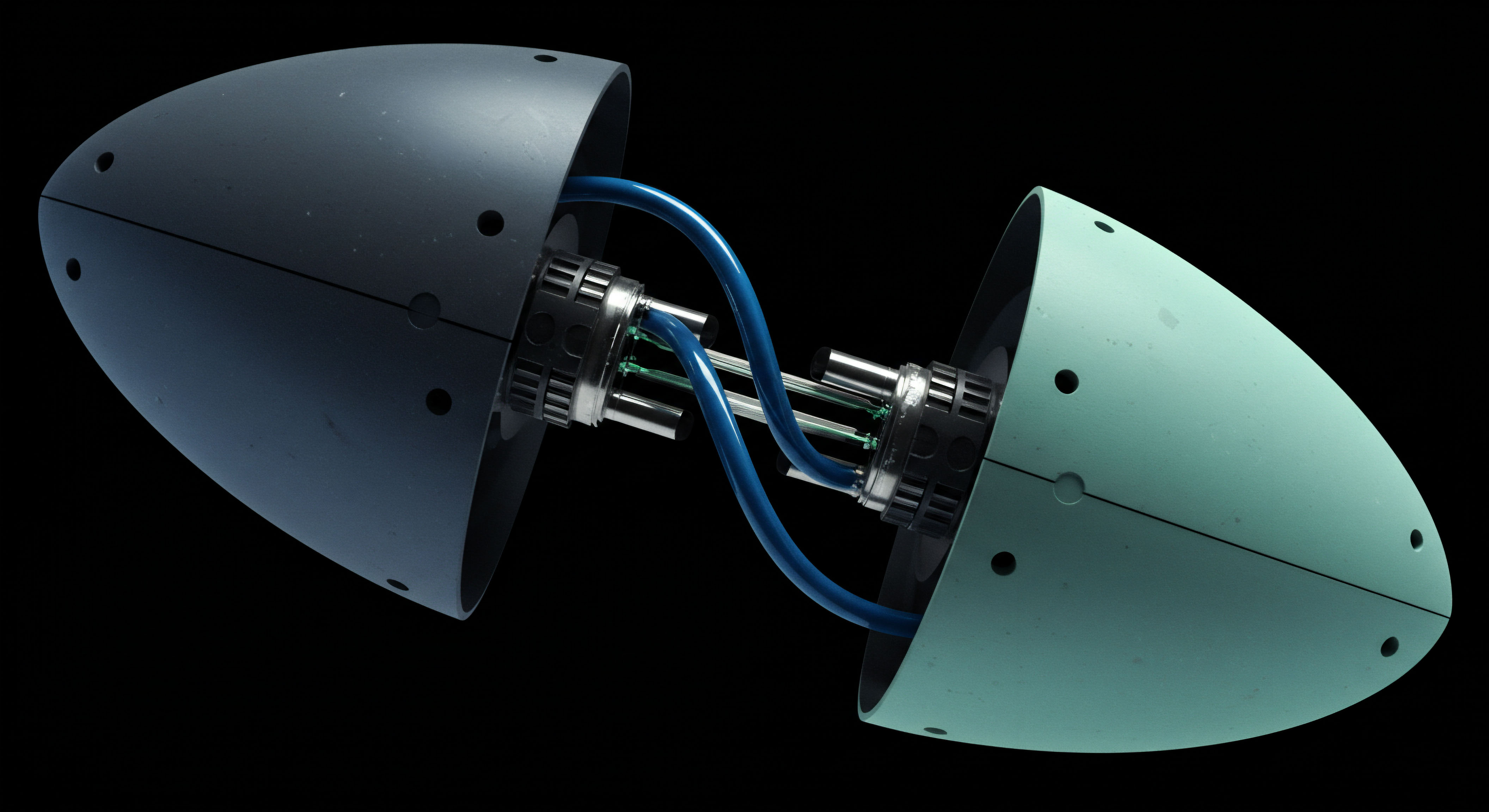

- Hub-and-Spoke Model (Middleware-Centric): This model introduces a central integration hub or middleware platform that acts as a translator and traffic controller. Each system (CRM, ERP, RFP) connects to the central hub, not directly to each other. The hub is responsible for all data transformation, routing, and mapping logic. This approach dramatically simplifies the architecture, as each system only needs one connection, to the hub. Adding a new system is a matter of connecting it to the hub, without affecting the existing connections. This model promotes reusability and centralized management of integration logic, making it far more scalable and maintainable than the point-to-point approach.

- Canonical Data Model (CDM) with an Enterprise Service Bus (ESB): This is the most sophisticated and robust pattern. It extends the hub-and-spoke model by introducing a standardized, system-agnostic data model, the Canonical Data Model. When the CRM sends data, the integration hub translates it from the CRM’s native format into the canonical format. The data is then transported across the Enterprise Service Bus and delivered to the ERP, where another connector translates it from the canonical format into the ERP’s native format. The CDM ensures that all systems are speaking the same “language” at the core level, completely decoupling them from one another. This provides maximum flexibility and scalability, as systems can be added or replaced with minimal impact on the rest of the architecture, so long as they can communicate with the CDM.
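The translate-in, translate-out flow of the canonical pattern can be sketched as a pair of adapters around a shared record type. This is a minimal illustration, not a description of any ESB product: the `Organization` class, the ERP and RFP field names (`CUST_NO`, `CUST_NAME`, `ACTIVE_FLAG`, `company`, `vendor_ref`), and the upper-casing rule are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Organization:
    """Hypothetical canonical record shared by every connector."""
    organization_id: str
    name: str
    status: str


def crm_to_canonical(account: dict) -> Organization:
    """Inbound adapter: CRM-native field names to the canonical shape."""
    return Organization(
        organization_id=account["AccountId"],
        name=account["AccountName"],
        status=account.get("Status", "Unknown"),
    )


def canonical_to_erp(org: Organization) -> dict:
    """Outbound adapter: canonical shape to (assumed) ERP-native field names."""
    return {
        "CUST_NO": org.organization_id,
        "CUST_NAME": org.name.upper(),  # assumed ERP convention, for illustration
        "ACTIVE_FLAG": "Y" if org.status == "Active" else "N",
    }


def canonical_to_rfp(org: Organization) -> dict:
    """A second outbound adapter, added without touching the CRM or ERP code."""
    return {"company": org.name, "vendor_ref": org.organization_id}


crm_account = {"AccountId": "001A", "AccountName": "Acme Ltd", "Status": "Active"}
org = crm_to_canonical(crm_account)
erp_row = canonical_to_erp(org)
rfp_row = canonical_to_rfp(org)
```

Because each adapter only knows its own system and the canonical record, replacing the ERP would mean rewriting `canonical_to_erp` alone.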

Strategic Trade-Offs in Integration Architecture

The selection of an integration pattern involves a careful evaluation of trade-offs between initial effort, long-term scalability, and flexibility. The following table provides a comparative analysis to guide this strategic decision.

| Integration Pattern | Initial Complexity | Scalability | Maintenance Overhead | Best Suited For |
|---|---|---|---|---|
| Point-to-Point | Low (for 2-3 systems) | Low | High (connection count grows quadratically with the number of systems) | Small organizations with a limited number of static systems and no immediate growth plans. |
| Hub-and-Spoke | Medium | High | Medium (centralized logic) | Growing organizations that require a manageable and scalable way to connect multiple applications. |
| Canonical Data Model (CDM) | High | Very High | Low (decoupled systems) | Large enterprises with a complex and evolving application landscape that demand maximum flexibility and long-term architectural stability. |
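The maintenance gap between the patterns follows from simple counting. With n systems, connecting every pair directly requires n(n-1)/2 integrations, while a hub requires n. The short sketch below prints both counts; the function names are illustrative, and it counts one bidirectional integration per pair (counting each direction separately would double the point-to-point figure).

```python
def point_to_point_links(n: int) -> int:
    """Every pair of systems needs its own integration: n choose 2."""
    return n * (n - 1) // 2


def hub_links(n: int) -> int:
    """Each system connects once, to the hub."""
    return n


for n in (3, 5, 10, 20):
    print(f"{n:>2} systems: point-to-point={point_to_point_links(n):>3}, hub={hub_links(n):>2}")
```

For the three systems discussed here the two counts are equal, which is why point-to-point looks harmless at first; at ten systems the pairwise approach needs 45 integrations against 10 for the hub.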

Execution

The Operational Playbook for Data Field Mapping

Executing a data mapping project requires a disciplined, phased approach that translates strategic goals into concrete operational tasks. This playbook outlines a systematic process for achieving reliable and meaningful data integration between CRM, ERP, and RFP systems, assuming a Hub-and-Spoke or Canonical Data Model architecture has been chosen.

Phase 1: Discovery and Documentation

The initial phase is dedicated to building a comprehensive understanding of the data landscape across all three systems. This is a forensic exercise that requires meticulous attention to detail.

- Data Object Identification: For each system (CRM, ERP, RFP), identify and document all relevant data objects. This includes standard objects like “Account,” “Contact,” “Product,” and “Order,” as well as any custom objects that are critical to the business process.

- Field-Level Analysis: For each identified data object, conduct a thorough analysis of every field. This analysis must be captured in a shared repository, such as a master spreadsheet or a dedicated data governance tool. The following attributes must be documented for every single field:

  - Field Name (System): The technical name of the field in the source system (e.g. cust_acct_name).

  - Field Label (UI): The user-facing label for the field (e.g. “Customer Account Name”).

  - Data Type: The technical data type (e.g. String, Integer, Datetime, Boolean).

  - Length/Precision: Maximum length for text fields or precision for numeric fields.

  - Constraints: Document any constraints, such as “Required,” “Unique,” or “Read-Only.”

  - Picklist Values: For any fields with a predefined set of values, document the complete list of options.

  - Business Purpose: A clear, concise description of what the field represents and how it is used in the context of its source system. This is a critical step that often gets overlooked.

- Relationship Mapping: Document the relationships between data objects within each system. Identify primary and foreign keys, and map out how objects like “Contacts” relate to “Accounts” or how “Order Lines” relate to “Products.”
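Once the field inventory exists in a structured form, some mapping risks can be flagged mechanically before any design work starts. The sketch below assumes one record per documented field, with attributes mirroring the list above; the class, the checks, and the sample lengths are hypothetical and would need tailoring to the actual systems.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FieldSpec:
    """One row of the field inventory (attribute names are illustrative)."""
    system: str
    name: str
    label: str
    data_type: str
    max_length: Optional[int] = None
    required: bool = False
    picklist: list = field(default_factory=list)
    business_purpose: str = ""


def compatibility_issues(source: FieldSpec, target: FieldSpec) -> list:
    """List the risks of copying `source` into `target` without transformation."""
    issues = []
    if source.data_type != target.data_type:
        issues.append(f"type mismatch: {source.data_type} -> {target.data_type}")
    if source.max_length and target.max_length and source.max_length > target.max_length:
        issues.append(f"possible truncation: {source.max_length} -> {target.max_length}")
    if target.required and not source.required:
        issues.append("target is required but source may be empty")
    unmapped = sorted(set(source.picklist) - set(target.picklist))
    if target.picklist and unmapped:
        issues.append(f"picklist values without a target: {unmapped}")
    return issues


# Sample field definitions; the lengths are invented for the example.
crm_name = FieldSpec("CRM", "AccountName", "Account Name", "String", max_length=255)
erp_name = FieldSpec("ERP", "cust_acct_name", "Customer Account Name", "String",
                     max_length=80, required=True)
issues = compatibility_issues(crm_name, erp_name)
```

A check like this only covers the technical attributes. The business purpose of each field still has to be compared by people who know both systems.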

Phase 2: Design of the Canonical Model and Mapping Rules

With a complete inventory of the source systems, the focus shifts to designing the target state. If a full Canonical Data Model is being implemented, this phase involves defining the standard, system-agnostic data objects and fields. If a simpler Hub-and-Spoke model is used, this phase focuses on defining the mapping rules within the middleware.

A well-designed canonical model acts as the universal translator, ensuring semantic consistency across all integrated platforms.

The core output of this phase is the Data Mapping Specification Document. This document is the blueprint for the entire integration. It explicitly defines how each field in a source system corresponds to a field in the target system or canonical model.

Quantitative Modeling: A Data Mapping Specification

The Data Mapping Specification is a granular, quantitative model that leaves no room for ambiguity. It details the precise transformation logic for every data element that will be synchronized between the systems. The following table provides a simplified example for mapping a “Customer” entity from a CRM and an ERP into a Canonical “Organization” Model.

| Canonical Field (Organization) | Source System | Source Field | Transformation Logic / Rules | Notes |
|---|---|---|---|---|
| OrganizationID | CRM | AccountId | Direct 1:1 mapping. This will be the master identifier. | The CRM is designated as the master system for Organization identity. |
| OrganizationName | CRM | AccountName | Direct 1:1 mapping. | – |
| TaxIdentifier | ERP | VAT_Number | Direct 1:1 mapping. | This field only exists in the ERP and will be synchronized back to a custom field in the CRM if required. |
| Status | CRM | Account_Status__c | CASE WHEN Account_Status__c = 'Active Customer' THEN 'Active' WHEN Account_Status__c = 'Former Customer' THEN 'Inactive' ELSE 'Prospect' END | Semantic mapping is required to align CRM statuses with the canonical model. |
| Status | ERP | Customer_On_Hold | IF Customer_On_Hold = TRUE THEN 'Suspended' ELSE NULL | ERP status is only mapped if it represents a credit hold, which overrides the CRM status. Priority logic must be applied. |
| FullAddress | CRM | BillingStreet, BillingCity, BillingState, BillingPostalCode | CONCAT(BillingStreet, ', ', BillingCity, ', ', BillingState, ' ', BillingPostalCode) | Concatenation is required to create a single address string from component fields. |
| AnnualRevenue | ERP | YTD_Sales | Direct 1:1 mapping. | ERP is the master for all financial data. Data flows one way to the CRM for informational purposes. Because YTD_Sales covers only the current year to date, the value approximates annual revenue and should be labeled accordingly. |
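The rules in this table translate fairly directly into code. The following Python sketch implements the Status, FullAddress, and direct-mapping rows, including the priority rule under which an ERP credit hold overrides the CRM status. The field names come from the table; the function names and the sample records are invented for illustration, and a real middleware platform would express the same logic in its own mapping language.

```python
from typing import Optional


def crm_status(account_status: Optional[str]) -> str:
    """CASE rule from the Status (CRM) row."""
    if account_status == "Active Customer":
        return "Active"
    if account_status == "Former Customer":
        return "Inactive"
    return "Prospect"


def erp_status(customer_on_hold: Optional[bool]) -> Optional[str]:
    """IF rule from the Status (ERP) row: only a credit hold yields a value."""
    return "Suspended" if customer_on_hold is True else None


def full_address(crm: dict) -> str:
    """CONCAT rule from the FullAddress row."""
    return (f"{crm['BillingStreet']}, {crm['BillingCity']}, "
            f"{crm['BillingState']} {crm['BillingPostalCode']}")


def to_organization(crm: dict, erp: dict) -> dict:
    """Build the canonical Organization record from one CRM and one ERP record."""
    return {
        "OrganizationID": crm["AccountId"],
        "OrganizationName": crm["AccountName"],
        "TaxIdentifier": erp.get("VAT_Number"),
        # Priority logic: an ERP credit hold overrides the CRM-derived status.
        "Status": erp_status(erp.get("Customer_On_Hold"))
                  or crm_status(crm.get("Account_Status__c")),
        "FullAddress": full_address(crm),
        "AnnualRevenue": erp.get("YTD_Sales"),
    }


# Sample records, invented for the example.
crm_record = {
    "AccountId": "001A", "AccountName": "Acme Ltd",
    "Account_Status__c": "Active Customer",
    "BillingStreet": "1 Main St", "BillingCity": "Springfield",
    "BillingState": "IL", "BillingPostalCode": "62701",
}
erp_record = {"VAT_Number": "DE123456789", "Customer_On_Hold": True, "YTD_Sales": 125000.0}
organization = to_organization(crm_record, erp_record)
```

With the sample data the CRM says “Active Customer” but the ERP hold wins, so the canonical status is “Suspended”. As written, `full_address` raises a `KeyError` when a billing component is missing; whether to fail, skip, or substitute a default in that case is a decision the specification should state explicitly.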

Phase 3: Implementation and Testing

This is the development phase where the mapping logic defined in the specification document is implemented in the chosen integration platform. Rigorous testing is paramount.

- Unit Testing: Test each field mapping in isolation to ensure the transformation logic is working correctly.

- System Integration Testing (SIT): Test the end-to-end data flow between two systems (e.g. CRM to ERP) to ensure records are created and updated as expected.

- User Acceptance Testing (UAT): Business users from each department must validate the integrated data in a sandbox environment. They will follow their standard business processes and confirm that the data is accurate, timely, and useful. This is the final quality gate before deployment.
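At the unit-testing level, each mapping rule can be exercised on its own with Python's standard `unittest` module. The example below tests a CRM status rule of the kind defined in a mapping specification; the function `map_crm_status` and the helper `run_tests` are hypothetical names, and the test values are chosen to show the fallback branch as well as the happy path.

```python
import unittest


def map_crm_status(account_status):
    """Function under test: a CRM status rule from the mapping specification."""
    if account_status == "Active Customer":
        return "Active"
    if account_status == "Former Customer":
        return "Inactive"
    return "Prospect"


class CrmStatusMappingTest(unittest.TestCase):
    def test_known_values(self):
        self.assertEqual(map_crm_status("Active Customer"), "Active")
        self.assertEqual(map_crm_status("Former Customer"), "Inactive")

    def test_fallback_covers_unknown_and_missing_values(self):
        # Unknown, empty, missing, and differently cased inputs all fall through.
        for value in ("Lead", "", None, "active customer"):
            with self.subTest(value=value):
                self.assertEqual(map_crm_status(value), "Prospect")


def run_tests() -> unittest.TestResult:
    """Run the suite programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CrmStatusMappingTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

The lower-case input in the second test documents that the rule is case-sensitive. Whether that is the intended behavior is exactly the kind of question to settle before UAT.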

Phase 4: Deployment and Monitoring

Following a successful UAT, the integration is deployed to the production environment. The project does not end here. Continuous monitoring is essential to ensure the long-term health of the data ecosystem.

Ongoing monitoring of data synchronization jobs and error logs is critical for maintaining the long-term integrity of the integrated system.

An effective monitoring strategy includes automated alerts for integration failures, regular data quality audits to catch inconsistencies, and a clearly defined process for managing and resolving data errors. The data governance council should review data quality metrics on a regular basis to identify trends and proactively address emerging issues. This operational discipline ensures that the integrated system remains a reliable and valuable asset for the organization.
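A data quality audit of the kind described here can start as a simple reconciliation between two systems. The sketch below compares records keyed by a shared identifier, reports missing and mismatched records, and applies an alert threshold. The function names, the 2% threshold, and the sample data are assumptions for illustration only.

```python
def audit_sync(source_rows: dict, target_rows: dict, fields: list) -> dict:
    """Compare records keyed by a shared ID and summarize the differences."""
    missing = sorted(set(source_rows) - set(target_rows))
    mismatched = sorted(
        key for key in set(source_rows) & set(target_rows)
        if any(source_rows[key].get(f) != target_rows[key].get(f) for f in fields)
    )
    total = len(source_rows)
    error_rate = (len(missing) + len(mismatched)) / total if total else 0.0
    return {"missing": missing, "mismatched": mismatched, "error_rate": error_rate}


def should_alert(report: dict, threshold: float = 0.02) -> bool:
    """Hypothetical alert rule: fire when the error rate exceeds the threshold."""
    return report["error_rate"] > threshold


# Sample data, invented for the example.
crm = {"001": {"name": "Acme Ltd"}, "002": {"name": "Globex"}, "003": {"name": "Initech"}}
erp = {"001": {"name": "Acme Ltd"}, "002": {"name": "Globex Corp"}}
report = audit_sync(crm, erp, ["name"])
```

Tracking the error rate over time, rather than only alerting on single failures, gives the governance council the trend data it needs for its regular reviews.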

Reflection

From Data Mapping to Systemic Intelligence

The process of mapping data fields between CRM, ERP, and RFP systems, when approached with architectural rigor, transcends a mere technical exercise. It becomes a catalyst for profound organizational introspection. The effort forces a clear and unified definition of the enterprise’s core concepts: what is a customer, what constitutes a product, how is value defined and tracked? Answering these questions builds a foundation of semantic consistency that is the prerequisite for true systemic intelligence.

The resulting integrated ecosystem is not just a set of connected applications. It is a cohesive operational fabric where information flows with context and integrity. A sales insight from the CRM is enriched with financial reality from the ERP, which in turn informs the strategic sourcing decisions within the RFP platform.

The operational playbook and quantitative models discussed are the tools for building this system, but the ultimate objective is to create an environment where data supports, rather than obstructs, coherent and intelligent decision-making at every level of the organization. The final architecture is a reflection of the organization’s commitment to clarity and operational excellence.
