Concept

You are asking about the obstacles to implementing a real-time consolidated tape for equities. The inquiry itself presupposes a certain model of the financial world, one in which information can be centralized, synchronized, and disseminated with perfect fidelity and timeliness. This is the clean, logical world of system diagrams. The lived reality of market structure, however, is a far more complex system, governed by physics, economics, and deeply entrenched competitive interests.

The primary obstacles are not temporary roadblocks on a clear path; they are fundamental, structural attributes of the market itself. To truly grasp the challenge, one must first dispense with the notion of a single, objective “real-time.”

The core architectural challenge stems from a physical reality that no amount of regulatory will or technological investment can circumvent, namely the finite speed of light. Data cannot move instantaneously. It takes time to travel. A consolidated tape, by its very definition, is an aggregator.

It must collect data from multiple, geographically dispersed trading venues. The system must wait for the data packet from the most distant venue to arrive before it can create a truly consolidated view of the market. In that interval, however small, the market has already moved. The consolidated quote is, by the laws of physics, an echo.

It is a historical record of a moment that has already passed. This inherent latency is the first and most immutable obstacle. It means the consolidated tape will always be slower than the proprietary data feeds offered directly by the exchanges. This creates a permanent, two-tier system of information, where participants who pay for direct, low-latency feeds operate on a different temporal plane from those who consume the consolidated view. This is a foundational constraint that shapes every subsequent challenge.

A consolidated tape is architecturally designed to be a lagging indicator of market activity.
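
The size of this physical floor can be estimated with simple arithmetic. The sketch below is illustrative only: it assumes a straight fibre run and a refractive index of about 1.47 for the fibre, and the distances are hypothetical rather than taken from any real venue layout. Under those assumptions each kilometre of fibre adds roughly 4.9 microseconds of one-way delay.

```python
# Speed of light in vacuum, in kilometres per second.
C_VACUUM_KM_PER_S = 299_792.458
# Assumed refractive index of optical fibre (an approximation, not a measured value).
FIBRE_REFRACTIVE_INDEX = 1.47


def one_way_delay_us(distance_km: float,
                     refractive_index: float = FIBRE_REFRACTIVE_INDEX) -> float:
    """Minimum one-way propagation delay in microseconds over a straight fibre run."""
    speed_km_per_s = C_VACUUM_KM_PER_S / refractive_index
    return distance_km / speed_km_per_s * 1_000_000


if __name__ == "__main__":
    # Hypothetical distances between a venue and the consolidator.
    for label, km in [("same campus", 1), ("nearby city", 50), ("distant city", 600)]:
        print(f"{label:>12}: {one_way_delay_us(km):8.1f} µs")
```

Real routes are longer than the straight-line distance and add switching delay, so these figures are lower bounds rather than predictions.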

This physical limitation gives rise to the second major obstacle, the political economy of market data. Exchanges are not benevolent utilities; they are for-profit enterprises, and the sale of market data is a significant and high-margin revenue stream. Their business model is predicated on the value of their proprietary, low-latency data feeds. A comprehensive, low-cost, real-time consolidated tape is a direct existential threat to this revenue model.

Consequently, exchanges have a powerful incentive to resist its creation, not necessarily through overt opposition, but through strategic complexities in data licensing, access fees, and reporting standards. They will argue, with some justification, that they have invested heavily in the technology to generate this data and deserve to be compensated for it. The debate becomes one of public good versus private enterprise. Is market data a utility that should be widely and cheaply available to ensure a fair and transparent market for all? Or is it a privately produced good whose price should be determined by market forces? This conflict of interest is the central political battleground upon which the fight for a consolidated tape is waged.

The third set of obstacles is logical and technical, born from the fragmented nature of the markets the tape seeks to consolidate. There is no universal standard for how market data is formatted, flagged, or disseminated. Each trading venue has its own proprietary symbology, its own set of codes to denote different trade conditions, and its own protocols for handling corporate actions. The task of building a consolidated tape provider (CTP) involves the immense data engineering challenge of ingesting dozens of disparate feeds, normalizing them into a single, coherent format, and resolving ambiguities and contradictions in real time.

What happens when one venue reports a trade with a specific flag, and another venue uses the same flag for a different meaning? How are trades sequenced when they arrive with timestamps from clocks that are not perfectly synchronized? Solving these issues requires a massive investment in technology and the creation of a robust governance framework to enforce data quality and standardization upon all participants. It is a task of forging a common language from a babel of competing dialects, all while the conversation is happening at microsecond speeds. These three pillars (the immutable laws of physics, the intractable conflict of economic interests, and the sheer complexity of data harmonization) constitute the primary, systemic obstacles to implementing a truly real-time consolidated tape for equities.

Strategy

Navigating the implementation of a consolidated tape (CT) requires a strategic framework that acknowledges the systemic nature of its obstacles. A successful strategy is one that treats the problem not as a simple technological build, but as a complex negotiation between physics, economics, and governance. The core of this strategy involves a precise calibration of expectations and a clear-eyed understanding of the trade-offs involved. The objective is to create a system that is “good enough” to achieve its primary goals of enhancing transparency and accessibility for the majority of investors, while accepting its inherent limitations for high-frequency trading applications.

The Political Economy of Market Data

The most contentious strategic battleground is the economic model of the tape itself. The value of market data is perceived differently by its various stakeholders, and any viable strategy must address these conflicting viewpoints. Exchanges, as the primary producers of quote and trade data, view it as a proprietary product, the result of significant investment in infrastructure and technology. Their strategy is to maximize the revenue generated from this asset.

Conversely, asset managers, institutional investors, and retail brokers view market data as a fundamental input, a utility required for participation in the market. Their strategic goal is to minimize this cost and ensure fair access. A consolidated tape sits directly at the nexus of this conflict.

A successful strategy must therefore focus on creating a new equilibrium. This involves designing a commercial model for the Consolidated Tape Provider (CTP) that is both sustainable for the operator and palatable to both data producers and consumers. Several models have been proposed:

- Utility Model ▴ Under this model, the CTP operates on a cost-recovery basis, with fees set only to cover the operational expenses of collecting, consolidating, and disseminating the data. This is the model most favored by data consumers, as it promises the lowest cost. However, it is fiercely resisted by exchanges, who see it as a forced commoditization of their valuable data.

- Revenue-Sharing Model ▴ This model attempts to create a compromise. The CTP charges a commercial rate for the consolidated feed and then shares a portion of that revenue back to the contributing trading venues. The strategic challenge here lies in devising a fair and transparent formula for revenue allocation. Should it be based on the volume of trades, the number of quotes, or some other metric of “value”? This approach attempts to align the incentives of the exchanges with the success of the consolidated tape.

- Tiered Access Model ▴ This strategy acknowledges the different use cases for the data. It would offer a low-cost, delayed feed for retail investors and general market monitoring, and a higher-cost, “as-real-time-as-possible” feed for professional users. This allows the CTP to capture more value from sophisticated users while still achieving the goal of broad, affordable access.
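
The allocation question raised under the revenue-sharing model can be made concrete with a small sketch. The text does not prescribe a formula, so everything here is an assumption for illustration: the function name `allocate_revenue`, the venue names, and the idea of blending each venue's share of traded volume with its share of quotes using a tunable weight.

```python
def allocate_revenue(pool: float,
                     volumes: dict[str, float],
                     quotes: dict[str, float],
                     volume_weight: float = 0.5) -> dict[str, float]:
    """Split a revenue pool across venues by a blend of volume share and quote share.

    volume_weight is a hypothetical policy knob: 1.0 rewards traded volume only,
    0.0 rewards quoting activity only.
    """
    total_volume = sum(volumes.values())
    total_quotes = sum(quotes.values())
    payouts = {}
    for venue in volumes:
        volume_share = volumes[venue] / total_volume
        quote_share = quotes[venue] / total_quotes
        blended = volume_weight * volume_share + (1 - volume_weight) * quote_share
        payouts[venue] = pool * blended
    return payouts


if __name__ == "__main__":
    # Hypothetical inputs: one large exchange and one smaller venue.
    payouts = allocate_revenue(
        pool=100.0,
        volumes={"BIG_EXCHANGE": 3.0, "SMALL_MTF": 1.0},
        quotes={"BIG_EXCHANGE": 1.0, "SMALL_MTF": 1.0},
    )
    for venue, amount in payouts.items():
        print(venue, round(amount, 2))
```

Shifting `volume_weight` toward 1.0 favors the venues with the most executed volume, while shifting it toward 0.0 favors venues that contribute quotes, which is one way to see why the choice of metric is itself a negotiating position.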

The table below outlines the strategic positions of key market participants concerning the implementation of a consolidated tape, providing a framework for understanding the complex negotiations involved.

| Market Participant | Primary Objective | Preferred CT Commercial Model | Key Strategic Concern |
|---|---|---|---|
| Incumbent Exchanges | Protect and maximize proprietary data revenue | Revenue-Sharing (with favorable allocation) | Erosion of high-margin data products |
| Large Asset Managers | Reduce data costs and simplify data acquisition | Utility Model | High data fees impacting operational alpha |
| High-Frequency Trading Firms | Maintain information advantage via low-latency feeds | Indifferent (will use direct feeds regardless) | Regulatory mandates forcing use of slower CT data |
| Retail Brokerages | Provide clients with affordable, comprehensive market view | Utility Model or low-cost tier | Complexity and cost of integrating multiple direct feeds |
| Regulators | Enhance market transparency and fairness | Model that ensures widest possible dissemination | Market fragmentation and information asymmetry |

What Is the True Meaning of Real Time?

The strategic approach to the latency obstacle requires a fundamental shift in language and expectations. The term “real-time” is a misnomer in the context of a consolidated system. A more accurate and strategically sound term is “synchronized.” The goal is to provide a comprehensive snapshot of the market that is internally consistent and reliably sequenced, even if it is delivered with a small delay. The strategy here is to focus on the integrity and quality of the data, rather than an unwinnable race for zero latency.

The strategic focus must shift from achieving impossible speed to delivering impeccable data integrity.

This involves several key technical and policy decisions:

- Time-Stamping and Synchronization ▴ A critical strategic decision is to mandate a high-precision time-stamping protocol, such as Precision Time Protocol (PTP), across all contributing venues. This would ensure that all quotes and trades are timestamped at their source with a high degree of accuracy, allowing the CTP to sequence events correctly, even if they arrive out of order.

- Defining the “Consolidated BBO” ▴ The strategy must clearly define what the consolidated best bid and offer (BBO) represents. It does not represent the absolute, instantaneous best price available anywhere in the market. It represents the best price available according to the synchronized, consolidated view at a specific point in time. This distinction is subtle but vital for managing user expectations and avoiding regulatory challenges.

- Transparency of Latency ▴ A courageous strategy would be to make the latency of the system itself transparent. The CTP could publish metrics on the time it takes to receive data from each venue and the total processing time required to create the consolidated feed. This would provide users with a clear understanding of the data’s timeliness and help them to use it appropriately.
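
The distinction between an instantaneous best price and a best price "as of" a synchronized point in time can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a specification: the `Quote` type, the nanosecond timestamps, and the `consolidated_bbo` function are hypothetical names, and the sketch ignores sizes, locked or crossed markets, and stale-quote handling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    venue: str
    bid: float
    ask: float
    source_ts_ns: int  # timestamp applied at the venue, in nanoseconds


def consolidated_bbo(latest_quotes: dict[str, Quote], as_of_ns: int):
    """Best bid and offer across venues, using only quotes stamped at or before as_of_ns.

    Returns ((bid_venue, bid), (ask_venue, ask), as_of_ns), or None if no quote
    is visible at that point in time.
    """
    visible = [q for q in latest_quotes.values() if q.source_ts_ns <= as_of_ns]
    if not visible:
        return None
    best_bid = max(visible, key=lambda q: q.bid)
    best_ask = min(visible, key=lambda q: q.ask)
    return (best_bid.venue, best_bid.bid), (best_ask.venue, best_ask.ask), as_of_ns


if __name__ == "__main__":
    quotes = {
        "VENUE_A": Quote("VENUE_A", 100.00, 100.05, source_ts_ns=1_000),
        "VENUE_B": Quote("VENUE_B", 100.02, 100.06, source_ts_ns=2_000),
    }
    print(consolidated_bbo(quotes, as_of_ns=1_500))  # VENUE_B's quote is not yet visible
    print(consolidated_bbo(quotes, as_of_ns=2_500))  # both venues contribute
```

Returning the `as_of_ns` value alongside the prices makes the point in the text explicit: the consolidated BBO is always a statement about a particular moment, not about "now".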

The Governance Dilemma ▴ Forging Consensus from Fragmentation

The final strategic pillar is the creation of a robust governance framework to overcome the technical obstacles of data harmonization. This is a political and diplomatic challenge as much as a technical one. The strategy must be to establish the CTP as a neutral and authoritative body with the power to set and enforce standards.

This requires a multi-pronged approach:

- Establishing a Common Data Dictionary ▴ The CTP, in collaboration with regulators and market participants, must develop and maintain a comprehensive data dictionary that defines every possible data field and flag in the consolidated feed. This would eliminate ambiguity and ensure that data from different sources is interpreted consistently.

- Mandating Data Quality Standards ▴ The governance framework must include strict data quality standards that all contributing venues are required to meet. This would include rules for data formatting, accuracy, and completeness. The CTP would need the authority to monitor compliance and impose penalties for non-compliance.

- Creating a Stakeholder-Led Governance Body ▴ To ensure buy-in from all parties, the governance of the CTP should be overseen by a board or committee that includes representatives from all major stakeholder groups ▴ exchanges, asset managers, brokers, and public interest groups. This would help to ensure that the rules are fair and that the CTP operates in the best interests of the market as a whole.
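
A common data dictionary is, at its simplest, a lookup keyed on both the venue and the raw flag, so that an identical code from two venues can resolve to different meanings. The sketch below assumes hypothetical venue names, raw flag values, and a three-value `TradeCondition` vocabulary purely for illustration; a real dictionary would be far larger and governed by the CTP.

```python
from enum import Enum


class TradeCondition(Enum):
    """Hypothetical common vocabulary for trade conditions."""
    REGULAR = "regular"
    OPENING_AUCTION = "opening_auction"
    OFF_BOOK = "off_book"


# Hypothetical per-venue mappings: the raw flag "X" means different things
# at the two venues, which is exactly the ambiguity a dictionary resolves.
VENUE_FLAG_MAP = {
    "VENUE_A": {"R": TradeCondition.REGULAR, "X": TradeCondition.OFF_BOOK},
    "VENUE_B": {"0": TradeCondition.REGULAR, "X": TradeCondition.OPENING_AUCTION},
}


def normalize_flag(venue: str, raw_flag: str) -> TradeCondition:
    """Translate a venue-specific flag into the common vocabulary.

    Unknown venues or flags raise ValueError rather than being guessed at,
    so that gaps in the dictionary surface as data quality issues.
    """
    try:
        return VENUE_FLAG_MAP[venue][raw_flag]
    except KeyError:
        raise ValueError(f"No dictionary entry for venue={venue!r} flag={raw_flag!r}") from None


if __name__ == "__main__":
    print(normalize_flag("VENUE_A", "X"))
    print(normalize_flag("VENUE_B", "X"))
```

Rejecting unmapped flags instead of passing them through is a design choice in this sketch; it trades availability for consistency, which matches the emphasis on enforceable standards above.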

Ultimately, the strategy for implementing a consolidated tape is one of managed expectations and pragmatic compromise. It requires balancing the conflicting interests of powerful market players, accepting the hard limits imposed by physics, and methodically building the social and technical consensus required to create a single, authoritative view of a fragmented market.

Execution

The execution phase of implementing a consolidated tape for equities translates the strategic balancing act into a concrete operational and technical reality. This is where the architectural plans confront the granular complexities of market microstructure. The execution is not a singular event but a continuous process of data engineering, quantitative analysis, and system integration, all governed by a robust operational playbook. Success hinges on a meticulous, phased approach that can navigate the immense technical and political hurdles involved in building a system that serves as the market’s central nervous system.

The Operational Playbook

A hypothetical Consolidated Tape Provider (CTP) would need to execute a precise operational playbook to move from concept to a functioning market utility. This playbook involves a sequence of dependent steps, each with its own set of challenges and required outputs. The execution must be methodical, transparent, and built on a foundation of regulatory authority.

- Phase 1 Secure Regulatory Mandate and Establish Governance ▴ The absolute prerequisite for execution is a clear legal and regulatory mandate. This involves working with securities regulators to establish the CTP’s authority to compel data contributions from all trading venues. Concurrently, a multi-stakeholder governance body must be formed, comprising representatives from exchanges, buy-side firms, sell-side firms, and data vendors. This body’s first task is to ratify the CTP’s charter and the foundational rules for data contribution and quality.

- Phase 2 Develop and Publish the Technical Specification ▴ This is the core data engineering phase. The CTP’s technical team must draft a highly detailed technical specification document. This document, which would run to hundreds of pages, defines every aspect of the data feed, including the message formats (e.g. FIX or a proprietary binary protocol), the precise definition of every data field, the required time-stamping accuracy (e.g. synchronized to UTC within 10 microseconds), and the physical connectivity requirements for contributing venues.

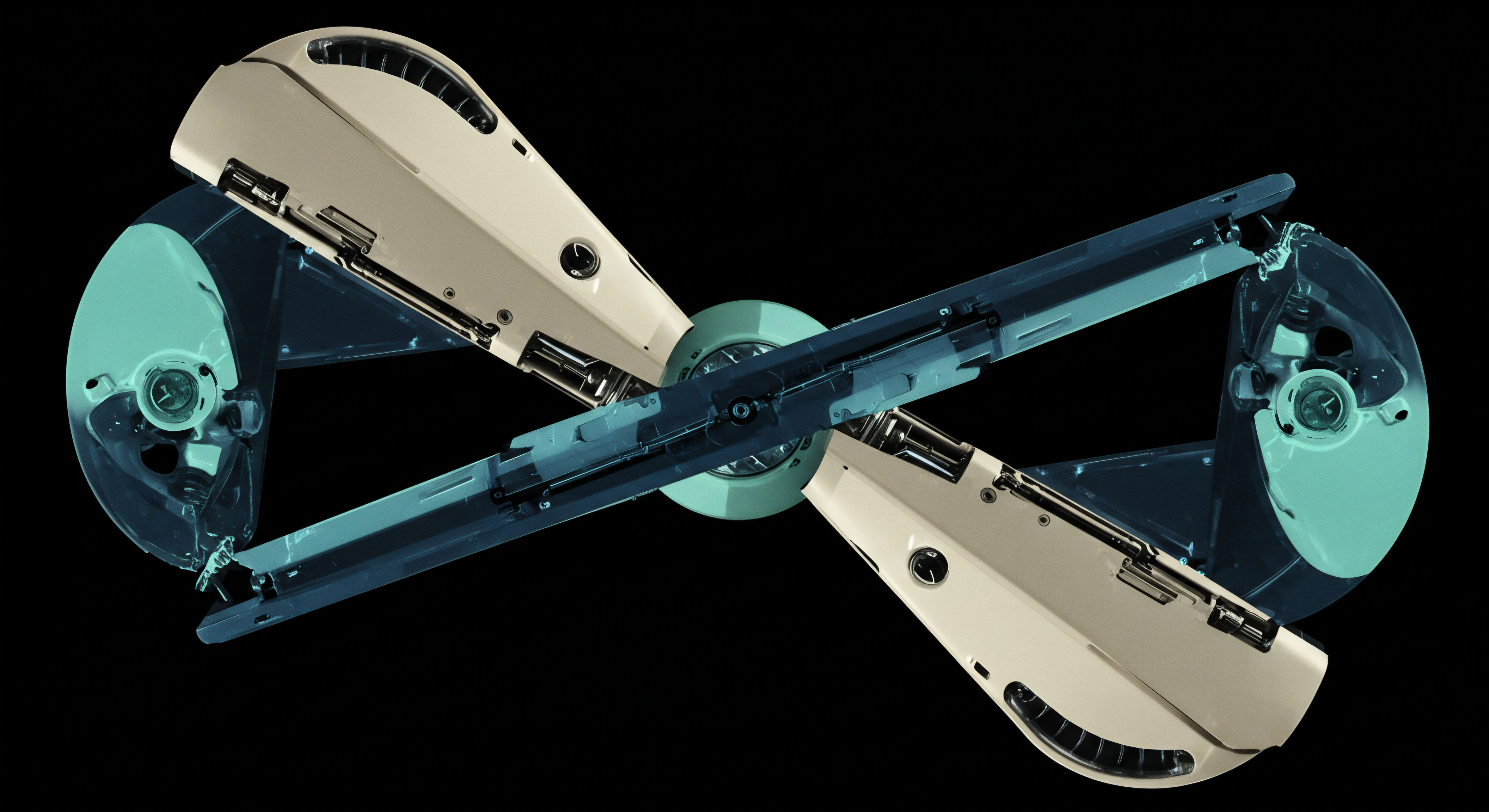

- Phase 3 Build the Consolidation and Dissemination Infrastructure ▴ This phase involves the physical construction of the CTP’s data centers. The architecture requires redundant, geographically dispersed data centers to ensure high availability. Within each data center, the CTP must deploy a sophisticated technology stack, including high-speed network switches, servers with hardware-accelerated processing capabilities, and a distributed software system for ingesting, normalizing, sequencing, consolidating, and disseminating the data.

- Phase 4 Onboard and Certify Contributing Venues ▴ This is a painstaking process of working with each individual trading venue to establish a secure, high-speed connection to the CTP’s data centers. Each venue must undergo a rigorous certification process to ensure that its data feed complies with the CTP’s technical specification. This involves testing for data quality, format compliance, and timestamp accuracy.

- Phase 5 Launch and Monitor ▴ The launch of the consolidated tape would likely be phased, starting with a limited set of symbols or a pilot group of users. Once live, the CTP’s network operations center (NOC) must monitor the system 24/7, tracking data quality, system latency, and connectivity with all venues. The CTP must have a clear protocol for handling data quality issues and outages at contributing venues.
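
The timestamp-accuracy part of certification in Phase 4 can be sketched as a simple tolerance check. The 10-microsecond figure comes from the example specification in Phase 2; everything else is assumed for illustration, including the function names, the venue names, and the premise that the CTP has already measured each venue's clock offset against UTC by some independent means.

```python
TOLERANCE_US = 10.0  # example tolerance from the technical specification


def certify_venue(offsets_us: list[float], tolerance_us: float = TOLERANCE_US) -> bool:
    """Pass only if there are samples and every sampled offset is within tolerance."""
    return bool(offsets_us) and all(abs(o) <= tolerance_us for o in offsets_us)


def certification_report(samples: dict[str, list[float]],
                         tolerance_us: float = TOLERANCE_US) -> dict[str, bool]:
    """Map each venue to a pass/fail result for timestamp accuracy."""
    return {venue: certify_venue(offsets, tolerance_us)
            for venue, offsets in samples.items()}


if __name__ == "__main__":
    # Hypothetical measured offsets from UTC, in microseconds.
    measured = {
        "VENUE_A": [1.2, -0.8, 2.5],
        "VENUE_B": [4.0, 12.3, -3.1],  # one sample drifts past the tolerance
        "VENUE_C": [],                 # no samples collected, so it cannot pass
    }
    for venue, passed in certification_report(measured).items():
        print(venue, "PASS" if passed else "FAIL")
```

A venue with no samples fails by construction, on the assumption that absence of evidence should not count as compliance.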

Quantitative Modeling and Data Analysis

The execution of a consolidated tape is a data-intensive endeavor. Quantitative analysis is not just a tool for post-facto analysis; it is essential for designing the system and justifying its commercial model. The following tables provide a glimpse into the type of modeling required.

Table 1 Latency Impact Analysis

This table models the cumulative latency introduced during the consolidation process. It demonstrates quantitatively why a consolidated feed is inherently slower than a direct feed from a single exchange. The model assumes a CTP located in a central data center and receiving feeds from two different venues. All times are in microseconds (µs).

| Process Step | Venue A (Local) Latency (µs) | Venue B (Remote) Latency (µs) | Notes |
|---|---|---|---|
| Internal Venue Processing | 15 | 15 | Time from order match to data packet leaving the venue’s systems. |
| Network Transit to CTP | 50 | 1,500 | Assumes Venue A is in the same data center park; Venue B is in a different city. |
| CTP Ingestion & Normalization | 25 | 25 | Time for the CTP’s systems to parse the incoming data. |
| Consolidation & Sequencing Wait | 1,450 | 0 | The system must wait for the slower packet from Venue B to arrive. |
| Consolidated Feed Dissemination | 10 | 10 | Time to send the consolidated packet to a user in the same data center. |
| Total Latency to User | 1,550 µs | 1,550 µs | The final feed is constrained by the slowest input. A direct feed from Venue A would be ~65 µs. |
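
The arithmetic in Table 1 can be reproduced with a short model. The function and key names below are hypothetical, and the model makes the same simplifying assumption as the table: the consolidated packet is released only once the slowest venue's data has been ingested and normalized.

```python
def consolidated_latency_us(venues: dict[str, dict[str, float]],
                            ingest_us: float,
                            disseminate_us: float):
    """Return (total latency to user, per-venue sequencing wait), all in microseconds."""
    # Time at which each venue's data is normalized and ready at the CTP.
    ready = {name: v["internal"] + v["transit"] + ingest_us
             for name, v in venues.items()}
    # The consolidated view cannot be released before the slowest input is ready.
    release = max(ready.values())
    waits = {name: release - t for name, t in ready.items()}
    return release + disseminate_us, waits


if __name__ == "__main__":
    table_1 = {
        "Venue A": {"internal": 15, "transit": 50},
        "Venue B": {"internal": 15, "transit": 1_500},
    }
    total, waits = consolidated_latency_us(table_1, ingest_us=25, disseminate_us=10)
    print("total latency to user (µs):", total)
    print("sequencing wait per venue (µs):", waits)
    # Direct feed from Venue A for comparison: internal processing plus transit.
    print("direct feed from Venue A (µs):",
          table_1["Venue A"]["internal"] + table_1["Venue A"]["transit"])
```

With the table's inputs this yields a total of 1,550 µs, a wait of 1,450 µs for Venue A and 0 µs for Venue B, and a 65 µs direct feed from Venue A, matching the figures above.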

Table 2 Data Harmonization Cost Benefit Matrix

This matrix provides a qualitative framework for prioritizing which data elements to harmonize. It weighs the difficulty and cost of achieving consensus and technical implementation against the benefit to market transparency and data usability.

| Data Element | Harmonization Cost/Difficulty | Transparency Benefit | Execution Priority |
|---|---|---|---|
| Unique Instrument Symbology | High | Very High | Critical Path |
| Trade Condition Flags | Medium | High | High |
| Corporate Action Adjustments | High | Medium | Medium |
| Quote/Order Capacity Flags | Low | Medium | High |
| Regulatory Trade Identifiers | Medium | Very High | Critical Path |

Predictive Scenario Analysis

To illustrate the execution challenges, consider a hypothetical case study. A consortium, “EuroTape,” wins the bid to become the official equities CTP for the European Union. Their operational plan immediately confronts reality.

In the first six months, EuroTape’s primary struggle is with data contribution agreements. While the regulatory mandate compels exchanges to provide data, the commercial terms are left to negotiation. The German and French national exchanges demand a high revenue-sharing percentage, arguing their markets represent the bulk of liquidity.

Simultaneously, smaller multilateral trading facilities (MTFs) argue for a per-message contribution fee, which would be more favorable to their lower-volume models. The negotiations drag on, delaying the onboarding process by three months and forcing EuroTape to revise its revenue projections downwards.

Technically, the biggest hurdle proves to be time synchronization. While the technical specification mandates PTP, the audit during the certification phase reveals that three of the seventeen contributing venues have clock drift issues that exceed the 10-microsecond tolerance. This forces a difficult decision ▴ delay the launch to allow these venues to upgrade their infrastructure, or launch with a known data integrity issue for a subset of the market? Under pressure from regulators, they choose to launch but must implement a complex software patch to flag and potentially isolate data from the non-compliant venues, adding 5 microseconds of processing latency to every single message they handle.

Upon launch, the user feedback is bifurcated. Large asset managers praise the simplified data access, reporting that their data acquisition costs have dropped by 30%. However, quantitative hedge funds immediately complain that the EuroTape feed is, on average, 250 microseconds slower than their direct feeds from the major exchanges, making it unusable for their most latency-sensitive strategies.

This confirms the two-tier market structure predicted by the latency models. The execution for EuroTape becomes a continuous balancing act ▴ managing political relationships with data contributors, fighting an ongoing battle for data quality, and carefully managing the expectations of a diverse user base with conflicting needs.

How Should a System Integration and Technological Architecture Be Designed?

The technological architecture of a CTP is a high-performance, distributed system designed for resilience, accuracy, and speed. The design must prioritize data integrity and determinism.

- Ingestion Layer ▴ This layer consists of a series of dedicated servers, each responsible for terminating the physical connection from a specific trading venue. These servers run specialized network interface cards (NICs) capable of hardware-level timestamping of incoming packets, minimizing jitter and providing a highly accurate time of arrival.

- Normalization and Decoding Layer ▴ Once ingested, the raw data packets are passed to a farm of compute servers. These servers run the software that decodes the venue’s proprietary data format and translates it into the CTP’s common internal format. This is one of the most CPU-intensive parts of the process.

- Sequencing and Consolidation Layer ▴ The normalized data streams are then fed into the core of the system ▴ the sequencer. This is a fault-tolerant state machine that orders all incoming messages based on their source timestamp. Once ordered, the consolidation engine processes the stream of events, calculating the consolidated BBO and maintaining the official record of the market.

- Dissemination Layer ▴ The final consolidated data feed is then multicast from a set of dissemination servers to all subscribers. The system must support multiple distribution protocols and physical connection types to cater to a wide range of clients, from major investment banks co-located in the data center to cloud-based analytical platforms.
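
The core idea of the sequencing layer, ordering messages by source timestamp even when they arrive out of order, can be sketched with a priority queue and a fixed hold window. This is a deliberately simplified stand-in: the `Sequencer` class, its `hold_us` parameter, and the message fields are assumptions for illustration, and the sketch has none of the fault tolerance or replication the text calls for.

```python
import heapq


class Sequencer:
    """Releases messages in source-timestamp order after a fixed hold window."""

    def __init__(self, hold_us: int):
        # How long to hold a message so that slower venues can catch up.
        self.hold_us = hold_us
        self._heap = []
        self._counter = 0  # tie-breaker so equal timestamps keep arrival order

    def push(self, source_ts_us: int, venue: str, payload: str) -> None:
        """Buffer a normalized message keyed on its venue-applied timestamp."""
        heapq.heappush(self._heap, (source_ts_us, self._counter, venue, payload))
        self._counter += 1

    def release(self, now_us: int) -> list[tuple[int, str, str]]:
        """Pop every buffered message stamped at or before now_us - hold_us."""
        cutoff = now_us - self.hold_us
        out = []
        while self._heap and self._heap[0][0] <= cutoff:
            ts, _, venue, payload = heapq.heappop(self._heap)
            out.append((ts, venue, payload))
        return out


if __name__ == "__main__":
    seq = Sequencer(hold_us=1_500)
    seq.push(100, "VENUE_B", "trade-b")  # arrives first
    seq.push(40, "VENUE_A", "trade-a")   # arrives second but was stamped earlier
    print(seq.release(now_us=1_000))     # nothing is old enough to release yet
    print(seq.release(now_us=1_700))     # both released, ordered by source timestamp
```

The hold window is the sequencing wait in another form: a larger value tolerates slower or more distant venues at the cost of added latency for every message, and a message arriving later than the window allows would be released out of order.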

The integration with the broader market ecosystem is critical. The CTP must provide clear APIs and software development kits (SDKs) to help subscribers integrate the feed into their order management systems (OMS), execution management systems (EMS), and algorithmic trading engines. The execution of a consolidated tape is a monumental undertaking in systems engineering, requiring a deep understanding of both the technology of high-performance computing and the intricate, often conflicting, dynamics of the market it serves.


Reflection

Recalibrating the Definition of Central

The pursuit of a consolidated tape forces a fundamental reflection on the architecture of modern financial markets. It is an attempt to impose a centralized, logical order upon a system that has become inherently decentralized and physically distributed. The knowledge gained through this process reveals that the primary obstacles are not bugs to be fixed, but features of the current market design. The speed of light, the profit motive, and the complexity of multi-agent coordination are immutable constraints.

Therefore, the strategic question for any market participant shifts. How does your own operational framework account for these realities? How is your information acquisition strategy designed to navigate a world where a single source of truth is an approximation, and timeliness is relative?

Viewing the consolidated tape not as a perfect solution, but as one component in a larger system of intelligence, is the first step. The ultimate operational edge comes from architecting a system that understands the limitations of each component and exploits the arbitrage between them.
