Concept

The selection of a market data feed is a foundational architectural decision that dictates the operational capacity and strategic posture of any trading entity. It is the primary conduit through which the market’s state is perceived. The technological distinctions between a low-latency, direct feed and a consolidated public data feed represent two fundamentally different philosophies of market engagement.

One is an instrument of competitive, high-frequency participation, engineered for speed and granularity. The other is a system of public record, designed for broad accessibility and regulatory compliance. Understanding the chasm between these two architectures is the first principle in designing a trading system that aligns with its intended strategy, whether that strategy is predicated on microsecond-level reactions or long-term value assessment.

A consolidated public data feed, often referred to as the Securities Information Processor (SIP) in U.S. equities, functions as a public utility. Its purpose is to create a single, unified view of the market by aggregating top-of-book quotes and last-sale data from numerous, geographically dispersed trading venues. The technological process involves collecting data from each exchange, transmitting it to a central processing facility, sequencing the messages, calculating a National Best Bid and Offer (NBBO), and then disseminating this unified stream to the public. This entire process, by design, introduces latency.
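The NBBO step in that pipeline can be sketched in a few lines. This is a minimal illustration, not how any SIP is actually implemented. It assumes each venue's top-of-book quote arrives as a simple record with prices in integer cents. The `VenueQuote` type and the venue codes are hypothetical, and real consolidation applies eligibility and tie-breaking rules that this sketch replaces with "first quote wins on a price tie."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VenueQuote:
    venue: str      # hypothetical venue code
    bid_cents: int  # venue's best bid, integer cents
    bid_size: int   # shares displayed at that bid
    ask_cents: int  # venue's best offer, integer cents
    ask_size: int   # shares displayed at that offer


def compute_nbbo(quotes):
    """Return (bid_cents, bid_size, bid_venue, ask_cents, ask_size, ask_venue).

    Best bid = highest bid across venues; best offer = lowest offer.
    max()/min() return the first extreme element, so on a price tie the
    earlier quote in the list wins (a simplification for illustration).
    """
    if not quotes:
        raise ValueError("need at least one venue quote")
    best_bid = max(quotes, key=lambda q: q.bid_cents)
    best_ask = min(quotes, key=lambda q: q.ask_cents)
    return (best_bid.bid_cents, best_bid.bid_size, best_bid.venue,
            best_ask.ask_cents, best_ask.ask_size, best_ask.venue)


quotes = [
    VenueQuote("N", 10001, 500, 10003, 200),
    VenueQuote("Q", 10000, 900, 10002, 300),
]
print(compute_nbbo(quotes))
```

With these two sample quotes, the NBBO pairs venue N's bid ($100.01) with venue Q's offer ($100.02). Neither venue shows that combined market on its own.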

The system is built for comprehensiveness and standardization, ensuring that all market participants have access to a baseline view of prices. It is an architecture of aggregation, where the primary technological challenge is creating a fair and orderly composite view from a multitude of disparate sources.

A consolidated feed provides a standardized, top-of-book market view, prioritizing accessibility over raw speed.

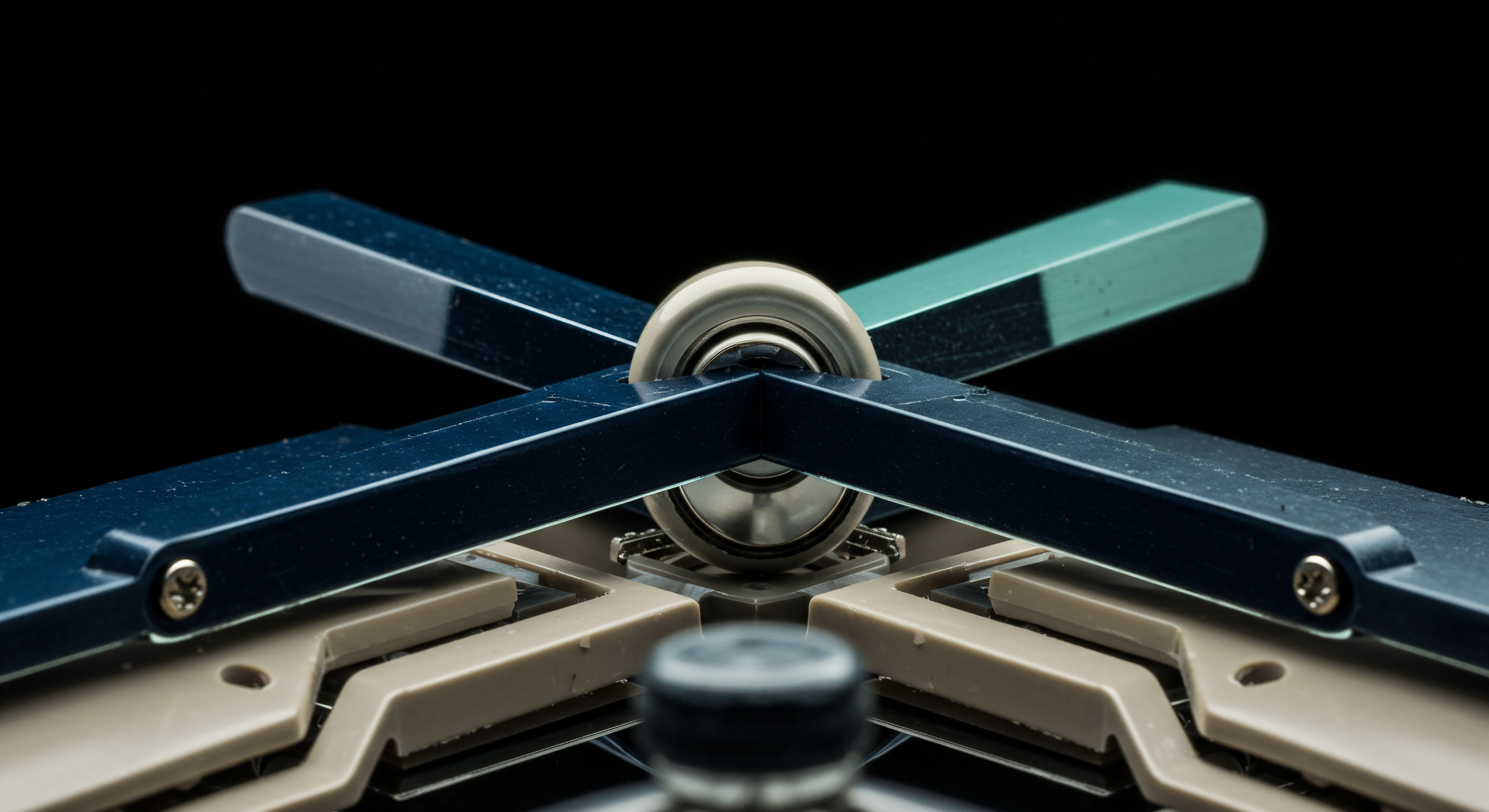

Conversely, a low-latency feed is an architecture of immediacy. It bypasses the entire aggregation and consolidation process. A firm using a direct feed receives data straight from an exchange’s own market data systems, often through a physical fiber-optic cross-connect from servers placed inside the exchange’s data center, a practice known as colocation. The data transmitted is the raw, unprocessed firehose of market activity on that venue. Depth-of-book products include the full order book, which reveals buy and sell orders at every price level.

The technology is engineered to minimize every possible source of delay, from the network path to the data serialization format. Protocols are typically proprietary, highly efficient binary formats, and processing is often handled by specialized hardware like Field-Programmable Gate Arrays (FPGAs) capable of operating in nanoseconds. This feed is a system for participants who believe that information has a temporal value and that seeing the market’s true state fractions of a second before the public consolidated feed is a distinct competitive advantage.

The technological divergence, therefore, is not merely a matter of degree; it is a matter of kind. The consolidated feed delivers a processed summary of what the market was a moment ago (historically, up to a few milliseconds earlier). The low-latency feed delivers the raw, unfiltered record of what the market is now, delayed only by the physical path and the firm’s own processing.

This distinction in temporal resolution and data granularity shapes every subsequent decision in the trading lifecycle, from signal generation and strategy design to risk management and execution routing. Choosing a feed is choosing the reality upon which the firm will operate.

Strategy

The strategic implications of selecting a market data architecture are profound, directly shaping a firm’s competitive landscape and the universe of viable trading strategies. The choice between a consolidated and a low-latency feed is a commitment to a specific mode of market interaction. This decision dictates not only the speed of engagement but also the very nature of the opportunities a firm can perceive and act upon.

A consolidated feed supports strategies where the primary analytical inputs are not time-sensitive to the microsecond level. A low-latency feed is the prerequisite for strategies that exploit transient market inefficiencies and ephemeral pricing discrepancies.

Defining the Strategic Arena through Data

The data feed is the lens through which a firm views the market. A consolidated feed, providing only the top-of-book (the best bid and offer), offers a narrow, simplified picture. This is sufficient for strategies that rely on longer-term signals, fundamental analysis, or macroeconomic trends.

A retail investor, a traditional asset manager, or a pension fund executing a large portfolio rebalance over several hours will find the NBBO provided by a consolidated feed perfectly adequate for their execution benchmarks. Their strategic goal is not to outrun other algorithms but to achieve a fair price over time, and the SIP provides the reference point for that fairness.

A low-latency, direct feed, in contrast, provides a high-resolution, multi-dimensional view of the market microstructure. By showing the full depth of the order book, it reveals the supply and demand dynamics at every price level. This information is the lifeblood of sophisticated, short-horizon strategies. Market makers, statistical arbitrageurs, and high-frequency traders build their entire business model on this granular data.

They can infer the presence of large or hidden orders from how displayed liquidity is executed and replenished, anticipate short-term price movements based on order book imbalances, and structure their own orders to minimize market impact. For these participants, the top-of-book data from a consolidated feed is an incomplete and dangerously lagging indicator of the true state of liquidity.
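Order book imbalance is one way such depth information is summarized. A common form compares displayed bid size with displayed ask size over the top N levels. The sketch below uses an unweighted sum over a hypothetical book. Both the exact definition and the choice of N are assumptions, not a standard. With these numbers, the top level leans toward buyers while the top three levels lean strongly toward sellers. A top-of-book feed cannot reveal that difference.

```python
def book_imbalance(bids, asks, levels=3):
    """Imbalance of displayed size over the top `levels` price levels.

    bids and asks are lists of (price_cents, size), best price first.
    Returns a value in [-1, 1]; positive means more displayed bid size.
    """
    bid_qty = sum(size for _, size in bids[:levels])
    ask_qty = sum(size for _, size in asks[:levels])
    total = bid_qty + ask_qty
    if total == 0:
        return 0.0  # empty book: no information either way
    return (bid_qty - ask_qty) / total


# Hypothetical book: a small best offer backed by heavy selling interest deeper in.
bids = [(10001, 500), (10000, 400), (9999, 300)]
asks = [(10002, 300), (10003, 2000), (10004, 2500)]

print(book_imbalance(bids, asks, levels=1))  # top of book only
print(book_imbalance(bids, asks, levels=3))  # three levels of depth
```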

How Does Data Feed Choice Impact Algorithmic Strategy?

The algorithm is the engine, but the data feed is the fuel. The quality of the fuel determines the performance of the engine. An algorithmic strategy designed to capture the spread between a security and its derivatives, for example, is entirely dependent on receiving price updates for all components simultaneously and with the lowest possible latency.

If one leg of the trade is perceived through a slow, consolidated feed while the other is seen directly, the temporal mismatch creates significant execution risk, because the spread the algorithm computes mixes a current price with a stale one. The arbitrage opportunity may vanish in the time it takes for the consolidated data to arrive.
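One way to contain that risk is to refuse to price the pair when either leg's quote is older than a set tolerance. The following is a minimal sketch. The `LegQuote` record, the `pair_spread` function, the hedge ratio, and the tolerance are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LegQuote:
    mid: float    # midpoint price of the leg
    recv_ns: int  # local receive time, nanoseconds


def pair_spread(leg_a: LegQuote, leg_b: LegQuote, hedge_ratio: float,
                now_ns: int, max_age_ns: int) -> Optional[float]:
    """Spread a - hedge_ratio * b, or None if either leg's quote is too old.

    Refusing to compute a spread from a stale leg avoids acting on a
    price that may already have moved.
    """
    for leg in (leg_a, leg_b):
        if now_ns - leg.recv_ns > max_age_ns:
            return None
    return leg_a.mid - hedge_ratio * leg_b.mid
```

Consider a 50 µs tolerance (a hypothetical value). If one leg was last updated 620 µs ago, `pair_spread` returns `None` instead of a spread built on a stale price.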

The table below outlines the alignment between specific trading strategies and the requisite data feed architecture. It illustrates how the choice of data feed is a primary determinant of strategic capability.

| Trading Strategy | Consolidated Feed Suitability | Low-Latency Feed Necessity | Rationale |
|---|---|---|---|
| Long-Term Investment | High | Low | Execution is based on fundamental value, not intraday price fluctuations. The NBBO is a sufficient benchmark for best execution over long time horizons. |
| VWAP/TWAP Execution | High | Medium | These algorithms aim to match a volume-weighted or time-weighted average price. While lower latency can improve slicing, the benchmark itself is computed from trades over the whole execution window rather than from microsecond-level quote changes, making the SIP acceptable. |
| Market Making | Low | Critical | Requires seeing the full order book to manage inventory and quote placement effectively. Relying on a lagging top-of-book feed would expose the market maker to being picked off by faster traders. |
| Statistical Arbitrage (high-frequency) | Very Low | Critical | Profitability is derived from small, fleeting price discrepancies between correlated assets. At high frequency, the half-life of these opportunities is often measured in microseconds, demanding the lowest achievable latency. |
| Liquidity Detection | Low | High | Algorithms designed to uncover large institutional orders by analyzing changes deep within the order book cannot function with only top-of-book data. |
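The VWAP/TWAP row can be made concrete. A VWAP-style scheduler typically divides a parent order across time intervals in proportion to expected volume, and a TWAP schedule is the special case of a flat profile. The sketch below uses a hypothetical intraday volume profile. It applies integer largest-remainder rounding, so the child quantities always sum exactly to the parent order. The schedule depends on expected volume over minutes or hours, not on microsecond quote changes.

```python
def vwap_schedule(parent_qty, volume_profile):
    """Split parent_qty across intervals in proportion to expected volume.

    Uses integer largest-remainder rounding, so the child quantities are whole
    shares and always sum exactly to parent_qty. A flat profile gives TWAP.
    Inputs are assumed to be non-negative integers.
    """
    total = sum(volume_profile)
    if total <= 0:
        raise ValueError("volume profile must have a positive total")
    floors, remainders = [], []
    for v in volume_profile:
        q, r = divmod(parent_qty * v, total)
        floors.append(q)
        remainders.append(r)
    leftover = parent_qty - sum(floors)
    # Give leftover shares to the intervals with the largest remainders
    # (ties keep their original order because sorted() is stable).
    by_remainder = sorted(range(len(floors)), key=lambda i: remainders[i], reverse=True)
    for i in by_remainder[:leftover]:
        floors[i] += 1
    return floors


# Hypothetical U-shaped intraday profile (heavier at the open and close).
profile = [30, 20, 10, 10, 30]
print(vwap_schedule(10_000, profile))
print(vwap_schedule(1_000, [1, 1, 1]))  # flat profile, i.e. TWAP
```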

The Economic Tradeoff: A Cost-Benefit Analysis

The strategic decision is also an economic one. Consolidated feeds are, relatively speaking, inexpensive. They are mandated and regulated to provide a baseline of market access.

Low-latency direct feeds represent a significant financial and operational investment. The costs include:

- Exchange Fees ▴ Exchanges charge substantial fees for direct data access and even more for physical colocation within their data centers.

- Infrastructure Costs ▴ This includes leasing rack space, purchasing high-performance servers, specialized network cards, and FPGAs.

- Connectivity Costs ▴ Firms must pay for the physical fiber-optic cross-connects that link their servers to the exchange’s matching engine. For strategies requiring access to multiple exchanges in different geographic locations, this involves building or leasing a wide-area network.

- Development and Maintenance ▴ A team of highly skilled engineers is required to build and maintain the software and hardware systems capable of processing millions of messages per second.

The strategic calculus for a firm is whether the potential alpha generated by its latency-sensitive strategies justifies this substantial, ongoing expenditure. For a high-frequency trading firm, these costs are the price of doing business. For a long-term asset manager, they would be an unjustifiable expense. The choice of data feed, therefore, becomes a clear reflection of the firm’s business model and its position in the financial ecosystem.
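The calculus above reduces to a breakeven question: how much extra edge per share must latency-sensitive trading earn to pay for the infrastructure? The sketch below is minimal, and every input is hypothetical. The 252 trading days per year is a conventional assumption. The model also ignores capacity limits, risk, and the opportunity cost of capital.

```python
def breakeven_edge_per_share(annual_cost, shares_per_day, trading_days=252):
    """Incremental edge per share (in dollars) needed just to cover annual_cost."""
    return annual_cost / (shares_per_day * trading_days)


def annual_net_benefit(edge_per_share, shares_per_day, annual_cost, trading_days=252):
    """Extra annual P&L from the edge minus the annual infrastructure cost."""
    return edge_per_share * shares_per_day * trading_days - annual_cost


print(breakeven_edge_per_share(5_000_000, 2_000_000, trading_days=250))
```

In this hypothetical case, $5 million of annual cost is spread over 2 million shares a day for 250 days. The firm needs one cent per share of additional edge just to break even.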

Execution

Executing on a market data strategy requires a deep, granular understanding of the underlying technology and operational protocols. Moving from the strategic decision to implement a low-latency or consolidated feed to a functioning, reliable system is a complex undertaking. It involves a multi-stage process encompassing technological architecture, quantitative analysis, and system integration. This is the domain where theoretical advantages are forged into tangible operational capabilities.

The core difference in execution lies in the level of control and responsibility a firm assumes. Utilizing a consolidated feed is akin to consuming a managed service, while building a low-latency infrastructure is an act of systems engineering from the ground up.

The Operational Playbook

Implementing a market data solution is a structured process. For a firm transitioning to or building a low-latency infrastructure, the playbook involves several critical stages. Each stage requires meticulous planning and execution to ensure the final system is robust, scalable, and fit for purpose.

Phase 1: Needs Assessment and Vendor Selection

- Strategy Alignment ▴ The first step is to define the precise requirements of the trading strategies that will consume the data. What is the required latency tolerance in microseconds? Is full depth of book necessary, or just the top five levels? Which specific exchanges and asset classes must be covered?

- Build vs. Buy Analysis ▴ A firm must decide whether to build its entire data handling stack in-house, buy a turnkey solution from a vendor, or pursue a hybrid approach. Vendors like Exegy or ION MarketFactory offer managed services that can reduce the in-house engineering burden.

- Vendor Due Diligence ▴ If a vendor path is chosen, a rigorous evaluation process is necessary. This includes assessing the vendor’s network footprint, data normalization capabilities, API quality, and support model. Reference checks with existing clients are critical.

Phase 2: Infrastructure Deployment

- Colocation ▴ The firm must lease cabinet space within the primary data centers of the target exchanges (e.g. Mahwah for NYSE, Carteret for Nasdaq, Aurora for CME). This physical proximity is the first and most important step in reducing network latency.

- Hardware Procurement ▴ This involves selecting and purchasing servers optimized for low-latency processing (high clock speeds, specific cache architectures), network interface cards (NICs) that support kernel bypass, and potentially FPGAs for nanosecond-level decoding and filtering.

- Network Connectivity ▴ The firm’s engineers must work with the data center and exchange staff to provision physical fiber-optic cross-connects from their colocated servers directly to the exchange’s data distribution gateways. These cross-connects carry the “direct feed.”

Phase 3: Software Integration and Normalization

Each exchange broadcasts its direct feed in a unique, proprietary binary format. A significant engineering challenge is to create a “normalizer” or “feed handler” that can decode these disparate formats into a single, consistent internal data structure that the firm’s trading algorithms can understand. This process must be incredibly efficient to avoid introducing new latency.
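Python's standard `struct` module can illustrate the normalization step. Both wire layouts below are invented for this example. They do not correspond to NYSE, Nasdaq, or any other real specification. The sketch shows only one thing: two venues with different field orders, byte orders, and price scales can be mapped onto a single internal record. A production feed handler would do this in C, C++, or FPGA logic, not in Python.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire layouts for illustration only (not any real exchange spec).
# Venue A, big-endian: ts_ns u64 | symbol 8 bytes | side 1 byte | price u32 ($0.0001) | size u32
VENUE_A = struct.Struct(">Q8scII")
# Venue B, little-endian: size u32 | price i64 ($0.00000001) | ts_ns u64 | symbol 6 bytes | side 1 byte
VENUE_B = struct.Struct("<IqQ6sc")


@dataclass(frozen=True)
class NormalizedQuote:
    venue: str
    symbol: str
    side: str      # "B" = bid, "S" = offer
    price_e4: int  # common price unit: $0.0001
    size: int      # shares
    ts_ns: int     # venue timestamp, nanoseconds


def _text(raw: bytes) -> str:
    """Decode a fixed-width ASCII field, dropping space or NUL padding."""
    return raw.decode("ascii").rstrip(" \x00")


def decode_venue_a(buf: bytes) -> NormalizedQuote:
    ts_ns, symbol, side, price_e4, size = VENUE_A.unpack(buf)
    return NormalizedQuote("A", _text(symbol), _text(side), price_e4, size, ts_ns)


def decode_venue_b(buf: bytes) -> NormalizedQuote:
    size, price_e8, ts_ns, symbol, side = VENUE_B.unpack(buf)
    # Rescale to the common $0.0001 unit; assumes venue B never quotes finer ticks.
    return NormalizedQuote("B", _text(symbol), _text(side), price_e8 // 10_000, size, ts_ns)


msg_a = VENUE_A.pack(1_000, b"XYZ", b"B", 1_000_100, 500)       # $100.01 bid
msg_b = VENUE_B.pack(300, 10_002_000_000, 1_050, b"XYZ", b"S")  # $100.02 offer
print(decode_venue_a(msg_a))
print(decode_venue_b(msg_b))
```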

Quantitative Modeling and Data Analysis

The quantitative difference between the data delivered by a consolidated feed and a low-latency feed is stark. This difference can be modeled to understand its impact on trading outcomes. The two primary dimensions of this difference are information granularity and temporal fidelity.

The value of a low-latency feed is measured in the microseconds saved and the depth of market visibility gained.

Data Granularity: Full Depth vs. Top of Book

A consolidated feed provides a single data point for the best bid and offer. A direct feed provides a complete vector of bids and offers. The table below illustrates this difference with hypothetical data for a fictional stock (XYZ).

| Level | SIP/UTP NBBO Bid Price | SIP/UTP NBBO Bid Size | SIP/UTP NBBO Ask Price | SIP/UTP NBBO Ask Size | Direct Feed Bid Price | Direct Feed Bid Size | Direct Feed Ask Price | Direct Feed Ask Size |
|---|---|---|---|---|---|---|---|---|
| 1 | $100.01 | 500 | $100.02 | 300 | $100.01 | 500 | $100.02 | 300 |
| 2 | No data | No data | No data | No data | $100.00 | 1200 | $100.03 | 800 |
| 3 | No data | No data | No data | No data | $99.99 | 2500 | $100.04 | 1500 |
| 4 | No data | No data | No data | No data | $99.98 | 5000 | $100.05 | 3000 |
| 5 | No data | No data | No data | No data | $99.97 | 8000 | $100.06 | 4500 |

An algorithm consuming the consolidated feed only knows that it can sell 500 shares at $100.01. An algorithm with the direct feed sees that there is substantial demand (a “thick book”) just below the best bid, which might influence its decision to sell aggressively or to wait. It can also estimate the immediate price impact of a large marketable order against the venue’s displayed liquidity, a capability the consolidated feed user lacks entirely.
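A minimal sketch of "walking the book" on the XYZ bid side from the table shows what the depth view adds. Prices are in integer cents to avoid floating-point rounding. `sweep_bids` is a hypothetical helper. It treats the displayed book as static and ignores hidden liquidity, other venues, and the book's reaction to the order.

```python
def sweep_bids(bids, qty):
    """Fill a market sell of `qty` shares against displayed bids.

    bids: list of (price_cents, size), highest price first.
    Returns (filled_qty, notional_cents, last_price_cents). The book is
    treated as static: no hidden orders, other venues, or reaction to the sale.
    """
    filled = 0
    notional = 0
    last_price = None
    for price, size in bids:
        if filled >= qty:
            break
        take = min(size, qty - filled)
        filled += take
        notional += take * price
        last_price = price
    return filled, notional, last_price


# XYZ bid side from the direct-feed columns of the table, in cents.
xyz_bids = [(10001, 500), (10000, 1200), (9999, 2500), (9998, 5000), (9997, 8000)]

filled, notional, last_price = sweep_bids(xyz_bids, 5_000)
print(f"filled {filled} shares, average ${notional / filled / 100:.4f}, "
      f"last fill ${last_price / 100:.2f}")

# The consolidated view only shows the first level.
print(sweep_bids(xyz_bids[:1], 5_000))
```

Selling 5,000 shares fills across four levels at an average of about $99.9928, with the last shares filled at $99.98. A top-of-book view accounts for only the first 500 shares.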

Temporal Fidelity: The Cost of Latency

The Securities Information Processor (SIP) introduces latency by its very nature. Research has shown that this latency is not uniform and can be significant, especially in volatile markets. For high-volume stocks, a large percentage of trades reported by the SIP can be out of sequence, meaning a trade that occurred later in time is reported before a trade that occurred earlier.

This sequencing error can corrupt any analysis that depends on trade order, such as tick-by-tick returns or VWAP computed over short intervals. A direct feed, receiving data straight from the exchange’s matching engine, provides a true chronological record of that venue’s events.
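The effect of sequencing errors on tick returns is easy to demonstrate. The three prints below are hypothetical. The sketch assumes each print carries the executing venue's timestamp so it can be re-sorted. In delivery order, the prices appear to rise and then fall; in execution order, they rise twice. A VWAP over the complete window is unaffected because it does not depend on order. Sequencing errors affect VWAP only when trades are bucketed into short intervals by arrival time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trade:
    exch_ts_ns: int  # execution time stamped by the venue (assumed available)
    price: float
    size: int


def tick_returns(trades):
    """Simple returns between consecutive trades, in the order given."""
    return [b.price / a.price - 1.0 for a, b in zip(trades, trades[1:])]


def vwap(trades):
    """Volume-weighted average price over the whole list (order-independent)."""
    return sum(t.price * t.size for t in trades) / sum(t.size for t in trades)


# Hypothetical prints in the order a consolidated feed delivered them:
# the trade executed at t=200 ns arrives after the one executed at t=300 ns.
delivered = [Trade(100, 10.00, 100), Trade(300, 10.02, 100), Trade(200, 10.01, 100)]
executed = sorted(delivered, key=lambda t: t.exch_ts_ns)

print(tick_returns(delivered))  # up, then a spurious down-tick
print(tick_returns(executed))   # two up-ticks
print(vwap(delivered), vwap(executed))
```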

Predictive Scenario Analysis

Consider a hypothetical quantitative fund, “Helios Capital,” which has historically relied on a commercial, consolidated data feed for its mid-frequency statistical arbitrage strategies. Their models, while profitable, have seen decaying returns as the market has become more efficient. The principals decide that to compete, they must move into a higher-frequency domain, which requires a fundamental overhaul of their data infrastructure. Their target is a classic pairs trading strategy between two highly correlated technology stocks, traded across two different exchanges, NYSE and Nasdaq.

The first step for Helios is a nine-month, multi-million-dollar project to build out a low-latency infrastructure. They lease cabinets in the NYSE data center in Mahwah, NJ, and the Nasdaq data center in Carteret, NJ. They procure high-performance servers and Solarflare network cards. A dedicated network engineering team spends weeks working with data center staff to provision the physical cross-connects to NYSE’s Integrated Feed and Nasdaq’s TotalView ITCH feed.

Concurrently, a software team begins the arduous task of writing feed handlers. The NYSE feed uses one binary protocol, and the Nasdaq feed uses another. The team’s goal is to normalize both feeds into a common internal format with less than 5 microseconds of processing overhead.

Six months into the project, they have their first end-to-end data flow. They can now see the full order book for their target stocks directly from the source. The quantitative team begins analyzing the new data. They immediately discover patterns that were invisible on their old consolidated feed.

They can see large, passive orders resting several levels down in the book, providing clues to institutional intent. They also find that the true NBBO, as seen on the direct feeds, frequently flickers and changes faster than the SIP can report it. Their old system was, in effect, trading on a ghost of the market.

They model the financial impact. On their old system, a typical pairs trade opportunity might offer a theoretical profit of $0.01 per share. However, due to the 500-microsecond latency of their old feed, by the time their order reached the exchange, the price had often moved against them, resulting in an average realized profit of only $0.002 per share (a phenomenon known as slippage). With the new direct feed infrastructure, their total latency from market event to order entry is reduced to under 10 microseconds.

This allows them to capture the opportunity before it disappears. Their analysis shows the realized profit per share jumps to $0.008. While seemingly small, this improvement is earned on millions of shares per day. The latency reduction translates into an estimated $15 million in additional annual revenue, more than justifying the initial and ongoing cost of the infrastructure. The project is deemed a success: Helios Capital has transformed its operational and strategic capabilities.
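Simple arithmetic can cross-check the scenario's headline figure. The inputs are the stated realized edges of $0.002 and $0.008 per share. The calculation also assumes 252 trading days a year, a figure the scenario does not state. From these, it derives the daily volume needed for $15 million of additional revenue.

```python
from decimal import Decimal


def implied_daily_shares(extra_annual_revenue, old_edge, new_edge, trading_days=252):
    """Daily volume at which a per-share edge improvement yields the given revenue.

    Inputs are passed as strings so Decimal keeps them exact.
    """
    uplift = Decimal(new_edge) - Decimal(old_edge)  # dollars per share
    return Decimal(extra_annual_revenue) / (uplift * trading_days)


shares = implied_daily_shares("15000000", "0.002", "0.008")
print(f"{round(shares):,} shares per day")
```

The result is roughly 9.9 million shares a day, consistent with the scenario's "millions of shares per day."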

System Integration and Technological Architecture

The technological architecture of a low-latency data system is a specialized field of engineering focused on eliminating delay at every possible point in the data processing chain. This extends from the physical layer of network transmission to the application layer of software design.

What Is the Core Network Technology?

The foundation of low-latency communication is the physical network. In the context of financial markets, this has evolved into a competition between fiber optics and microwave technology.

- Fiber-Optic Cables ▴ These are the standard for high-bandwidth, reliable data transmission. Within a data center, connections are short, direct fiber runs. Between data centers (e.g. from Chicago to New York), firms lease dedicated “long-haul” fiber paths that are engineered to be as straight and short as possible.

- Microwave Transmission ▴ For the most latency-sensitive strategies, even the speed of light in glass, roughly two-thirds of its speed in a vacuum, is too slow. Microwave networks use chains of towers to transmit data through the air, where signals travel at nearly the vacuum speed of light along a more direct, “line-of-sight” path between trading hubs. On a route as long as Chicago to New York, this can cut on the order of a millisecond from the one-way journey compared with fiber.
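The microwave advantage follows directly from propagation speed. Light covers a kilometer in about 3.3 µs in a vacuum, and nearly as fast in air, but takes roughly 4.9 µs in silica fiber. The following is a minimal sketch. It assumes an effective fiber index of 1.47 and applies a hypothetical 1,000 km straight path to both media.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_us(path_km: float, refractive_index: float) -> float:
    """Propagation delay in microseconds for a signal moving at c / n over path_km."""
    speed_km_per_s = C_KM_PER_S / refractive_index
    return path_km / speed_km_per_s * 1e6


FIBER_N = 1.47  # assumed effective index of silica fiber
AIR_N = 1.0003  # approximate refractive index of air

route_km = 1_000  # hypothetical straight-line distance, same for both media
fiber_us = one_way_delay_us(route_km, FIBER_N)
microwave_us = one_way_delay_us(route_km, AIR_N)
print(f"fiber {fiber_us:.0f} us, microwave {microwave_us:.0f} us, "
      f"saving {fiber_us - microwave_us:.0f} us")
```

Actual differences vary by route. Real fiber routes are usually longer than the straight-line distance, and microwave links add per-hop equipment delay and weather sensitivity. The sketch captures only the physics of the medium.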

Hardware: The Role of FPGAs and Specialized NICs

Standard CPUs are versatile but can introduce latency through context switching and operating system overhead. To bypass this, high-frequency firms use specialized hardware.

- Field-Programmable Gate Arrays (FPGAs) ▴ These are semiconductor devices whose logic can be reconfigured after manufacture to perform a specific task. For market data, an FPGA can be programmed to do one thing extremely well: decode a specific binary feed protocol. It performs this task in hardware logic, achieving processing times in the nanosecond range, which software running on a general-purpose CPU generally cannot match.

- Kernel-Bypass NICs ▴ A standard Network Interface Card (NIC) passes incoming data packets to the operating system’s kernel, which then passes them to the user application. This journey introduces latency. Kernel-bypass NICs (from vendors like Solarflare or Mellanox) allow data to be delivered directly from the network wire to the application’s memory space, bypassing the OS kernel entirely and saving microseconds.

Software Protocols and Data Handling

The software stack is the final piece of the puzzle. It must be designed to be as “mechanically sympathetic” to the hardware as possible.

- UDP vs. TCP ▴ Some consolidated and vendor-redistributed feeds are delivered over TCP, a protocol that guarantees in-order delivery by retransmitting lost packets. Most direct exchange feeds use UDP multicast instead. UDP is a “fire-and-forget” protocol: it does not guarantee delivery, and it avoids the stalls TCP incurs while waiting for retransmissions, which would be an unacceptable latency cost. Lost data still matters, however. On incremental, order-by-order feeds, a single missed packet can leave the reconstructed book wrong until it is repaired. Exchanges therefore stamp packets with sequence numbers and commonly publish redundant “A” and “B” lines, so a feed handler can fill gaps from the second line or recover through a retransmission or snapshot service. Multicast allows the exchange to broadcast a single stream of data that many clients receive simultaneously, which is highly efficient.

- Data Serialization ▴ The format used to represent data “on the wire” is critical. Some vendor and retail distributions use more verbose, self-describing formats such as JSON. Direct feeds use compact binary formats with fixed field layouts, where every bit is optimized for fast machine parsing.
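Direct-feed handlers track sequence numbers to detect lost packets, and many exchanges publish the same data on redundant "A" and "B" multicast lines. The following is a minimal sketch of line arbitration. It assumes each packet carries a strictly increasing sequence number. The handler delivers the first copy of each sequence number in order and drops the duplicate from the other line. Packets that arrive ahead of a gap wait in a buffer until the gap fills. `LineArbiter` is hypothetical, and timeouts and recovery (retransmission requests or snapshots) are out of scope.

```python
class LineArbiter:
    """Merge redundant A/B copies of a sequenced packet stream."""

    def __init__(self, first_seq: int = 1):
        self.next_seq = first_seq  # next sequence number to deliver
        self.pending = {}          # seq -> payload, received ahead of a gap

    def on_packet(self, seq: int, payload):
        """Accept a packet from either line; return payloads now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # already seen via the other line: drop the duplicate
        self.pending[seq] = payload
        ready = []
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready

    def missing(self):
        """Sequence numbers absent below the highest buffered packet (open gaps)."""
        if not self.pending:
            return []
        return [s for s in range(self.next_seq, max(self.pending))
                if s not in self.pending]


# Line A lost packet 3; line B's copy of 3 arrives after A's copy of 4.
arbiter = LineArbiter()
delivered = []
for seq, payload in [(1, "a"), (1, "a"), (2, "b"), (4, "d"),
                     (2, "b"), (3, "c"), (4, "d"), (5, "e")]:
    delivered.extend(arbiter.on_packet(seq, payload))
print(delivered)
```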


Reflection

The architecture of a firm’s data infrastructure is ultimately an expression of its market philosophy. It reveals whether the firm views the market as a landscape of long-term value or as a high-dimensional space of fleeting opportunities. The decision to invest in nanosecond-level data processing is a declaration of intent to operate at the furthest frontier of price discovery. The reliance on a consolidated feed is a statement that the firm’s edge is derived from sources other than speed.

Understanding these systems is one component of a larger operational framework. The most resilient trading system is one where the data feed, the algorithmic strategies, the risk controls, and the human oversight are all designed as a single, coherent machine, calibrated to execute the firm’s core strategic vision.
