Concept

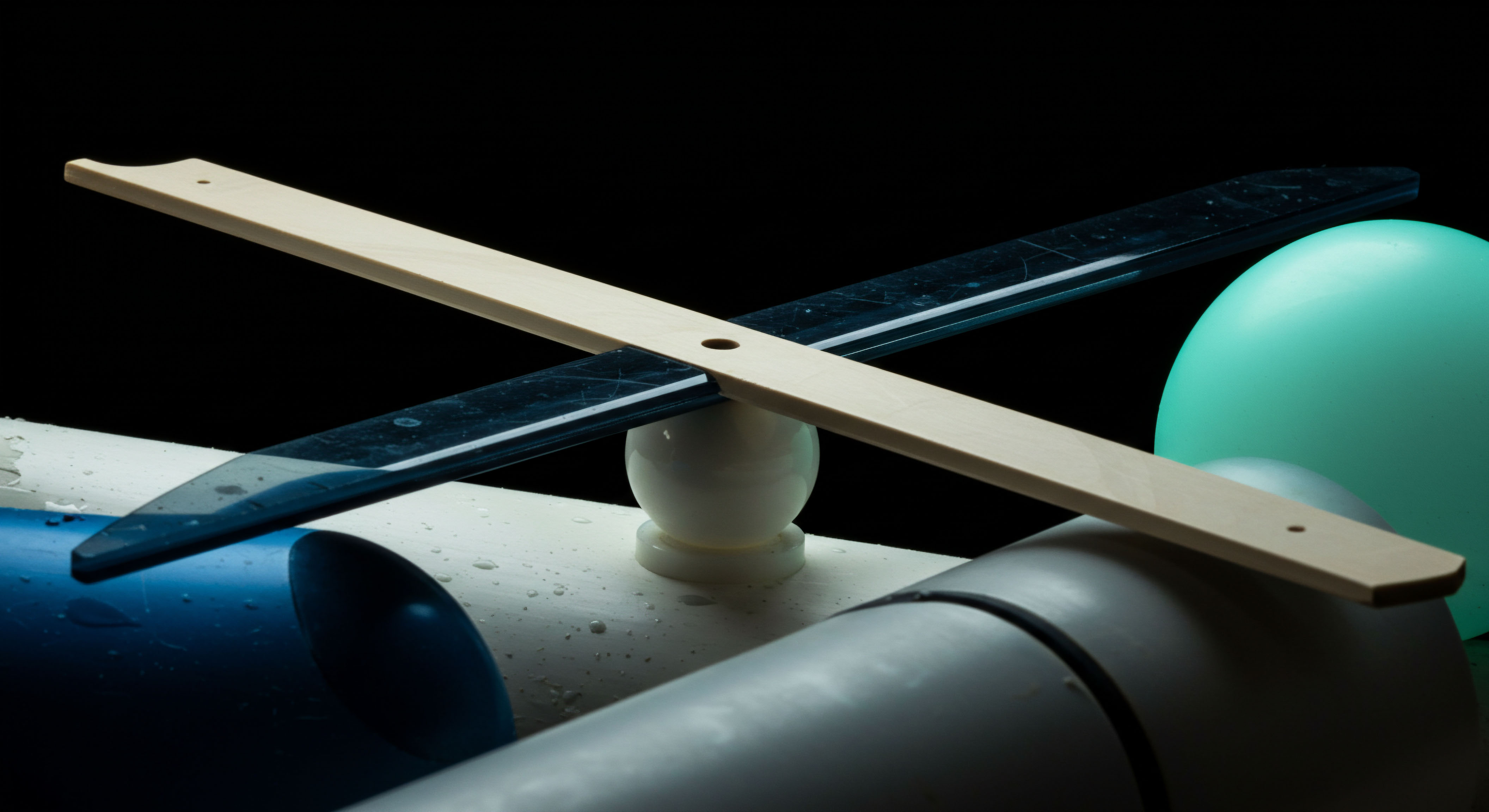

Integrating legacy systems for real-time monitoring presents a fundamental challenge of architectural dissonance. These established systems, often the operational bedrock of an institution, were engineered for stability, durability, and batch-oriented processing cycles. Their design philosophy prioritized transactional integrity within a closed, predictable environment. The introduction of real-time monitoring imposes a completely different paradigm, one that values continuous data streams, low-latency insights, and interoperability with a dynamic, heterogeneous technology ecosystem.

The core hurdle is the reconciliation of these two opposing design principles. It involves more than connecting disparate technologies; it requires creating a functional bridge between deeply ingrained operational philosophies.

The very definition of a legacy system contributes to this complexity. These are not merely old systems; they are systems deeply embedded in critical business processes, often with incomplete or nonexistent documentation and a shrinking pool of available expertise. They represent decades of accumulated business logic, a form of institutional memory encoded in programming languages like COBOL or running on resilient platforms like mainframes. Replacing them wholesale is often untenable due to the immense cost, risk, and potential for operational disruption.

Therefore, integration becomes the only viable path forward. This path, however, is fraught with obstacles that begin at the most basic levels of data structure and communication protocols.

The foundational challenge lies in making systems designed for periodic, predictable finality engage with a world that demands constant, instantaneous awareness.

The Impedance Mismatch in System Design

At the heart of the integration challenge is a concept analogous to impedance mismatch in electronics. Legacy systems operate with high impedance to change; they are built to resist fluctuations and ensure consistency. Modern real-time monitoring tools, conversely, are low-impedance systems, designed to be agile, adaptable, and responsive to high-frequency data.

Connecting these two systems directly results in a significant loss of signal fidelity and performance. The legacy system cannot service the rapid data requests of the monitoring platform without compromising its own stability, while the monitoring platform is starved of the timely data it needs to be effective.

This mismatch manifests in several key areas:

- Data Pacing: Legacy systems are often designed to provide data in large, infrequent batches. Real-time systems expect a continuous, granular stream. Forcing a batch-oriented system to produce a continuous stream can overwhelm its resources and lead to performance degradation.

- Transactional Integrity vs. Observability: The primary directive of many legacy systems is to protect the integrity of a transaction. Monitoring systems, on the other hand, prioritize observability, which may require access to data while a transaction is still in flight. This creates a conflict between the core design principles of the two systems.

- Synchronous vs. Asynchronous Communication: Many older systems rely on synchronous communication, where the caller sends a request and blocks until the response arrives. Modern, distributed systems heavily favor asynchronous communication to improve scalability and resilience. Bridging these two communication styles is a non-trivial architectural challenge.
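The pacing and communication mismatches above can be illustrated with a small bridge between a blocking, batch-style call and an asynchronous consumer. This is a minimal sketch, not a production design: `fetch_batch_blocking` is a hypothetical stand-in for a synchronous legacy call, and the queue size and batch shape are arbitrary assumptions. The bounded queue is the important part, since it makes the producer wait when the consumer falls behind instead of issuing more legacy calls.

```python
import asyncio
import time
from typing import Optional


def fetch_batch_blocking(batch_no: int, size: int = 3) -> list[dict]:
    """Hypothetical stand-in for a synchronous legacy call returning one batch."""
    time.sleep(0.01)  # simulate the legacy system's response time
    return [{"batch": batch_no, "seq": i} for i in range(size)]


async def produce(queue: asyncio.Queue, batches: int) -> None:
    for batch_no in range(batches):
        # Run the blocking call in a worker thread so the event loop stays free.
        records = await asyncio.to_thread(fetch_batch_blocking, batch_no)
        for record in records:
            # put() suspends while the queue is full, so the next legacy call
            # is not issued until the consumer has drained some records.
            await queue.put(record)
    await queue.put(None)  # sentinel: no more records


async def consume(queue: asyncio.Queue, sink: list[dict]) -> None:
    while True:
        record: Optional[dict] = await queue.get()
        if record is None:
            break
        sink.append(record)  # a real consumer would forward to the monitoring platform


async def bridge(batches: int, queue_size: int = 2) -> list[dict]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=queue_size)
    sink: list[dict] = []
    await asyncio.gather(produce(queue, batches), consume(queue, sink))
    return sink


def run_bridge(batches: int) -> list[dict]:
    """Synchronous entry point that runs the bridge to completion."""
    return asyncio.run(bridge(batches))
```

Calling `run_bridge(2)` returns the six records from two batches in their original order. In practice the queue would more likely be a message broker, but the back-pressure principle is the same.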

Unearthing the Data Silos

A primary consequence of legacy architectures is the creation of deeply entrenched data silos. These systems were often developed to serve a specific departmental function, with little consideration for data sharing across the organization. As a result, critical data becomes trapped within proprietary formats and inaccessible databases. Integrating for real-time monitoring requires breaking down these silos, which is as much an archaeological endeavor as it is a technical one.

It involves reverse-engineering data formats, deciphering undocumented business logic, and mapping data flows that may not have been fully understood for years. This process is resource-intensive and carries the inherent risk of misinterpreting data, which could lead to flawed insights from the monitoring system.

Strategy

Addressing the technological hurdles of legacy system integration requires a strategic framework that acknowledges the inherent constraints of the existing infrastructure while pursuing the goal of real-time visibility. A direct, “big bang” integration is seldom feasible. Instead, a phased, multi-layered approach is necessary, focusing on creating intermediate layers of abstraction that decouple the legacy systems from the modern monitoring platforms.

This strategy mitigates risk, manages cost, and allows for incremental modernization without disrupting mission-critical operations. The objective is to build a resilient and adaptable data pipeline that can translate and transport data from the legacy core to the modern analytical edge.

The selection of an appropriate integration strategy depends on a careful analysis of the legacy system’s architecture, the real-time data requirements, and the organization’s tolerance for risk and expenditure. There is no single solution; the optimal approach is a composite of several techniques, tailored to the specific operational context. These strategies range from non-invasive data capture methods to the development of sophisticated middleware and API layers. Each strategy presents a different set of trade-offs between performance, complexity, and cost.

Effective strategy is not about replacing the old with the new, but about creating a symbiotic relationship where the stability of the legacy core feeds the agility of the modern monitoring system.

Architectural Patterns for Integration

Several architectural patterns have emerged as effective strategies for bridging the gap between legacy systems and real-time monitoring requirements. The choice of pattern is dictated by factors such as the accessibility of the legacy data, the required data latency, and the desired level of transformation.

- Data Virtualization: This approach involves creating a virtual data layer that provides a unified, real-time view of data from multiple, disparate sources, including legacy systems. The data remains in the source systems, and the virtualization layer handles the querying and aggregation. This is a less invasive approach, as it does not require moving the data, but it can introduce performance bottlenecks if the underlying legacy systems cannot handle the query load.

- Change Data Capture (CDC): CDC is a technique used to monitor and capture data changes in a database and deliver those changes to downstream systems. This is a highly efficient method for real-time integration, as it only deals with the data that has changed, reducing the load on the legacy system. However, implementing CDC can be complex, especially on older database technologies.

- Middleware and Enterprise Service Bus (ESB): Middleware acts as an intermediary, facilitating communication between the legacy system and the monitoring platform. An ESB provides a more robust and scalable solution, offering services like message routing, protocol transformation, and data enrichment. This approach provides a high degree of decoupling but also introduces an additional layer of complexity and potential point of failure.

- API Gateway: In this pattern, an API gateway is placed in front of the legacy system, exposing its functionality through a modern, well-defined API (e.g. REST). This is an effective way to modernize access to legacy systems, but it requires development effort to create and maintain the APIs. It may also not be suitable for high-volume, real-time data streaming without careful design.
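To make the idea of change events concrete, the sketch below derives insert, update, and delete events by comparing two snapshots of a table keyed by primary key. This is a deliberate simplification and an assumption of this example: production CDC usually reads the database's transaction log, whereas snapshot comparison still has to read the full table and so does not share CDC's low-load property. The event shape (`op`, `key`, `before`, `after`) is hypothetical.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def diff_snapshots(previous: Dict[str, Row], current: Dict[str, Row]) -> List[dict]:
    """Derive change events by comparing two keyed snapshots of the same table."""
    events: List[dict] = []
    for key, row in current.items():
        if key not in previous:
            events.append({"op": "insert", "key": key, "after": row})
        elif previous[key] != row:
            events.append(
                {"op": "update", "key": key, "before": previous[key], "after": row}
            )
    for key, row in previous.items():
        if key not in current:
            events.append({"op": "delete", "key": key, "before": row})
    return events


# Usage: only the rows that differ produce events.
before = {"1": {"name": "Ann", "tier": "A"}, "2": {"name": "Bob", "tier": "B"}}
after = {"1": {"name": "Ann", "tier": "B"}, "3": {"name": "Cy", "tier": "A"}}
changes = diff_snapshots(before, after)
```

Downstream systems then receive three small events instead of a full reload, which is the property that makes CDC attractive for real-time monitoring.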

Comparative Analysis of Integration Strategies

The selection of an integration strategy involves a careful consideration of various factors. The following table provides a comparative analysis of the primary architectural patterns.

| Strategy | Primary Use Case | Latency | Invasiveness | Complexity |
|---|---|---|---|---|
| Data Virtualization | Unified, on-demand data access across multiple systems. | Near real-time to high, depending on source system performance. | Low | Moderate |
| Change Data Capture (CDC) | Real-time replication of database transactions. | Very Low | Moderate to High | High |
| Middleware/ESB | Complex integrations requiring protocol and data format translation. | Low to Moderate | Moderate | High |
| API Gateway | Exposing legacy functionality as modern, managed services. | Low | Moderate | Moderate |

Risk Mitigation and Management

Every legacy integration project carries significant risk. These risks extend beyond the technical challenges into the operational, financial, and security domains. A robust strategic plan must include a comprehensive risk mitigation framework.

- Operational Risk: The primary operational risk is the potential for the integration process to disrupt the functioning of the legacy system. This can be mitigated through phased rollouts, extensive testing in a sandboxed environment, and the use of integration patterns that minimize the load on the legacy system.

- Security Risk: Opening up legacy systems, which may lack modern security features, to a network can create new vulnerabilities. A defense-in-depth strategy is required, incorporating measures such as network segmentation, intrusion detection systems, and the use of an API gateway to enforce security policies.

- Financial Risk: Legacy integration projects can be costly and prone to scope creep. A clear business case, tight project management, and a focus on delivering incremental value can help to control costs. It is also crucial to factor in the long-term maintenance costs of the integration solution itself.
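One way to limit the load an integration places on the legacy system is to throttle outbound requests. The sketch below uses a token bucket, a common rate-limiting technique that the text above does not prescribe; it is offered only as an illustration. The class name, the rate, and the capacity are assumptions, and the injectable `clock` parameter exists purely so the behavior can be checked without real waiting.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow at most `rate_per_sec` requests on average, with bursts up to `capacity`."""

    def __init__(
        self,
        rate_per_sec: float,
        capacity: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last = clock()

    def try_acquire(self) -> bool:
        """Return True if a request may be sent to the legacy system now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Usage with a fake clock: two requests pass, the third is refused until time advances.
fake_now = [0.0]
bucket = TokenBucket(rate_per_sec=2.0, capacity=2, clock=lambda: fake_now[0])
first, second, third = bucket.try_acquire(), bucket.try_acquire(), bucket.try_acquire()
fake_now[0] = 0.5
fourth = bucket.try_acquire()
```

A caller that receives `False` would typically wait or queue the request rather than retry immediately.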

Execution

The execution phase of a legacy system integration project is where the strategic plans confront the granular realities of the technology. This is a phase that demands meticulous planning, deep technical expertise, and a disciplined, iterative approach. Success hinges on the ability to manage a complex interplay of data formats, communication protocols, and performance constraints.

The execution must be approached as a series of well-defined, manageable work packages, each with its own testing and validation criteria. This ensures that progress is measurable and that any issues are identified and resolved early in the process.

A critical aspect of the execution is the establishment of a dedicated, cross-functional team. This team should include not only modern software engineers and data architects but also, crucially, individuals with deep knowledge of the legacy system. This legacy expertise is often the most difficult resource to secure, yet it is indispensable for navigating the undocumented features and idiosyncrasies of the older technology. The team’s first task is often one of discovery and documentation, creating a detailed map of the legacy system’s data structures, business logic, and operational characteristics.

In the unforgiving environment of legacy integration, success is a function of precision, patience, and a profound respect for the complexity of the existing system.

The Data Transformation Pipeline

The core of the execution phase is the construction of a data transformation pipeline. This pipeline is responsible for extracting data from the legacy system, transforming it into a modern, usable format, and loading it into the real-time monitoring platform. Each stage of this pipeline presents its own set of challenges.

Data Extraction

Extracting data from a legacy system can be a formidable challenge. The methods available depend heavily on the underlying technology of the system.

- Database Connectors: If the legacy system uses a database that is still supported, it may be possible to use a standard connector (e.g. JDBC, ODBC) to access the data. However, direct database access can put a significant load on the legacy system and must be carefully managed.

- File-Based Extraction: Many older systems were designed to output data to flat files (e.g. EBCDIC encoded, with COBOL copybook layouts). This requires a process to transfer these files and a parser to interpret their structure and content.

- Screen Scraping: In the most challenging cases, where no other method is available, it may be necessary to use screen scraping techniques. This involves emulating a terminal user to navigate the legacy application’s interface and extract data from the screen. This method is brittle and should be considered a last resort.
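For file-based extraction, a parser for fixed-width EBCDIC records might look like the sketch below. The two-field layout is hypothetical, and the code assumes code page 037 (Python's built-in `cp037` codec) and text-only fields; real extracts may use a different EBCDIC code page, and binary or packed fields would need separate handling before any text decoding.

```python
from typing import List, Tuple

# Hypothetical layout: (field name, width in bytes). A real layout would come
# from the system's copybook.
FIELDS: List[Tuple[str, int]] = [("cust_id", 8), ("cust_name", 20)]
RECORD_LENGTH = sum(width for _, width in FIELDS)


def parse_record(raw: bytes, encoding: str = "cp037") -> dict:
    """Decode one fixed-width EBCDIC record into a dict of trimmed strings."""
    if len(raw) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} bytes, got {len(raw)}")
    # cp037 is a single-byte encoding, so byte offsets equal character offsets.
    text = raw.decode(encoding)
    record = {}
    offset = 0
    for name, width in FIELDS:
        record[name] = text[offset:offset + width].rstrip()
        offset += width
    return record


def parse_file_bytes(data: bytes) -> List[dict]:
    """Split a flat file's contents into records and parse each one."""
    if len(data) % RECORD_LENGTH != 0:
        raise ValueError("file length is not a multiple of the record length")
    return [
        parse_record(data[i:i + RECORD_LENGTH])
        for i in range(0, len(data), RECORD_LENGTH)
    ]


# Usage: build two sample records in EBCDIC and parse them back.
sample = (
    "00001234ACME LTD".ljust(RECORD_LENGTH) + "00005678GLOBEX".ljust(RECORD_LENGTH)
).encode("cp037")
rows = parse_file_bytes(sample)
```

Rejecting records of unexpected length early is a cheap guard against silently misaligned fields, which is a common failure mode with fixed-width files.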

Data Transformation and Enrichment

Once the data is extracted, it must be transformed into a consistent, modern format like JSON or Avro. This transformation process is often complex, involving not just a change in format but also in data semantics.

The following table illustrates a simplified example of data mapping from a legacy format to a modern JSON structure.

| Legacy Field (COBOL Copybook) | Data Type | Modern Field (JSON) | Transformation Logic |
|---|---|---|---|
| CUST-ID | PIC 9(8) | customerId | Convert from numeric to string. |
| CUST-NAME | PIC X(40) | customerName | Trim trailing whitespace. |
| LAST-ORD-DT | PIC 9(8) COMP-3 | lastOrderDate | Convert from packed decimal (YYYYMMDD) to ISO 8601 format. |
| ORD-TOTAL | PIC S9(7)V99 COMP-3 | orderTotal | Convert from packed decimal to a decimal number with two implied decimal places; avoid binary floating point for monetary amounts. |
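The mapping in the table can be sketched in code as follows. Several details are assumptions of this example rather than facts from the source: the four fields are taken to be contiguous in the order shown (8 + 40 + 5 + 5 = 58 bytes), text fields use code page 037, leading zeros in the customer ID are preserved, and the order total is emitted as a decimal string so that no precision is lost to binary floating point. The function names are hypothetical.

```python
import json
from datetime import datetime
from decimal import Decimal


def unpack_comp3(raw: bytes) -> int:
    """Decode a COMP-3 (packed decimal) field: two digits per byte, sign in the last nibble."""
    digits = []
    for byte in raw[:-1]:
        digits.extend((byte >> 4, byte & 0x0F))
    digits.append(raw[-1] >> 4)
    sign_nibble = raw[-1] & 0x0F
    if any(d > 9 for d in digits) or sign_nibble < 0x0A:
        raise ValueError("not a valid packed decimal field")
    value = int("".join(str(d) for d in digits))
    # 0xD (and the less common 0xB) mark a negative value; 0xC and 0xF are non-negative.
    return -value if sign_nibble in (0x0B, 0x0D) else value


def convert_record(raw: bytes) -> dict:
    """Convert one 58-byte legacy record into the modern field names from the table."""
    if len(raw) != 58:
        raise ValueError(f"expected 58 bytes, got {len(raw)}")
    cust_id = raw[0:8].decode("cp037")               # PIC 9(8), zoned digits
    cust_name = raw[8:48].decode("cp037").rstrip()   # PIC X(40)
    order_date = unpack_comp3(raw[48:53])            # PIC 9(8) COMP-3, YYYYMMDD
    order_total = unpack_comp3(raw[53:58])           # PIC S9(7)V99 COMP-3
    iso_date = datetime.strptime(f"{order_date:08d}", "%Y%m%d").date().isoformat()
    return {
        "customerId": cust_id,
        "customerName": cust_name,
        "lastOrderDate": iso_date,
        # V99 means two implied decimal places, so shift the integer by 10^-2.
        "orderTotal": str(Decimal(order_total).scaleb(-2)),
    }


# Usage: assemble a sample record and render it as JSON.
sample_record = (
    "00001234".encode("cp037")
    + "ACME LTD".ljust(40).encode("cp037")
    + bytes.fromhex("020240315F")   # 20240315, unsigned
    + bytes.fromhex("000123456C")   # +1234.56
)
converted = convert_record(sample_record)
as_json = json.dumps(converted)
```

Validating the digit and sign nibbles before converting helps surface misaligned offsets early, since a shifted slice rarely forms a valid packed decimal.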

Beyond simple format conversion, this stage may also involve data enrichment, where the legacy data is combined with data from other sources to provide a more complete picture for the monitoring system.

A Phased Implementation Protocol

A phased approach is crucial for managing the complexity and risk of the integration project. The following protocol outlines a structured, iterative methodology for execution.

- Phase 1: Discovery and Scoping
  - Conduct a thorough analysis of the legacy system to identify data sources, business logic, and performance characteristics.
  - Define the specific data requirements for the real-time monitoring system.
  - Select the appropriate integration strategy and architectural pattern.
  - Develop a detailed project plan and risk assessment.

- Phase 2: Pilot Implementation
  - Select a small, non-critical subset of data to be integrated.
  - Develop and test the data extraction, transformation, and loading (ETL) pipeline for this subset.
  - Deploy the pilot solution in a pre-production environment.
  - Validate the performance and accuracy of the integrated data.

- Phase 3: Incremental Rollout
  - Expand the integration to include additional data sets, prioritizing based on business value.
  - Iteratively refine and optimize the ETL pipeline based on the learnings from the pilot.
  - Conduct rigorous performance and stress testing at each stage.
  - Implement a robust monitoring and alerting system for the integration pipeline itself.

- Phase 4: Operationalization and Governance
  - Transition the integration solution to full production.
  - Establish a governance framework for managing the integrated data, including data quality and security policies.
  - Develop a long-term maintenance and support plan for the integration solution.
  - Continuously monitor and optimize the performance of the end-to-end system.
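The accuracy validation called for in the pilot phase can be approached with a reconciliation check that compares what left the legacy system with what arrived in the monitoring platform. The sketch below is one possible form, assuming both sides can be read as lists of dictionaries sharing a unique key field; the function names and report structure are hypothetical.

```python
import hashlib
import json
from typing import Dict, List


def fingerprint(record: dict) -> str:
    """Hash a record's canonical JSON form so content can be compared cheaply."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: List[dict], target: List[dict], key: str) -> Dict[str, list]:
    """Report records that are missing, unexpected, or different in the target."""
    src = {r[key]: fingerprint(r) for r in source}
    tgt = {r[key]: fingerprint(r) for r in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Usage: one record was lost, one was altered, and one appeared from nowhere.
legacy_rows = [
    {"customerId": "001", "orderTotal": "10.00"},
    {"customerId": "002", "orderTotal": "20.00"},
    {"customerId": "003", "orderTotal": "30.00"},
]
monitored_rows = [
    {"customerId": "001", "orderTotal": "10.00"},
    {"customerId": "002", "orderTotal": "25.00"},
    {"customerId": "004", "orderTotal": "40.00"},
]
report = reconcile(legacy_rows, monitored_rows, key="customerId")
```

An empty report on all three lists is the pass condition; running the same check on a schedule in later phases doubles as a basic data quality monitor for the pipeline.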


Reflection

The Systemic View of Integration

The process of integrating legacy systems for real-time monitoring forces a profound re-evaluation of an organization’s information architecture. It moves the conversation beyond a simple technological upgrade and toward a more holistic understanding of how data flows, where value is created, and how operational resilience is maintained. The hurdles encountered are symptoms of a deeper architectural truth: that the systems which have provided stability for decades were built on principles that are fundamentally at odds with the demands of a real-time, data-driven world. Overcoming these hurdles, therefore, is an act of systemic evolution.

The knowledge gained through this process is a strategic asset. It provides a detailed, often painfully acquired, map of the organization’s true operational core. This map can guide future modernization efforts, inform data governance policies, and provide a clearer picture of technological risk.

The ultimate goal is to create a system where the legacy core and the modern analytical layer are not just connected, but are part of a coherent, well-understood whole. This is the foundation of a truly adaptive and resilient enterprise.
