Concept

The calibration of a randomized algorithm within a financial execution system is an act of architectural design. It is the process of defining the core logic that governs how the system interacts with the market’s complex, stochastic environment. An institution’s ability to achieve its strategic objectives is directly linked to the precision with which this calibration reflects a deep understanding of the market’s microstructure and the firm’s own risk appetite.

The process involves a series of fundamental trade-offs, each representing a decision point in the design of an optimal execution protocol. These are not mere technical settings; they are deliberate choices that balance competing priorities, shaping the character and performance of the trading entity in the electronic marketplace.

At the heart of this calibration lies the elemental tension between exploration and exploitation. Exploration is the algorithm’s mandate to search the vast, often opaque, landscape of available liquidity. This includes probing dark pools, soliciting quotes from multiple counterparties, and discovering transient order book depth. Exploitation, conversely, is the directive to act decisively on known, high-quality opportunities, which means executing against visible, stable liquidity with minimal delay.

A system calibrated heavily towards exploration may discover superior pricing at the cost of time and potential information leakage. A system biased towards exploitation will be swift but may consistently miss opportunities for price improvement residing in undiscovered liquidity pockets. The art of calibration is the art of balancing this dynamic tension to match the specific goals of a given trading strategy.
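The exploration and exploitation balance described above can be sketched with an epsilon-greedy rule, a standard randomized policy chosen here purely for illustration. The venue names, the price-improvement figures, and the `epsilon` parameter are all hypothetical; a production router would weigh many more signals.

```python
import random


def choose_venue(avg_improvement, epsilon, rng):
    """Explore a random venue with probability epsilon, otherwise exploit."""
    venues = list(avg_improvement)
    if rng.random() < epsilon:
        return rng.choice(venues)  # explore: sample any venue
    # exploit: pick the venue with the best running estimate so far
    return max(venues, key=avg_improvement.get)


def run_session(true_improvement_bps, epsilon, n_orders, seed=0):
    """Route n_orders child orders and learn each venue's average improvement.

    true_improvement_bps is a hypothetical ground truth used only to
    simulate noisy observations; a live system would observe real fills.
    """
    rng = random.Random(seed)
    counts = {venue: 0 for venue in true_improvement_bps}
    avg = {venue: 0.0 for venue in true_improvement_bps}
    for _ in range(n_orders):
        venue = choose_venue(avg, epsilon, rng)
        observed = rng.gauss(true_improvement_bps[venue], 1.0)  # noisy outcome
        counts[venue] += 1
        avg[venue] += (observed - avg[venue]) / counts[venue]  # running mean
    return counts, avg


if __name__ == "__main__":
    venues = {"lit": 0.5, "dark_a": 1.5, "dark_b": 1.0}  # assumed values, in bps
    counts, avg = run_session(venues, epsilon=0.1, n_orders=500, seed=42)
    print(counts)
    print({venue: round(value, 2) for venue, value in avg.items()})
```

Raising `epsilon` spends more orders on discovery; lowering it commits earlier to whatever currently looks best, which mirrors the trade-off in the text.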

The Triad of Scarcity

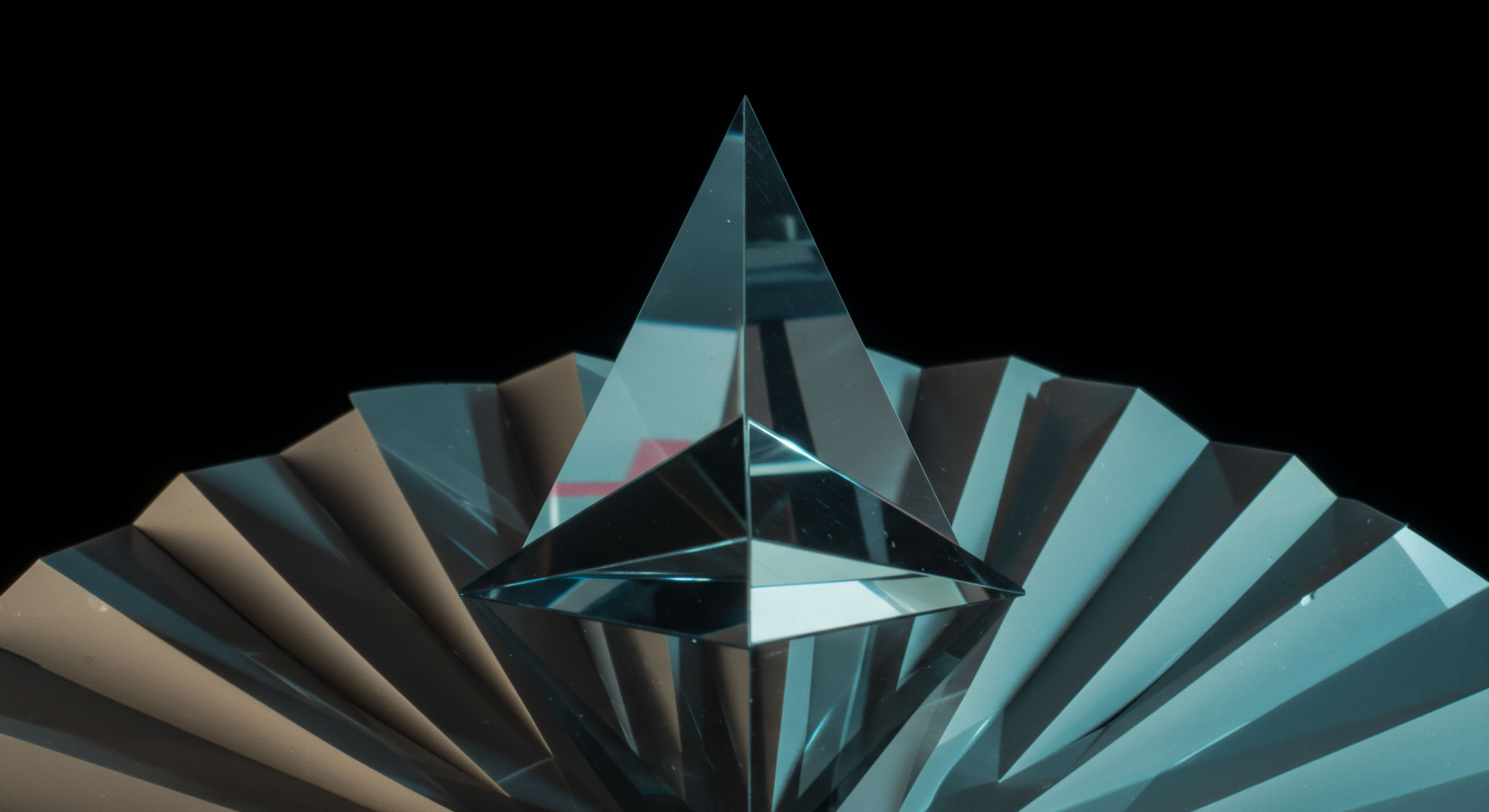

Every calibration decision is made under the constraints of three finite resources: statistical certainty, computational capacity, and time. These three elements form a triangle of trade-offs, where optimizing for one invariably compromises another. Achieving a high degree of statistical certainty about an algorithm’s performance requires extensive backtesting and simulation across a multitude of market scenarios. This process consumes immense computational resources and time.

Conversely, a rapid calibration process, designed to adapt quickly to changing market regimes, will operate with a smaller data set, thus accepting a lower degree of statistical confidence in its output. The system architect must decide the appropriate balance based on the strategic importance and duration of the trading mandate.
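A rough way to quantify this trade-off, under the simplifying assumption that calibration estimates an average execution cost $\bar{c}_n$ from $n$ independent simulated runs with per-run standard deviation $\sigma$ (symbols introduced here for illustration, not taken from any particular system):

$$
\mathrm{SE}(\bar{c}_n) = \frac{\sigma}{\sqrt{n}}, \qquad \text{95\% half-width} \approx 1.96\,\frac{\sigma}{\sqrt{n}}
$$

Under that assumption, halving the width of the confidence interval takes roughly four times as many simulated runs, which is why statistical certainty is so expensive in compute and time.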

Calibrating a randomized algorithm is the deliberate balancing of computational cost, statistical confidence, and execution speed to achieve a specific strategic outcome in financial markets.

This resource triad dictates the practical limits of algorithmic performance. For instance, a high-frequency strategy must prioritize time above all else. Its calibration will favor simpler models and heuristics that can be computed with minimal latency, accepting that this speed comes at the expense of a more comprehensive statistical analysis.

A long-term portfolio rebalancing algorithm, on the other hand, can afford to expend significant computational resources over hours or even days to find a mathematically robust execution schedule. Its calibration prioritizes statistical certainty and cost minimization over the immediacy of execution. The choice is a direct reflection of the strategy’s economic intent.

What Defines Algorithmic Efficacy?

The very definition of a “good” outcome is itself a calibration choice. Is the primary objective to minimize slippage against an arrival price? Or is it to minimize market impact and preserve the secrecy of the trading intention? These two goals are often in opposition.

An aggressive algorithm that consumes liquidity quickly will minimize arrival price slippage but generate significant market impact, signaling its intent to the wider market. A passive algorithm that works an order slowly will have low market impact but is exposed to adverse price movements during its longer execution window. The calibration process must therefore begin with a clear, quantitative definition of the objective function, which itself represents a strategic trade-off between competing measures of execution quality. This initial decision sets the entire direction for the subsequent technical and quantitative work.

The process of calibration is therefore a foundational act of translating strategic intent into operational reality. It is where abstract goals like “alpha preservation” and “risk management” are encoded into the concrete logic of an execution system. The trade-offs are not problems to be solved but parameters to be managed, reflecting a sophisticated understanding of the institution’s place within the market ecosystem.

Strategy

Strategic calibration of a randomized algorithm moves beyond foundational concepts to the architectural design of its interaction with the market. The core task is to construct a policy that intelligently navigates the primary trade-offs in a way that aligns with a specific investment thesis. This requires a framework for thinking about how different calibration choices produce distinct behavioral patterns in the algorithm, and how those patterns serve or undermine the overarching strategy. The design process involves making deliberate choices about the algorithm’s autonomy, its statistical underpinnings, and its operational scope.

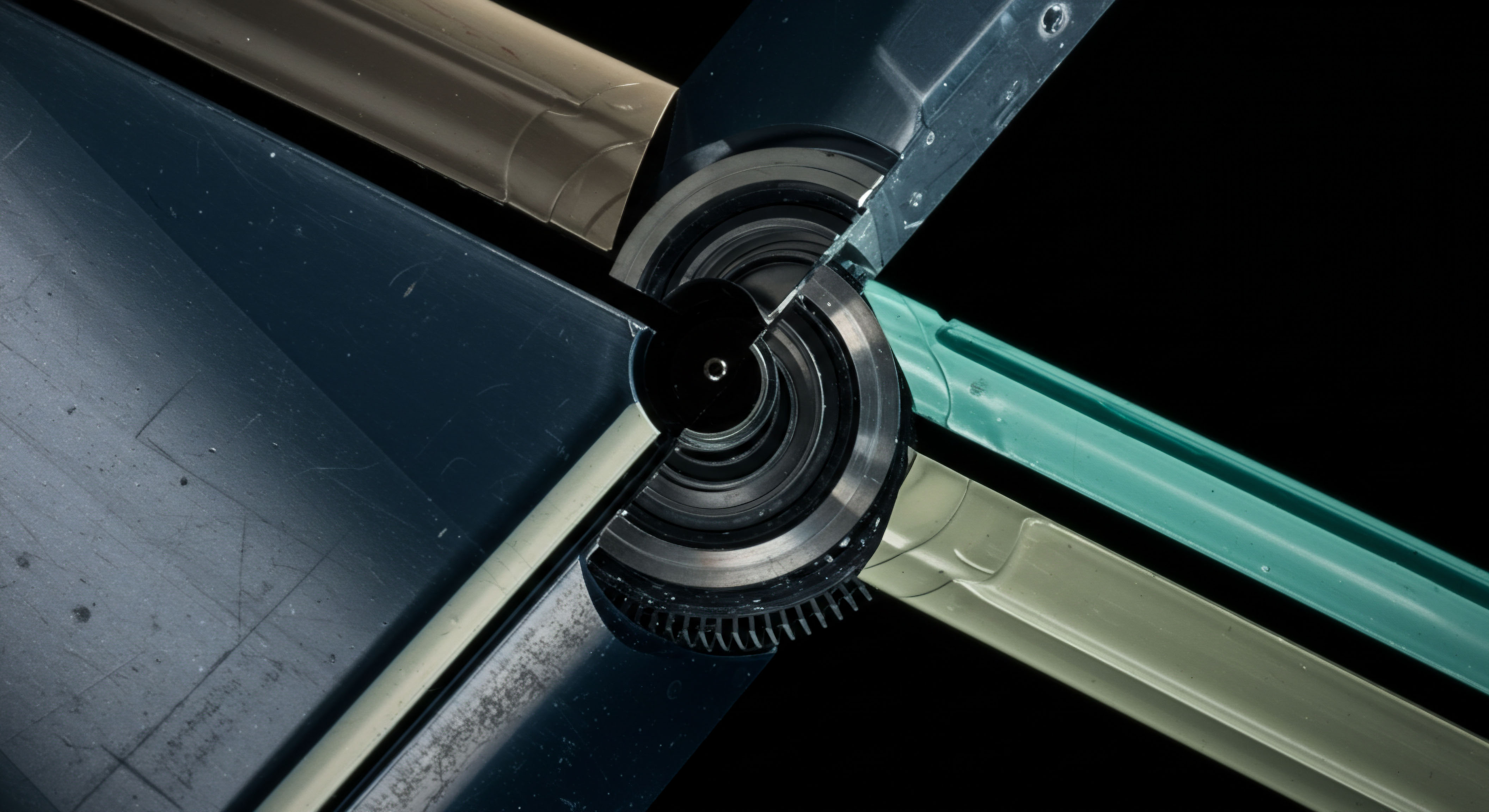

The Performance versus Control Dilemma

A primary strategic decision is determining the level of human oversight versus full automation. This is the performance-control trade-off. An algorithm given full autonomy, based on a robust calibration, will in many cases outperform a human trader burdened by behavioral biases and emotional responses. The fully automated system can execute a statistically optimized strategy with perfect discipline, 24/7.

This approach seeks to maximize theoretical performance by removing the potential for human error from the execution loop. The strategic bet is that the initial calibration is sound and that the market conditions will remain within the parameters for which the algorithm was optimized.

The alternative involves designing the system with a “human in the loop.” In this model, the algorithm acts as a sophisticated co-pilot, managing the minute-to-minute execution details while allowing a human trader to intervene at critical decision points. This could involve approving large “parent” orders, adjusting the algorithm’s aggression level in real-time in response to unexpected news, or manually overriding a decision that appears contextually unsound. This strategy sacrifices some measure of theoretical optimality to gain a layer of qualitative, experience-based risk management.

It acknowledges that no model can capture all possible market states and that human intuition can be valuable in navigating unforeseen events. The calibration here is dual-layered: the algorithm itself is tuned for performance, while the interface and alert systems are tuned for effective human-machine collaboration.
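One way to picture the human-in-the-loop layer is as an escalation gate in front of the algorithm. The sketch below is a minimal illustration only; the `OversightPolicy` fields and the threshold values are hypothetical and would themselves be calibration parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OversightPolicy:
    """Hypothetical escalation thresholds for a human-in-the-loop desk."""
    max_auto_notional: float        # largest parent order handled without sign-off
    max_auto_volatility_bps: float  # volatility above which a trader is alerted


def requires_human_approval(notional, volatility_bps, policy):
    """Return True when the order should be routed to a trader for review."""
    too_large = notional > policy.max_auto_notional
    too_volatile = volatility_bps > policy.max_auto_volatility_bps
    return too_large or too_volatile


if __name__ == "__main__":
    policy = OversightPolicy(max_auto_notional=5_000_000, max_auto_volatility_bps=150)
    print(requires_human_approval(1_000_000, 80, policy))    # small, calm market
    print(requires_human_approval(20_000_000, 80, policy))   # large parent order
```

Tightening the thresholds moves the system toward human control; loosening them moves it toward full automation.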

The strategic choice between full automation and human oversight is a decision about where to locate the ultimate responsibility for risk management within the execution workflow.

The following table outlines the strategic considerations inherent in this choice:

| Framework | Primary Objective | Core Strength | Inherent Risk | Ideal Use Case |
|---|---|---|---|---|
| Full Automation | Maximize theoretical execution quality and eliminate behavioral bias. | Disciplined, high-speed, and consistent application of a statistically derived model. | Model risk; the potential for large losses if the market regime shifts beyond the calibration’s assumptions. | High-frequency trading, systematic strategies in liquid markets, and large-scale portfolio rebalancing. |
| Human-in-the-Loop | Blend quantitative rigor with qualitative human oversight for robust risk management. | Adaptability to unforeseen events and contextual market intelligence. | Sub-optimal execution due to human intervention, behavioral biases, or slower reaction times. | Trading in illiquid or volatile assets, large block trades, and strategies that are sensitive to news flow. |

Statistical Fidelity and Computational Budget

Another critical strategic axis is the trade-off between the statistical complexity of the algorithm’s internal model and the computational resources required to run it. A high-fidelity model might incorporate a vast number of factors, use sophisticated machine learning techniques to predict liquidity, and run complex simulations to determine its next action. Such a model offers the promise of a more accurate and nuanced view of the market, potentially leading to superior execution.

However, this accuracy comes at a significant cost in terms of computational power (CPU cycles, memory) and latency. The time it takes to compute the “optimal” action might be so long that the market has already moved, rendering the action obsolete.

A lower-fidelity model, by contrast, might use a simpler set of rules or a more constrained statistical approach, such as a low-rank approximation of a covariance matrix. This model is computationally “cheaper” and therefore faster. It can react more quickly to changing market data. The strategic trade-off is clear: speed and efficiency are gained by sacrificing some degree of theoretical accuracy.

For certain strategies, particularly those that rely on speed, this is an advantageous trade. For small datasets, a simpler model may even be preferable as it is less likely to “overfit” the limited data and may produce more robust out-of-sample performance. The strategy of choosing a model’s complexity is an exercise in resource allocation, where the resource is computational power and the goal is to “purchase” the right amount of accuracy for the task at hand.

How Does Generality Impact Performance?

The final strategic dimension is the trade-off between creating a single, general-purpose algorithm versus a suite of specialized, highly-tuned algorithms. A generalist algorithm is designed to perform reasonably well across a wide range of assets and market conditions. Its calibration is robust, aiming for solid, average performance everywhere.

This approach simplifies development, maintenance, and user training. It provides a reliable workhorse for a trading desk.

The specialist approach involves creating a collection of distinct algorithms, each calibrated for a specific purpose. One might be tuned for illiquid small-cap stocks, another for volatile cryptocurrency markets, and a third for executing large, patient trades in government bonds. Each of these specialist tools can achieve a level of performance in its niche that the generalist algorithm cannot match.

The cost of this approach is increased complexity in development, infrastructure, and the operational burden on traders to select the right tool for the job. The strategic decision here is whether the operational complexity of managing a specialized toolkit is justified by the performance gains in specific, critical areas of the firm’s business.

- Generalist Algorithm Strategy: This approach prioritizes operational simplicity and robustness. The goal is to build a single, reliable execution tool that can be deployed widely with minimal friction. The calibration focuses on achieving good, consistent performance across a diverse set of scenarios, accepting that it will not be the absolute best in any single one.

- Specialist Algorithm Strategy: This approach prioritizes peak performance in specific, high-value domains. The firm invests in developing and maintaining a portfolio of algorithms, each a master of its particular niche. The calibration for each is highly specific and aggressive, tuned to extract maximum value from a narrow set of market conditions.

Execution

The execution of algorithmic calibration is the translation of high-level strategy into the granular, quantitative mechanics of the system. This is where the architectural vision is implemented in code and data. It is a deeply empirical process of hypothesis, testing, and refinement, grounded in the rigorous analysis of market data. The process requires a robust infrastructure and a clear, disciplined methodology for navigating the parameter space of the algorithm to arrive at a configuration that is not just theoretically sound, but operationally resilient.

Defining and Quantifying the Objective Function

The first and most critical step in execution is to create a precise, mathematical definition of “success.” This is the algorithm’s objective function. It is a formula that assigns a score to any given execution outcome, allowing for the comparison of different calibration settings. A poorly defined objective function will lead the calibration process astray, optimizing for a metric that does not truly reflect the firm’s strategic goals. The challenge lies in the fact that different measures of execution quality are often in conflict.

Consider the following common metrics:

- Implementation Shortfall: This measures the total cost of execution relative to the decision price (the price at the moment the decision to trade was made). It is a comprehensive measure that captures both explicit costs (commissions) and implicit costs (slippage, market impact). Optimizing for this is often the ultimate goal.

- Arrival Price Slippage: This measures the difference between the average execution price and the price at the time the order was first sent to the market. An aggressive calibration that executes quickly will typically minimize this, but at the potential cost of higher market impact.

- Market Impact: This quantifies how much the algorithm’s own trading activity moves the price of the asset. A low-impact calibration is stealthy but may take longer to execute, exposing the order to adverse price movements (timing risk).

- Information Leakage: This is a more subtle concept related to how much the algorithm’s actions reveal the trader’s underlying intent. An algorithm that consistently probes the same dark pools in the same sequence, for example, may be leaking information that can be exploited by other market participants.
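The first two metrics in the list above can be computed directly from fills. The following is a simplified sketch: it assumes the order is fully filled (so the opportunity cost of unexecuted shares is ignored), and the `Fill` type, the sign convention, and the sample numbers are illustrative choices rather than a standard API.

```python
from dataclasses import dataclass


@dataclass
class Fill:
    price: float
    quantity: float


def vwap(fills):
    """Quantity-weighted average execution price."""
    total_qty = sum(f.quantity for f in fills)
    if total_qty <= 0:
        raise ValueError("fills must have positive total quantity")
    return sum(f.price * f.quantity for f in fills) / total_qty


def slippage_bps(fills, benchmark_price, side):
    """Signed cost versus a benchmark, in basis points.

    side is +1 for a buy and -1 for a sell; a positive result means the
    execution was worse than the benchmark.
    """
    return side * (vwap(fills) - benchmark_price) / benchmark_price * 1e4


def implementation_shortfall_bps(fills, decision_price, side, fees):
    """Slippage versus the decision price plus explicit fees, in basis points."""
    notional = decision_price * sum(f.quantity for f in fills)
    return slippage_bps(fills, decision_price, side) + fees / notional * 1e4


if __name__ == "__main__":
    fills = [Fill(100.10, 600), Fill(100.20, 400)]  # hypothetical buy fills
    print(slippage_bps(fills, benchmark_price=100.05, side=+1))  # vs arrival price
    print(implementation_shortfall_bps(fills, decision_price=100.00, side=+1, fees=50.0))
```

Using the arrival price as the benchmark gives arrival price slippage; using the decision price and adding fees gives this simplified implementation shortfall.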

The execution of calibration requires combining these competing metrics into a single, weighted objective function. For example, the function might be Cost = w1 × (Market Impact) + w2 × (Timing Risk). The weights, w1 and w2, are themselves critical calibration parameters that reflect the firm’s risk tolerance.

A firm highly sensitive to signaling risk would assign a high weight to market impact. This process of defining and weighting the objective function is a core part of the execution phase.
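The effect of the weights can be seen in a small sketch of the weighted cost described above. The two candidate calibrations and their impact and timing-risk figures are invented for illustration; in practice they would come from backtests.

```python
def execution_cost(market_impact_bps, timing_risk_bps, w_impact, w_timing):
    """Weighted objective: lower is better."""
    return w_impact * market_impact_bps + w_timing * timing_risk_bps


# Hypothetical (market impact, timing risk) estimates in basis points.
CANDIDATES = {
    "aggressive": (8.0, 2.0),  # trades fast: high impact, low timing risk
    "passive": (2.0, 6.0),     # trades slowly: low impact, high timing risk
}


def best_candidate(w_impact, w_timing):
    """Return the calibration name with the lowest weighted cost."""
    return min(
        CANDIDATES,
        key=lambda name: execution_cost(*CANDIDATES[name], w_impact, w_timing),
    )


if __name__ == "__main__":
    print(best_candidate(w_impact=0.8, w_timing=0.2))  # impact-sensitive firm
    print(best_candidate(w_impact=0.2, w_timing=0.8))  # timing-sensitive firm
```

With the same candidate measurements, changing only the weights flips which calibration wins, which is why the weights deserve the same scrutiny as any other parameter.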

Parameter Space and Search Heuristics

Once the objective is defined, the next step is to systematically test different combinations of the algorithm’s internal parameters to find the set that optimizes the objective function. The “parameter space” is the multi-dimensional landscape of all possible settings. For even a moderately complex algorithm, this space can be enormous.

For example, even a simple TWAP (Time-Weighted Average Price) algorithm, when run with a volume participation limit, might have parameters like:

- Participation Rate: What percentage of the market volume is the algorithm allowed to represent?

- Aggression Level: How willing is the algorithm to cross the spread to get a trade done quickly?

- Look-back Window: How much historical data is used to estimate volume and volatility?

Searching this space exhaustively (a “grid search”) is often computationally infeasible, because the number of grid points grows exponentially with the number of parameters. Therefore, more sophisticated search heuristics are employed. These can include randomized search, which samples points from the parameter space at random, or more advanced methods like Bayesian Optimization.

Bayesian Optimization builds a probabilistic model of the objective function and uses it to intelligently select the next set of parameters to test, focusing on areas of the parameter space that are most likely to yield performance improvements. The choice of search heuristic is itself a trade-off between computational cost and the thoroughness of the search.
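Randomized search, the simpler of the two heuristics mentioned above, fits in a few lines. This is a minimal sketch: `toy_objective` is a made-up stand-in for a full backtest score, and the parameter names and bounds are assumptions chosen to echo the example parameters.

```python
import random


def toy_objective(participation_rate, aggression):
    """Hypothetical stand-in for a backtest score (lower is better)."""
    impact = 40.0 * participation_rate ** 2 + 5.0 * aggression
    timing_risk = 1.0 / participation_rate + 4.0 * (1.0 - aggression)
    return impact + timing_risk


def random_search(objective, bounds, n_trials, seed=0):
    """Sample parameter sets uniformly within bounds and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in bounds.items()}
        score = objective(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    bounds = {"participation_rate": (0.01, 0.30), "aggression": (0.0, 1.0)}
    params, score = random_search(toy_objective, bounds, n_trials=200, seed=7)
    print(params, round(score, 3))
```

The `n_trials` budget is the cost-versus-thoroughness dial: each trial would be one backtest in a real setting, so doubling the budget doubles the compute.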

The backtesting environment is the laboratory in which algorithmic strategies are forged, and its fidelity to real-world market conditions determines the ultimate strength of the final product.

What Constitutes a High Fidelity Backtesting Environment?

The search for optimal parameters is conducted within a simulation environment, or backtester. The quality of the calibration is utterly dependent on the quality of this backtester. A low-fidelity backtester that makes naive assumptions will produce a calibration that appears excellent in simulation but fails catastrophically in live trading. Building and maintaining a high-fidelity backtesting environment is a major operational undertaking.

The following table details the essential components of an institutional-grade backtesting system:

| Component | Description | Primary Challenge |
|---|---|---|
| Historical Data | Full depth-of-book, tick-by-tick market data, including all quotes and trades. This data must be timestamped with high precision and be free of errors or gaps. | Storage and processing of terabytes or petabytes of data. Ensuring data quality and correcting for exchange-specific anomalies. |
| Market Impact Model | A model that simulates how the algorithm’s own trades would have affected the market price. A simple backtest assumes infinite liquidity at the best bid/offer, which is dangerously unrealistic. | Creating a realistic impact model is a complex quantitative finance problem. The model must account for factors like trade size, asset volatility, and overall market depth. |
| Latency Simulation | A model of the time delays inherent in the trading system. This includes network latency to the exchange and the internal processing time of the firm’s own software. | Accurately modeling the stochastic nature of network latency. A fixed latency assumption is often insufficient. |
| Fee and Commission Structure | A precise model of all transaction costs, including exchange fees, clearing fees, and regulatory charges. These costs can significantly affect the net performance of a strategy. | Fee structures can be complex and tiered. They can also change over time, requiring the model to be versioned. |
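To illustrate the market impact component, the sketch below replaces the naive "infinite liquidity at the touch" assumption with a square-root impact form, a commonly used empirical shape adopted here as an assumption. The `coefficient` would need to be fitted to the firm's own data, and every input value shown is hypothetical.

```python
import math


def sqrt_impact_bps(order_qty, daily_volume, daily_vol_bps, coefficient=1.0):
    """Estimated impact in bps: coefficient * volatility * sqrt(qty / volume)."""
    if order_qty < 0 or daily_volume <= 0:
        raise ValueError("order_qty must be >= 0 and daily_volume > 0")
    return coefficient * daily_vol_bps * math.sqrt(order_qty / daily_volume)


def simulated_fill_price(mid_price, side, order_qty, daily_volume,
                         daily_vol_bps, half_spread_bps):
    """Fill price after paying half the spread plus modeled impact.

    side is +1 for a buy (price moves up) and -1 for a sell (price moves down).
    """
    cost_bps = half_spread_bps + sqrt_impact_bps(order_qty, daily_volume, daily_vol_bps)
    return mid_price * (1.0 + side * cost_bps / 1e4)


if __name__ == "__main__":
    # A buy of 1% of daily volume in a name with 200 bps daily volatility.
    print(simulated_fill_price(100.0, +1, 10_000, 1_000_000, 200.0, half_spread_bps=1.0))
```

A backtester that fills every order at the mid or the touch corresponds to setting both the half spread and the impact coefficient to zero, which is the unrealistic case the table warns against.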

Robustness and Uncertainty Quantification

A sophisticated calibration process does not stop at finding a single “optimal” parameter set. It proceeds to quantify the uncertainty and robustness of that solution. The historical data used for backtesting is just one possible path the market could have taken.

A calibration that is perfectly tuned to this specific historical path may be “overfit” and perform poorly in the future. The goal is to find a parameter set that performs well across a wide range of plausible market conditions.

Techniques like cross-validation (testing the calibration on different subsets of the historical data) and scenario analysis (testing against simulated market shocks) are used to assess robustness. For time-ordered market data, the held-out subsets should come after the data used for tuning, so that the test does not leak future information into the calibration. The output of the calibration process should be a probability distribution of expected performance, not just a single point estimate. For example, the result might be: “With parameter set X, the 95% confidence interval for implementation shortfall is 5.0 to 7.5 basis points.” This approach, sometimes drawing on concepts from distributionally robust optimization, provides a much more honest and operationally useful picture of the algorithm’s likely future performance. It allows the firm to make a risk-informed decision, balancing the potential for reward with a clear understanding of the potential downside.
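One simple way to turn a set of backtest results into an interval rather than a point estimate is a percentile bootstrap, used here as an illustrative technique rather than the only option. The sketch assumes the per-run shortfall figures are roughly independent and exchangeable, which real market data may violate; the sample values are invented.

```python
import random
import statistics


def bootstrap_mean_interval(samples, confidence=0.95, n_resamples=2000, seed=0):
    """Percentile-bootstrap confidence interval for the mean of samples."""
    if not samples:
        raise ValueError("samples must be non-empty")
    rng = random.Random(seed)
    n = len(samples)
    # Resample with replacement and record the mean of each resample.
    means = sorted(
        statistics.fmean(rng.choices(samples, k=n)) for _ in range(n_resamples)
    )
    tail = (1.0 - confidence) / 2.0
    lower = means[int(tail * n_resamples)]
    upper = means[min(n_resamples - 1, int((1.0 - tail) * n_resamples))]
    return lower, upper


if __name__ == "__main__":
    # Hypothetical implementation shortfall per backtest run, in basis points.
    shortfall_bps = [4.8, 6.1, 5.5, 7.2, 6.4, 5.9, 6.8, 5.2, 6.0, 6.6]
    low, high = bootstrap_mean_interval(shortfall_bps)
    print(f"95% interval for mean shortfall: {low:.2f} to {high:.2f} bps")
```

A wide interval is a signal that the calibration rests on too little data or is sensitive to which historical periods it saw.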


Reflection

Architecting the Intelligence System

The process of calibrating a randomized algorithm, with all its intricate trade-offs, is a microcosm of a larger institutional challenge. It prompts a deeper question about the nature of the firm’s entire operational framework. Is that framework merely a collection of tools and processes, or is it a coherent, integrated system designed to generate and act upon intelligence? The knowledge gained from mastering the balance between statistical accuracy and computational cost, or between automation and human control, is a component of this larger system.

Viewing calibration through this lens transforms it from a purely quantitative task into a strategic one. Each decision about a parameter’s value is a decision about how the firm chooses to meet the market. It reflects a philosophy of risk, a perspective on information, and a commitment to a particular method of value extraction. The ultimate goal is to build an execution system that is more than just efficient; it must be an extension of the firm’s core intelligence, capable of learning, adapting, and executing with a precision that provides a durable competitive advantage.
