Concept

The Inevitable Collision of Code and Consequence

The integration of artificial intelligence into the architecture of credit underwriting represents a fundamental systemic shift. We are moving from a paradigm of human-centric, rules-based decisioning to one of algorithmic probability assessment at immense scale. This transition introduces a new class of operational and compliance risk, rooted in the very nature of machine learning itself. The core challenge is the inherent opacity of complex models, which function as intricate, non-linear systems.

Their internal logic, derived from vast datasets, can create outcomes that are statistically powerful yet causally obscure. This creates a direct conflict with the foundational principles of consumer finance regulation, which are built upon transparency, fairness, and the right to a clear explanation for a credit decision.

Regulatory frameworks such as the Equal Credit Opportunity Act (ECOA) and the Fair Housing Act were designed to govern human discretion. Their logic is largely one of causality and, for claims of disparate treatment, intent. An AI model, however, operates on correlation. It has no intent, yet its outputs can produce discriminatory effects with systemic precision.

This is the central paradox: a system with no malicious intent can still perpetuate and even amplify historical biases embedded in the data it learns from. The regulatory implications, therefore, are a direct consequence of this systemic mismatch. The conversation is about how to impose principles of fairness and accountability onto a technological substrate that does not inherently possess them.

The central regulatory challenge is mapping principles of fairness, designed for human decision-making, onto the opaque, correlation-driven logic of artificial intelligence systems.

Redefining Risk in an Algorithmic Environment

The use of AI compels a redefinition of risk within a financial institution. Traditionally, underwriting risk was primarily focused on credit default. Now, it must expand to encompass algorithmic risk, which includes several distinct vectors. The most prominent is compliance risk, specifically the potential for fair lending violations.

A model that appears neutral on its face can still create a disparate impact, where a specific protected group is disproportionately disadvantaged, even if protected characteristics were not used as inputs. This is because complex algorithms can identify and utilize proxies for these characteristics from thousands of other data points, such as ZIP codes, purchasing habits, or educational background.

Another vector is model risk itself, which supervisory guidance on model risk management treats as the potential for adverse consequences, including financial loss, from decisions based on incorrect or misused models. In the context of AI, this risk is magnified. Machine learning models can “drift” over time: as the applicant population or economic conditions change, or as the model is retrained on new data, its performance or, more critically, its fairness can degrade.

A model that was compliant at deployment may become non-compliant as the data it scores, or the data it is retrained on, diverges from what it was validated against. Consequently, the regulatory expectation shifts from one-time validation to continuous monitoring and governance. The system must be designed for perpetual oversight.
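One way to make the continuous-monitoring expectation concrete is a distribution check on model scores. The sketch below uses the Population Stability Index (PSI), a widely used drift statistic that this article does not itself prescribe. The function name, the ten equal-width bins, the assumption that scores lie in [0, 1], the alert threshold, and the sample data are all illustrative.

```python
import math


def population_stability_index(baseline, current, n_bins=10):
    """Compare two samples of model scores, each assumed to lie in [0, 1]."""
    floor = 1e-6  # stand-in share for empty bins so log() stays defined

    def bin_shares(scores):
        counts = [0] * n_bins
        for score in scores:
            index = min(int(score * n_bins), n_bins - 1)  # 1.0 goes in the last bin
            counts[index] += 1
        return [max(count / len(scores), floor) for count in counts]

    base = bin_shares(baseline)
    cur = bin_shares(current)
    # Each bin contributes (current share - baseline share) * log of their ratio.
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Illustrative data: scores at validation time vs. scores seen in production.
validation_scores = [i / 100 for i in range(100)]
production_scores = [min(s + 0.3, 0.99) for s in validation_scores]

PSI_ALERT_THRESHOLD = 0.25  # hypothetical policy value, set by the institution

psi = population_stability_index(validation_scores, production_scores)
if psi > PSI_ALERT_THRESHOLD:
    print(f"Drift alert: PSI = {psi:.2f}")
```

PSI only indicates that the score distribution has moved. It does not say whether fairness has changed, so a check like this would sit alongside group-level fairness metrics rather than replace them.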

Finally, there is reputational risk. An allegation of discriminatory algorithmic practices, whether substantiated or not, can cause significant damage to an institution’s public standing. The technical complexity of defending an AI model’s decisions in a public forum is substantial. Therefore, the regulatory imperative is also a business imperative: to build systems that are not only compliant but demonstrably fair and robust, capable of withstanding both regulatory scrutiny and public inquiry.

Strategy

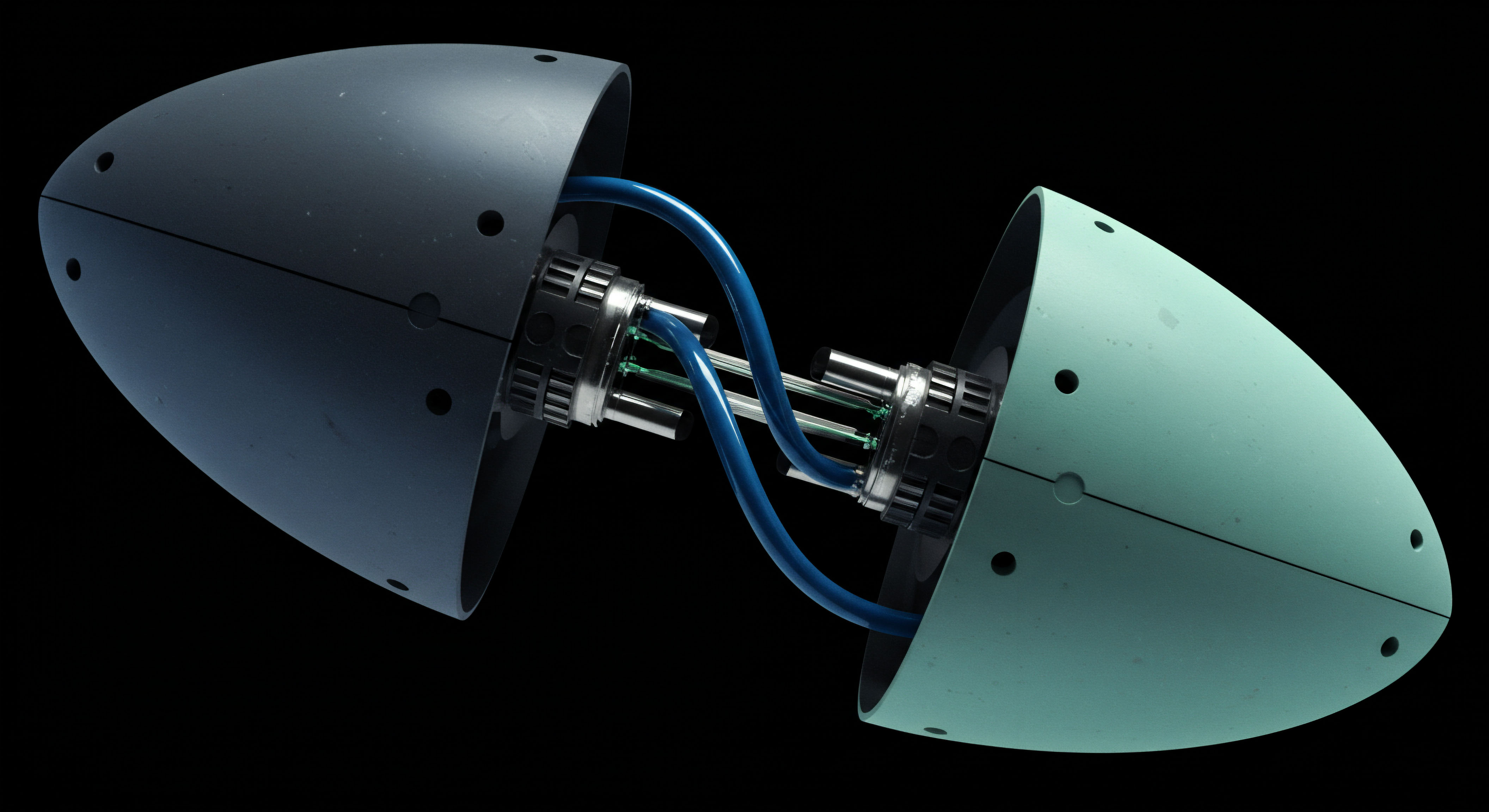

A Governance Protocol for Algorithmic Underwriting

A robust strategy for managing the regulatory implications of AI in credit underwriting begins with the establishment of a comprehensive governance protocol. This is a multi-disciplinary framework that extends beyond the data science department, integrating legal, compliance, risk management, and business line ownership into the entire lifecycle of the AI model. The objective is to create a system of checks and balances that ensures regulatory considerations are embedded from inception, not applied as a final check before deployment. This protocol treats the AI model as a core business process, subject to the same level of rigorous oversight as any other critical function.

The lifecycle of the model under this protocol consists of several distinct stages, each with specific governance requirements:

- Data Acquisition and Vetting: Before any modeling begins, the data itself must be scrutinized. This involves assessing the data for historical biases, ensuring its accuracy and relevance, and documenting its lineage. A key strategic decision here is the inclusion of alternative data. While it can expand credit access, it also introduces potential proxies for protected classes. The governance committee must approve the use of all data sources, with a clear justification for how they enhance predictive power without introducing undue fairness risk.

- Model Development and Fairness Testing: During development, the focus is on a dual mandate: predictive accuracy and fairness. This requires establishing clear fairness metrics upfront. The strategy must define which statistical measures of fairness the institution will adhere to (e.g., disparate impact analysis, statistical parity, equal opportunity). The development process must be iterative, with data scientists actively working to mitigate any biases that are detected, even if it means sacrificing a small amount of model accuracy. This trade-off decision is a critical strategic choice that must be documented and approved by the governance committee.

- Validation and Pre-Implementation Review: An independent validation team, separate from the model developers, must rigorously test the model. This includes stress-testing the model’s performance and fairness under various scenarios. The legal and compliance teams play a crucial role at this stage, reviewing the model’s documentation and testing results to provide a formal attestation of its compliance with fair lending laws. The model cannot proceed to deployment without this approval.

- Deployment and Continuous Monitoring: Post-deployment, the model’s performance and fairness must be monitored in real time. The strategy must include early-warning systems that trigger alerts if the model’s outputs begin to deviate from established fairness corridors. This is not a passive process; it requires active surveillance and periodic, in-depth reviews to detect model drift or changing population dynamics.

- Adverse Action and Explainability: The system must be capable of generating accurate and compliant adverse action notices. This is a significant technical challenge for complex “black box” models. The strategy must prioritize the use of explainable AI (XAI) techniques that can provide the principal reasons for a specific credit decision. Providing those reasons is a non-negotiable requirement under ECOA and its implementing Regulation B. The choice of model architecture itself can be a strategic one; sometimes a slightly less complex but more transparent model is preferable from a regulatory standpoint.
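For a transparent model, the principal reasons behind a denial can be read off the model directly. The following is a minimal sketch, assuming a logistic regression whose coefficients act on the log-odds of approval and a reference profile (for example, a typical approved applicant) chosen by the institution. The feature names, coefficient values, and reason wording are hypothetical, and this reference-comparison method is only one of several possible approaches, not a statement of what the regulation requires.

```python
def principal_reasons(coefficients, applicant, reference, reason_text, top_n=2):
    """Rank features by how far they pulled the applicant's approval
    log-odds below those of the reference profile."""
    contributions = {
        name: coefficients[name] * (applicant[name] - reference[name])
        for name in coefficients
    }
    # Keep only features that hurt the applicant, most harmful first.
    harmful = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    return [reason_text[name] for name, _ in harmful[:top_n]]


# Hypothetical model: a positive coefficient raises the log-odds of approval.
coefficients = {
    "debt_to_income": -4.0,
    "months_since_delinquency": 0.02,
    "credit_utilization": -2.0,
}
reference = {"debt_to_income": 0.30, "months_since_delinquency": 48, "credit_utilization": 0.25}
applicant = {"debt_to_income": 0.55, "months_since_delinquency": 6, "credit_utilization": 0.30}
reason_text = {
    "debt_to_income": "Debt-to-income ratio too high",
    "months_since_delinquency": "Recent delinquency on an account",
    "credit_utilization": "Credit utilization too high",
}

reasons = principal_reasons(coefficients, applicant, reference, reason_text)
print(reasons)
```

With a gradient boosting model or a neural network, the per-feature contributions would have to come from a post-hoc method such as SHAP instead of from coefficients, which is where the verification burden described above grows.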

Comparative Frameworks for Model Architecture

The choice of AI model architecture has direct strategic implications for regulatory compliance. Different types of models offer varying levels of performance, complexity, and, most importantly, interpretability. The following table provides a strategic comparison of common model families used in credit underwriting, evaluated through a regulatory lens.

| Model Architecture | Performance Profile | Interpretability Level | Regulatory Compliance Considerations |
|---|---|---|---|
| Logistic Regression | Baseline performance, effective with linear relationships. | High. Coefficients are directly interpretable. | Considered the traditional standard. Easy to explain for adverse action notices and straightforward to test for bias. May lack the predictive power to compete effectively. |
| Gradient Boosting Machines (e.g., XGBoost) | High predictive accuracy, can capture complex non-linear interactions. | Moderate. Requires post-hoc explanation techniques like SHAP or LIME to interpret individual predictions. | Widely used due to high performance. Regulators accept these models, but expect robust explanation methodologies and documentation. The “black box” risk is present but manageable with proper controls. |
| Random Forest | Strong performance, robust to overfitting. | Moderate. Similar to gradient boosting, requires XAI methods for individual explanations. Feature importance is relatively easy to calculate globally. | A common choice that balances performance and risk. The ensemble nature can make precise causal pathways difficult to trace, requiring strong validation processes. |
| Neural Networks (Deep Learning) | Potentially the highest predictive accuracy, especially with vast and unstructured data. | Low. Highly opaque and difficult to interpret. Explaining individual decisions is a significant technical challenge. | Represents the highest level of regulatory risk. While powerful, the inability to provide clear, specific reasons for adverse actions can lead to direct violations of ECOA. Use in underwriting is still nascent and requires extensive justification and novel XAI frameworks to be viable. |

Choosing a model architecture is a strategic balancing act between maximizing predictive accuracy and maintaining the necessary transparency to meet regulatory obligations.

Execution

The AI Model Validation Protocol

Executing a compliant AI underwriting strategy requires a granular, evidence-based validation protocol. This protocol is the operational manifestation of the governance framework, providing a concrete set of procedures to ensure that a model is sound, fair, and compliant before it impacts a single applicant. The validation process must be conducted by an independent team and the results must be meticulously documented for regulatory review. The following steps constitute a baseline validation protocol for a new AI credit underwriting model.

- Conceptual Soundness Review: The first step is a qualitative assessment. The validation team reviews the model’s theoretical underpinnings. This includes an evaluation of the business logic, the selection of input variables, and the appropriateness of the chosen modeling technique. The goal is to confirm that the model is built on a logical and defensible foundation, and that the data used is relevant to the task of assessing creditworthiness.

- Data Integrity and Bias Analysis: The validation team conducts an independent audit of the model’s development data. This involves verifying the data’s accuracy, completeness, and lineage. Crucially, this stage includes a deep analysis for potential biases. The data is segmented by race, ethnicity, sex, and age groups to identify any pre-existing disparities that the model might learn and amplify. This analysis must be performed before evaluating the model’s outputs.

- Quantitative Model Performance Testing: This involves a rigorous statistical evaluation of the model’s predictive accuracy. The validation team will use various metrics (e.g., accuracy, precision, recall, AUC-ROC) to assess performance on a holdout dataset that was not used during model training. The performance is also evaluated across different demographic subgroups to ensure the model is not systematically less accurate for certain protected classes.

- Fair Lending Compliance Testing: This is the most critical stage for regulatory purposes. The validation team runs a series of specific statistical tests to measure the model’s fairness. The results of these tests are compared against pre-defined thresholds set by the institution’s risk appetite and compliance policy. A detailed report is generated, which becomes a key component of the model’s compliance documentation.

- Explainability and Adverse Action Logic Verification: The protocol requires testing the system’s ability to generate adverse action notices. The validation team will select a sample of denied applicants from the test data and review the reasons generated by the XAI system. These reasons are checked for accuracy, clarity, and compliance with Regulation B, which requires specific and principal reasons for denial.

- Implementation and Process Verification: Before the model goes live, the validation team verifies that the model implemented in the production environment is the exact same version that was tested and approved. This includes checking the code, the data pipelines, and the surrounding decisioning logic to ensure nothing has changed that could alter the model’s behavior.
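The subgroup check in the quantitative performance step above can be sketched as a per-group AUC-ROC on the holdout set. This is a minimal illustration, assuming each holdout record carries a demographic group label, an observed outcome (1 = defaulted, 0 = repaid), and the model’s predicted default score. The record layout, group names, and data are hypothetical, and the pairwise AUC calculation is written for clarity rather than speed.

```python
def auc(labels, scores):
    """Probability that a random defaulter is scored above a random
    non-defaulter; ties count as half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        return None  # AUC is undefined without both outcomes
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def auc_by_group(records):
    """records: iterable of (group, label, score) tuples."""
    grouped = {}
    for group, label, score in records:
        labels, scores = grouped.setdefault(group, ([], []))
        labels.append(label)
        scores.append(score)
    return {group: auc(labels, scores) for group, (labels, scores) in grouped.items()}


# Illustrative holdout records: (group, defaulted, predicted default score).
holdout = [
    ("group_a", 1, 0.9), ("group_a", 1, 0.7), ("group_a", 0, 0.4), ("group_a", 0, 0.2),
    ("group_b", 1, 0.8), ("group_b", 1, 0.3), ("group_b", 0, 0.5), ("group_b", 0, 0.1),
]

group_auc = auc_by_group(holdout)
print(group_auc)  # {'group_a': 1.0, 'group_b': 0.75}
```

A material gap between groups, as in this toy data, would be a finding for the validation report. How large a gap is tolerable is a policy decision the protocol leaves to the institution.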

Executing a Fair Lending Analysis for Machine Learning Models

A fair lending analysis of an AI model is a multi-faceted process. It involves applying several different statistical metrics to view the model’s outcomes from different perspectives, as no single metric can capture all aspects of fairness. The following table details the key metrics and their operational execution.

| Fairness Metric | Definition | Execution Steps | Regulatory Relevance |
|---|---|---|---|
| Adverse Impact Ratio (AIR) | Compares the selection rate (approval rate) of a protected group to the selection rate of the most favored group. Often, a ratio below 80% is flagged. | 1. Calculate the approval rate for each demographic subgroup (e.g., by race, gender). 2. Identify the subgroup with the highest approval rate (the reference group). 3. For each other subgroup, divide its approval rate by the reference group’s approval rate. | This is the cornerstone of disparate impact analysis. It provides a direct measure of whether a neutral policy has a disproportionately negative effect on a protected class. |
| Standardized Mean Difference (SMD) | Measures the difference in the average model score between two demographic groups, normalized by the standard deviation. | 1. For each demographic group, calculate the mean and standard deviation of the model’s output scores. 2. For each pair of groups, calculate the difference in their means. 3. Divide this difference by the pooled standard deviation. | Helps to identify if the model is systematically assigning lower scores to a particular group, even if the final approval/denial decision doesn’t trigger an AIR flag. Useful for early-stage model development. |
| Marginal Effect Analysis | Examines the impact of individual input variables on the model’s output across different demographic groups. | 1. Use an XAI technique like SHAP to calculate the impact of each feature on the model’s output. 2. Compare the average marginal effect of key variables (e.g., income, debt-to-income ratio) between protected and reference groups. | This analysis helps to uncover “hidden” biases where a variable may be more impactful for one group than another. It provides a deeper diagnostic view into the model’s internal logic. |
| Less Discriminatory Alternative (LDA) Analysis | The process of searching for and evaluating alternative models or configurations that achieve a similar business objective with less disparate impact. | 1. If the primary model shows significant adverse impact, develop alternative candidate models. 2. These alternatives might use different variables, algorithms, or fairness constraints. 3. Evaluate each LDA’s predictive performance and fairness metrics against the primary model. 4. Document the search and the final decision. | This is a core requirement of disparate impact law. Regulators expect institutions to have conducted a rigorous search for LDAs if their chosen model produces a discriminatory effect. |
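The execution steps for the first two metrics in the table translate almost directly into code. The sketch below is illustrative only: the function names, group labels, and sample data are hypothetical, the 0.80 flag level is the one mentioned in the table, and the pooled standard deviation uses the usual sample-variance formula, which is an assumption about how the institution defines it.

```python
import math
from statistics import mean, variance  # variance() is the sample variance


def adverse_impact_ratios(decisions):
    """decisions: iterable of (group, approved) pairs. Returns {group: AIR}."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + int(approved)
    rates = {group: approvals[group] / totals[group] for group in totals}
    reference_rate = max(rates.values())  # the most favored group's approval rate
    if reference_rate == 0:
        raise ValueError("no approvals in any group; AIR is undefined")
    return {group: rate / reference_rate for group, rate in rates.items()}


def standardized_mean_difference(scores_a, scores_b):
    """Difference in mean score divided by the pooled standard deviation.
    Each group needs at least two scores."""
    n_a, n_b = len(scores_a), len(scores_b)
    pooled_variance = (
        (n_a - 1) * variance(scores_a) + (n_b - 1) * variance(scores_b)
    ) / (n_a + n_b - 2)
    return (mean(scores_a) - mean(scores_b)) / math.sqrt(pooled_variance)


AIR_FLAG_THRESHOLD = 0.80  # the "80%" level referred to in the table

# Illustrative decisions: 8 of 10 approved in group_x, 6 of 10 in group_y.
decisions = (
    [("group_x", True)] * 8 + [("group_x", False)] * 2
    + [("group_y", True)] * 6 + [("group_y", False)] * 4
)
ratios = adverse_impact_ratios(decisions)
flagged = [group for group, ratio in ratios.items() if ratio < AIR_FLAG_THRESHOLD]
print(flagged)  # ['group_y'], whose ratio is roughly 0.75

# Illustrative model scores for two groups; the result here is close to -1.
smd = standardized_mean_difference([0.2, 0.4, 0.6], [0.4, 0.6, 0.8])
```

Running both on the same model output is useful because they answer different questions: AIR looks at final decisions, while SMD looks at the underlying scores and can show a gap before it reaches the approval cutoff.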


Reflection

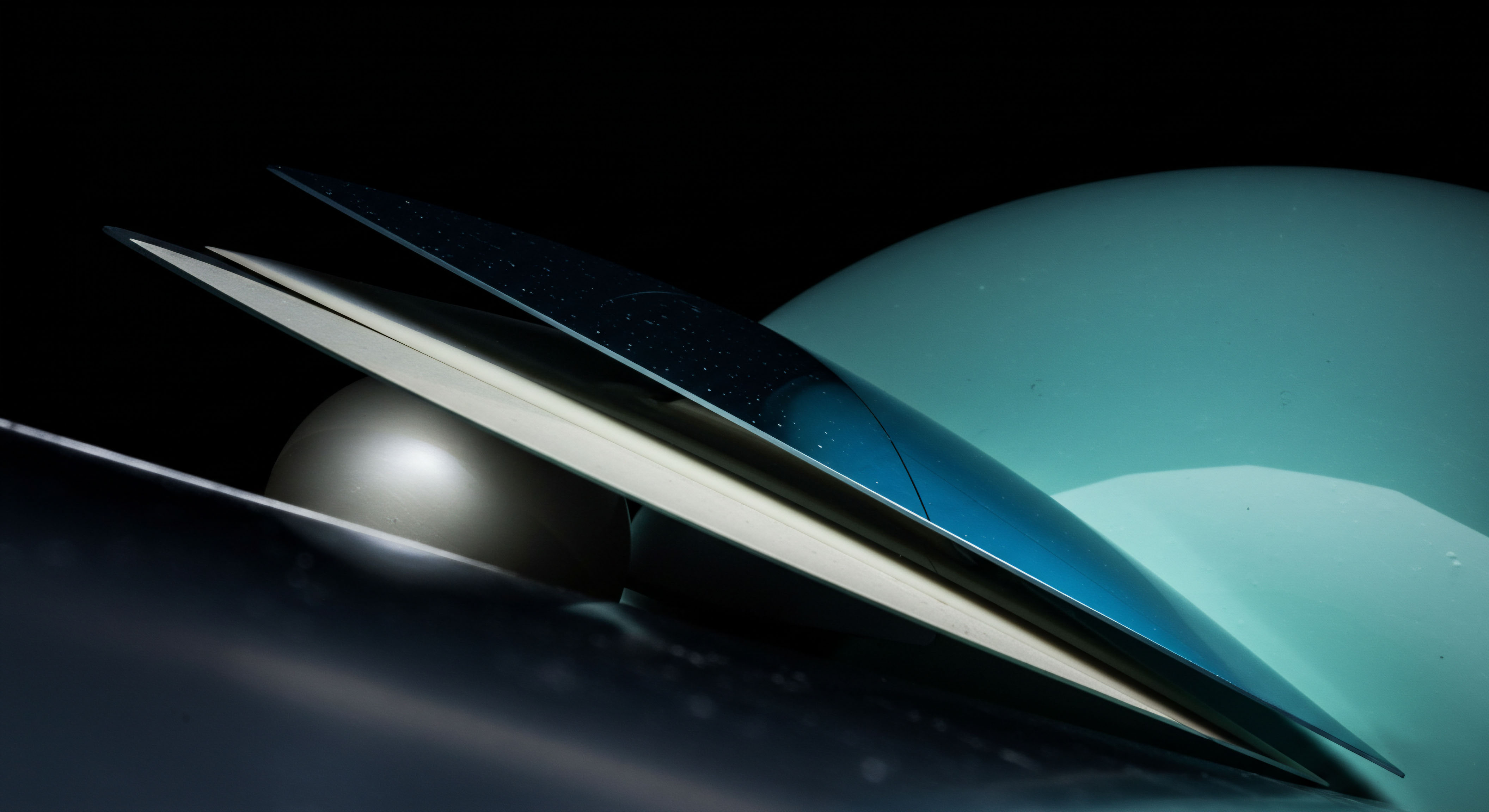

The System’s New Sensory Layer

The integration of AI into credit underwriting is the financial system developing a new, powerful sensory layer. It can perceive patterns of risk and opportunity in data that were previously invisible. Yet, like any new sense, its inputs must be calibrated. An uncalibrated system can be overwhelmed by noise or misinterpret signals, leading to flawed judgments.

The regulatory frameworks are not impediments to this evolution; they are the calibration protocols. They provide the necessary constraints to ensure that this new sensory capability is focused on its intended purpose: assessing the financial risk of an individual, without being distorted by the echoes of societal biases embedded in the data.

Ultimately, the challenge is one of system design. Building a compliant AI underwriting platform is an exercise in constructing a resilient, self-aware system. It requires an architecture that not only makes decisions but also explains them, monitors its own behavior for signs of drift, and contains the internal feedback loops necessary for self-correction. The institution that masters this will possess a significant operational advantage, one built not just on superior prediction, but on the trust and stability that come from a demonstrably fair and transparent system.
