Concept

The System Is the Strategy

The deployment of a new Smart Trading feature is not an isolated event. It represents a deliberate enhancement of a firm’s core operational apparatus: the integrated system of technology, quantitative models, and human oversight that dictates its interaction with the market. Viewing this process through any other lens is a fundamental misinterpretation of its purpose. The objective is to refine the very mechanism of execution, embedding new intelligence directly into the firm’s market-facing infrastructure.

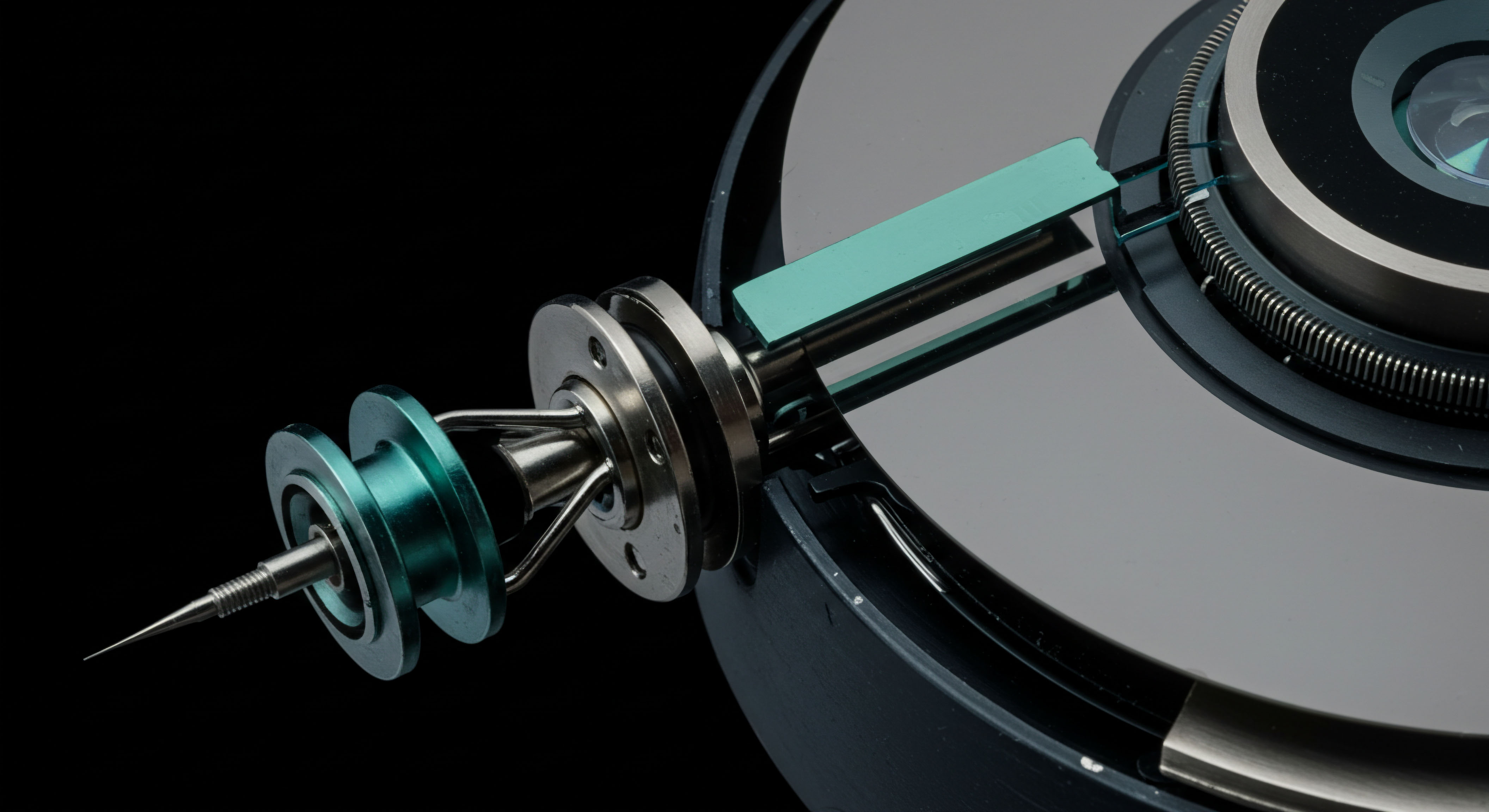

A new feature, whether it’s a sophisticated liquidity-seeking algorithm or a dynamic hedging tool, functions as a specialized module within this broader system. Its value is measured by its ability to improve the system’s overall performance metrics: capital efficiency, execution quality, and risk mitigation. Therefore, the deployment process is a structured, multi-stage protocol designed to ensure that this new module integrates seamlessly, performs reliably, and contributes measurably to the strategic objectives of the institution.

This perspective shifts the focus from the feature itself to the system it inhabits. The central challenge lies in ensuring that the introduction of a new component enhances, rather than disrupts, the carefully calibrated equilibrium of the existing trading infrastructure. Each stage of the deployment, from initial conception to final production rollout, is a risk management checkpoint. It is a systematic validation that the feature’s logic is sound, its behavior is predictable under a wide range of market conditions, and its performance is aligned with its intended purpose.

The process is inherently iterative, involving a continuous feedback loop between quantitative researchers, developers, traders, and risk managers. This collaborative approach is essential for navigating the complexities of modern market microstructure and for building a robust, resilient, and high-performing trading system. The successful deployment of a Smart Trading feature is, in essence, a successful evolution of the firm’s entire operational capability.

Defining the Smart Trading Feature

A Smart Trading feature, in the institutional context, is a pre-programmed set of rules designed to automate complex trading decisions and execution workflows. These features are not simple buy or sell orders; they are sophisticated algorithms that can account for a multitude of variables, including market volatility, liquidity, order book depth, and transaction costs. Examples range from Volume-Weighted Average Price (VWAP) algorithms that aim to execute large orders with minimal market impact, to advanced options strategies that automatically manage delta hedging for a derivatives portfolio.

The “smart” component refers to the feature’s ability to adapt its behavior in real-time based on evolving market data, making decisions that a human trader might, but at a speed and scale that is impossible to replicate manually. The design of such a feature is a direct translation of a specific trading strategy into executable code, creating a tool that is both precise in its function and scalable in its application.
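
To make the VWAP example concrete, the following is a minimal sketch of two building blocks such a feature might contain: computing a volume-weighted average price, and slicing a large parent order across time buckets in proportion to an expected volume profile. The function names (`vwap`, `vwap_schedule`) and the profile values are hypothetical illustrations, not part of any specific trading platform.

```python
from typing import List, Tuple


def vwap(trades: List[Tuple[float, float]]) -> float:
    """Volume-weighted average price of (price, volume) pairs."""
    total_volume = sum(volume for _, volume in trades)
    if total_volume <= 0:
        raise ValueError("total volume must be positive")
    return sum(price * volume for price, volume in trades) / total_volume


def vwap_schedule(parent_qty: int, volume_profile: List[float]) -> List[int]:
    """Split parent_qty across buckets in proportion to expected volume.

    volume_profile is an assumed per-bucket volume forecast (hypothetical).
    Any rounding remainder is assigned to the last bucket so the child
    quantities always sum to parent_qty.
    """
    total = sum(volume_profile)
    if total <= 0:
        raise ValueError("volume profile must have positive total")
    slices = [int(parent_qty * weight / total) for weight in volume_profile]
    slices[-1] += parent_qty - sum(slices)
    return slices


if __name__ == "__main__":
    print(vwap([(100.0, 200), (101.0, 100)]))
    print(vwap_schedule(1000, [1, 2, 1]))
```

A production algorithm would adapt the schedule in real time as actual volume diverges from the forecast; this sketch shows only the static starting point.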

The core of a Smart Trading feature is the codification of expert judgment into a repeatable, automated process.

The development of these features is driven by the need to solve specific execution challenges. For instance, a liquidity-seeking algorithm is designed to uncover hidden pools of liquidity for large block trades, minimizing the information leakage that can lead to adverse price movements. A pairs trading feature automates the process of identifying and capitalizing on temporary pricing dislocations between two correlated assets.
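
As one hedged illustration of the pairs trading idea, a pricing dislocation is often expressed as a z-score of the spread between the two assets. The sketch below assumes a fixed hedge ratio and an entry threshold of 2.0; both values, and the function names, are hypothetical choices for illustration rather than a recommended calibration.

```python
from statistics import mean, stdev
from typing import Sequence


def spread_zscore(prices_a: Sequence[float], prices_b: Sequence[float],
                  hedge_ratio: float = 1.0) -> float:
    """Z-score of the latest spread relative to the preceding history."""
    spread = [a - hedge_ratio * b for a, b in zip(prices_a, prices_b)]
    if len(spread) < 3:
        raise ValueError("need at least three observations")
    history = spread[:-1]  # exclude the latest point from its own baseline
    sigma = stdev(history)
    if sigma == 0:
        raise ValueError("spread history has zero variance")
    return (spread[-1] - mean(history)) / sigma


def pairs_signal(z: float, entry: float = 2.0) -> str:
    """Map a z-score to a position in the spread (hypothetical thresholds)."""
    if z > entry:
        return "short_spread"   # spread unusually wide: sell A, buy B
    if z < -entry:
        return "long_spread"    # spread unusually narrow: buy A, sell B
    return "flat"


if __name__ == "__main__":
    z = spread_zscore([10, 11, 10, 11, 15], [10, 10, 10, 10, 10])
    print(round(z, 3), pairs_signal(z))
```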

Each feature is a purpose-built solution to a well-defined problem, and its success is contingent on the clarity of its objectives and the robustness of its underlying logic. The process of defining the feature, therefore, is the foundational step in the deployment lifecycle, requiring a deep understanding of both the market mechanics and the strategic intent behind the trade.

Strategy

A Framework for Deliberate Deployment

The strategic framework for deploying a new Smart Trading feature is a disciplined, multi-phased approach that moves from abstract idea to concrete implementation. This process is designed to maximize the probability of success while systematically mitigating the inherent risks. It begins with a rigorous analysis of the opportunity, followed by a detailed definition of the feature’s requirements, a careful selection of the underlying quantitative models, and the establishment of a comprehensive risk management framework.

Each phase is a critical building block, and the integrity of the entire process depends on the thoroughness with which each step is executed. This is a strategic endeavor that requires a cross-functional team of experts, including portfolio managers, quantitative analysts, software engineers, and compliance officers, all working in concert to achieve a common objective.

The initial phase, opportunity analysis, involves identifying a specific area where a new automated strategy can create a tangible advantage. This could be a response to changing market conditions, the emergence of a new asset class, or the identification of a persistent inefficiency in the execution process. Once an opportunity is identified, the team must articulate a clear and concise set of objectives for the proposed feature. These objectives will serve as the guiding principles for the entire development and deployment lifecycle, ensuring that the final product is aligned with the firm’s strategic goals.

This stage is about asking the right questions: What problem are we trying to solve? How will we measure success? What are the potential risks and how will we mitigate them? The answers to these questions will form the foundation upon which the entire project is built.

Phase 1: Opportunity Analysis and Requirements Definition

The first phase of the strategic process is a dual-pronged effort that combines a high-level assessment of the opportunity with a granular definition of the feature’s technical and functional requirements. The opportunity analysis begins with a thorough market study to validate the hypothesis that a new Smart Trading feature can generate alpha or reduce transaction costs. This involves analyzing historical market data, studying the behavior of competing strategies, and assessing the potential impact of the proposed feature on the firm’s overall trading performance. The goal is to build a compelling business case for the project, demonstrating that the potential benefits justify the significant investment of time and resources required for development and deployment.

Once the business case is approved, the focus shifts to the detailed definition of requirements. This is a critical translation step, where the strategic objectives of the feature are converted into a precise set of specifications that will guide the development team. This process involves close collaboration between traders, who understand the market and the desired trading behavior, and engineers, who understand the technical constraints and possibilities. The requirements document should cover every aspect of the feature’s functionality, including:

- Order Logic: The specific conditions that will trigger buy and sell orders, including price levels, volume thresholds, and technical indicators.

- Parameterization: The user-configurable settings that will allow traders to customize the feature’s behavior for different market conditions and risk tolerances.

- Data Inputs: The real-time market data feeds that the feature will require to make its decisions, such as prices, order book depth, and volatility measures, together with the high-performance computing and network connectivity needed to consume them.

- Risk Controls: The built-in safety mechanisms that will prevent the feature from behaving erratically or causing unintended losses, such as kill switches and position limits.

- Performance Metrics: The key performance indicators (KPIs) that will be used to evaluate the feature’s effectiveness, such as slippage, fill rates, and alpha generation.
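
One way to keep a requirements document unambiguous is to mirror its sections in a machine-checkable structure. The sketch below is a hypothetical representation of the five requirement areas listed above; the class names, field names, and validation rules are assumptions for illustration, not an established schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RiskControls:
    max_position: int            # position limit, in units of the instrument
    max_order_qty: int           # largest single order the feature may send
    kill_switch_enabled: bool = True


@dataclass(frozen=True)
class FeatureSpec:
    name: str
    order_logic: str             # plain-language description of triggers
    parameters: Dict[str, float] # trader-configurable settings
    data_inputs: List[str]       # required market data feeds
    risk_controls: RiskControls
    kpis: List[str]              # metrics used to judge the feature

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the spec is complete."""
        problems = []
        if not self.data_inputs:
            problems.append("no data inputs declared")
        if not self.kpis:
            problems.append("no performance metrics declared")
        if self.risk_controls.max_order_qty > self.risk_controls.max_position:
            problems.append("max order size exceeds position limit")
        if not self.risk_controls.kill_switch_enabled:
            problems.append("kill switch disabled")
        return problems


if __name__ == "__main__":
    spec = FeatureSpec(
        name="example-vwap",  # hypothetical feature name
        order_logic="slice parent order along expected volume curve",
        parameters={"participation_cap": 0.10},
        data_inputs=["trades", "order_book"],
        risk_controls=RiskControls(max_position=50_000, max_order_qty=5_000),
        kpis=["slippage", "fill_rate"],
    )
    print(spec.validate())
```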

Phase 2: Model Selection and Risk Framework

With a clear set of requirements in hand, the next phase focuses on selecting the appropriate quantitative models and designing a robust risk management framework. The choice of model is a critical decision that will have a profound impact on the feature’s performance and behavior. There are numerous approaches to model design, each with its own strengths and weaknesses.

For example, a trend-following strategy might use a simple moving average crossover model, while a more complex statistical arbitrage strategy might employ a sophisticated cointegration model. The selection process involves evaluating different models based on their historical performance, their robustness to changing market conditions, and their computational efficiency.
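
For reference, a simple moving average crossover model of the kind mentioned above can be sketched in a few lines. The window lengths (3 and 5) and function names are hypothetical; a real model would be calibrated and validated through the backtesting process described later.

```python
from typing import Sequence


def sma(values: Sequence[float], window: int) -> float:
    """Simple moving average of the last `window` values."""
    if window <= 0 or len(values) < window:
        raise ValueError("not enough data for the requested window")
    return sum(values[-window:]) / window


def crossover_signal(prices: Sequence[float], fast: int = 3, slow: int = 5) -> int:
    """Return +1 when the fast average crosses above the slow average on the
    latest bar, -1 when it crosses below, and 0 otherwise."""
    if fast >= slow:
        raise ValueError("fast window must be shorter than slow window")
    if len(prices) < slow + 1:
        return 0  # not enough history to compare two consecutive bars
    previous = prices[:-1]
    prev_diff = sma(previous, fast) - sma(previous, slow)
    curr_diff = sma(prices, fast) - sma(prices, slow)
    if prev_diff <= 0 < curr_diff:
        return 1
    if prev_diff >= 0 > curr_diff:
        return -1
    return 0


if __name__ == "__main__":
    print(crossover_signal([10, 10, 10, 10, 10, 12]))
    print(crossover_signal([10, 10, 10, 10, 10, 8]))
```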

The risk framework is not an add-on; it is an integral part of the feature’s design from the very beginning.

Simultaneously, the team must develop a comprehensive risk management framework that will govern the feature’s operation in the live market. This framework should include a multi-layered system of controls designed to mitigate a wide range of potential risks, from model failure and software bugs to adverse market events and regulatory changes. The table below outlines the key components of a typical risk framework for a Smart Trading feature.

| Control Layer | Description | Examples |
|---|---|---|
| Pre-Trade Controls | Automated checks that are performed before an order is sent to the market. | Fat-finger checks, maximum order size limits, compliance checks. |
| Intra-Trade Controls | Real-time monitoring of the feature’s behavior while it is executing. | Position limits, intraday loss limits, deviation from benchmark alerts. |
| Post-Trade Controls | Analysis of the feature’s performance after the close of trading. | Transaction Cost Analysis (TCA), performance attribution, model validation. |
| Operational Controls | Manual oversight and intervention procedures. | Kill switch functionality, manual override capabilities, escalation procedures. |
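
The pre-trade and intra-trade layers lend themselves to a short illustration. The following sketch shows how a fat-finger check, a maximum order size limit, a position limit, and an intraday loss trigger might be expressed; all names and limit values are hypothetical, and a real implementation would sit inside the firm’s order path rather than in standalone functions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Order:
    symbol: str
    side: str          # "buy" or "sell"
    qty: int
    limit_price: float


@dataclass
class RiskLimits:
    max_order_qty: int
    max_price_deviation: float  # allowed fraction away from reference price
    max_position: int
    max_intraday_loss: float    # positive number, in account currency


def pre_trade_check(order: Order, reference_price: float,
                    current_position: int, limits: RiskLimits) -> List[str]:
    """Return the list of violated controls; an empty list means the order passes."""
    violations = []
    if order.qty <= 0 or order.qty > limits.max_order_qty:
        violations.append("order size")
    deviation = abs(order.limit_price - reference_price) / reference_price
    if deviation > limits.max_price_deviation:
        violations.append("fat finger")
    signed_qty = order.qty if order.side == "buy" else -order.qty
    if abs(current_position + signed_qty) > limits.max_position:
        violations.append("position limit")
    return violations


def should_kill(intraday_pnl: float, limits: RiskLimits) -> bool:
    """Intra-trade control: trip the kill switch once the loss limit is reached."""
    return intraday_pnl <= -limits.max_intraday_loss


if __name__ == "__main__":
    limits = RiskLimits(1000, 0.05, 5000, 10_000.0)
    print(pre_trade_check(Order("ABC", "buy", 2000, 120.0), 100.0, 4000, limits))
```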

Execution

The Operational Playbook for Flawless Integration

The execution phase is where the strategic vision for the new Smart Trading feature becomes a tangible reality. This is a highly structured and disciplined process that involves a series of distinct stages, each with its own set of protocols, deliverables, and success criteria. The overarching goal is to ensure that the feature is developed, tested, and deployed in a manner that is safe, reliable, and efficient.

This requires a seamless collaboration between the development team, the quantitative research team, and the trading desk, all operating within a clearly defined governance framework. The execution playbook is a detailed roadmap that guides the team through every step of the process, from writing the first line of code to monitoring the feature’s performance in the live market.

The journey begins with the core development and integration work, where the feature’s logic is translated into robust, production-quality code. This is followed by a rigorous testing and validation process, which includes both backtesting against historical data and forward-testing in a simulated market environment. Once the feature has been thoroughly vetted, it is ready for a phased rollout into the production environment, starting with a limited deployment and gradually expanding as confidence in its performance grows.

Throughout this entire process, a dedicated team is responsible for monitoring the feature’s behavior, collecting performance data, and making any necessary adjustments. This iterative approach to execution is essential for managing the complexities and risks inherent in algorithmic trading, and for ensuring that the final product meets the high standards of an institutional trading operation.

Development and System Integration

The development process begins with the translation of the detailed requirements document into a technical specification that will guide the software engineers. This involves making key architectural decisions, such as the choice of programming language (Python, C++, and Java are common choices), the design of the data structures, and the overall structure of the code. The development team should follow a disciplined software development lifecycle (SDLC) methodology, such as Agile or Waterfall, to ensure that the project is well-organized and that progress is tracked effectively. Regular code reviews and unit testing are essential for maintaining a high level of code quality and for catching potential bugs early in the development process.

A critical aspect of the development phase is the integration of the new feature with the firm’s existing trading infrastructure. This includes connecting the feature to the Order Management System (OMS) and the Execution Management System (EMS), which are the core platforms for managing and executing trades. The integration process requires careful planning and coordination to ensure that data flows seamlessly between the different systems and that the new feature can interact with the market without causing any disruptions. The following checklist outlines the key steps involved in the system integration process:

- API Integration: Establish a stable and reliable connection between the Smart Trading feature and the OMS/EMS platforms using their respective Application Programming Interfaces (APIs).

- Data Feed Integration: Connect the feature to the necessary real-time market data feeds, ensuring that the data is accurate, timely, and properly formatted.

- Order Routing Configuration: Configure the order routing rules to ensure that orders generated by the feature are sent to the appropriate execution venues.

- Compliance and Regulatory Reporting: Ensure that the feature is integrated with the firm’s compliance and regulatory reporting systems to meet all legal and regulatory obligations.

- User Interface Development: Create a user-friendly interface that allows traders to monitor the feature’s performance, adjust its parameters, and intervene manually if necessary.

Quantitative Modeling and Rigorous Backtesting

Before a new Smart Trading feature can be deployed in the live market, it must undergo a rigorous backtesting process to validate its logic and assess its historical performance. Backtesting involves running the feature’s algorithm against historical market data to see how it would have performed in the past. This is a critical step in the validation process, as it provides a quantitative assessment of the feature’s potential profitability and risk profile.

A thorough backtest should cover a long period of time and a wide range of market conditions, including periods of high and low volatility, bull and bear markets, and major market events. The goal is to build confidence that the feature’s performance is not simply the result of data mining or curve fitting, but rather the product of a sound and robust trading logic.

The backtesting process should generate a comprehensive set of performance and risk metrics that can be used to evaluate the feature’s effectiveness. The table below provides an example of the key metrics that should be included in a backtesting report.

| Metric Category | Metric Name | Description |
|---|---|---|
| Profitability | Total Return | The total percentage gain or loss over the backtesting period. |
| Profitability | Annualized Return | The geometric average return earned per year over the backtesting period. |
| Profitability | Win/Loss Ratio | The ratio of the number of winning trades to the number of losing trades. |
| Risk | Sharpe Ratio | A measure of risk-adjusted return, calculated as the average return earned in excess of the risk-free rate per unit of volatility. |
| Risk | Maximum Drawdown | The maximum observed loss from a peak to a trough of a portfolio, before a new peak is attained. |
| Risk | Volatility | The standard deviation of the feature’s returns, a measure of how much those returns fluctuate. |
| Execution Quality | Average Slippage | The average difference between the expected price of a trade and the price at which the trade is actually executed. |
| Execution Quality | Fill Rate | The percentage of orders that are successfully executed. |
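
The metrics in the table can be computed from an equity curve, a list of per-trade results, and a record of fills. The sketch below uses only the Python standard library; the function names, the 252-period year, and the sign convention for slippage (positive means a cost) are assumptions made for illustration.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def period_returns(equity: Sequence[float]) -> List[float]:
    return [b / a - 1 for a, b in zip(equity, equity[1:])]


def total_return(equity: Sequence[float]) -> float:
    return equity[-1] / equity[0] - 1


def annualized_return(equity: Sequence[float], periods_per_year: int = 252) -> float:
    """Geometric average return per year."""
    n_periods = len(equity) - 1
    return (equity[-1] / equity[0]) ** (periods_per_year / n_periods) - 1


def annualized_volatility(returns: Sequence[float], periods_per_year: int = 252) -> float:
    return stdev(returns) * math.sqrt(periods_per_year)


def sharpe_ratio(returns: Sequence[float], risk_free_per_period: float = 0.0,
                 periods_per_year: int = 252) -> float:
    excess = [r - risk_free_per_period for r in returns]
    return mean(excess) / stdev(excess) * math.sqrt(periods_per_year)


def max_drawdown(equity: Sequence[float]) -> float:
    """Largest peak-to-trough decline, as a positive fraction of the peak."""
    peak, worst = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


def win_loss_ratio(trade_pnls: Sequence[float]) -> float:
    wins = sum(1 for pnl in trade_pnls if pnl > 0)
    losses = sum(1 for pnl in trade_pnls if pnl < 0)
    return wins / losses if losses else float("inf")


def average_slippage(fills: Sequence[Tuple[float, float, str]]) -> float:
    """fills holds (expected_price, executed_price, side); positive = cost."""
    costs = [(executed - expected) if side == "buy" else (expected - executed)
             for expected, executed, side in fills]
    return mean(costs)


def fill_rate(orders_sent: int, orders_filled: int) -> float:
    return orders_filled / orders_sent


if __name__ == "__main__":
    curve = [100.0, 110.0, 99.0, 120.0]
    print(total_return(curve), max_drawdown(curve))
    print(sharpe_ratio(period_returns(curve)))
```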

Staging, Simulation, and Phased Rollout

Once a Smart Trading feature has successfully passed the backtesting phase, it is ready to move into a simulated live environment for forward-testing, also known as paper trading. This involves running the feature in real-time with live market data, but without sending any actual orders to the market. The purpose of paper trading is to see how the feature behaves in a live market environment and to identify any potential issues that may not have been apparent during backtesting.

This is a critical step in the validation process, as it provides a much more realistic assessment of the feature’s performance than backtesting alone. The paper trading period should be long enough to capture a variety of market conditions and to build confidence that the feature is ready for production deployment.
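
A paper trading harness can be as simple as a stand-in for the order gateway that records hypothetical fills against live quotes instead of routing orders. The sketch below assumes marketable orders that fill immediately at the touch (buys at the ask, sells at the bid), which ignores queue position and market impact; the `PaperBroker` and `Quote` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Quote:
    bid: float
    ask: float


@dataclass
class PaperBroker:
    """Records simulated fills; nothing is ever sent to a real venue."""
    position: int = 0
    cash: float = 0.0
    fills: List[Tuple[str, int, float]] = field(default_factory=list)

    def submit(self, side: str, qty: int, quote: Quote) -> float:
        """Simulate a marketable order and return the assumed fill price."""
        if side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        price = quote.ask if side == "buy" else quote.bid
        signed_qty = qty if side == "buy" else -qty
        self.position += signed_qty
        self.cash -= signed_qty * price
        self.fills.append((side, qty, price))
        return price

    def mark_to_market(self, quote: Quote) -> float:
        """Simulated P&L with the open position valued at the mid price."""
        mid = (quote.bid + quote.ask) / 2
        return self.cash + self.position * mid


if __name__ == "__main__":
    broker = PaperBroker()
    broker.submit("buy", 100, Quote(bid=99.9, ask=100.1))
    print(broker.mark_to_market(Quote(bid=100.9, ask=101.1)))
```

Because the fill model is optimistic, results from a harness like this are best read as an upper bound on execution quality rather than a forecast.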

A phased rollout is a disciplined approach to risk management that allows for a gradual and controlled introduction of the new feature into the live trading environment.

Following a successful paper trading period, the feature is ready for a phased rollout into the production environment. This is a carefully managed process that involves gradually increasing the feature’s exposure to the live market while closely monitoring its performance. The rollout typically proceeds through a series of stages:

- Stage 1, Limited Deployment: The feature is deployed with a very small capital allocation and is closely monitored by the trading and development teams.

- Stage 2, Gradual Scaling: If the feature performs as expected in Stage 1, its capital allocation is gradually increased over time.

- Stage 3, A/B Testing: The performance of the new feature is compared to existing trading strategies or manual execution to provide a quantitative assessment of its value.

- Stage 4, Full Deployment: Once the feature has demonstrated its effectiveness and reliability, it is fully deployed and becomes a permanent part of the firm’s trading toolkit.

Throughout the rollout process, it is essential to have a dedicated team responsible for monitoring the feature’s performance and for responding to any issues that may arise. This team should have a clear set of protocols for escalating issues and for making decisions about whether to reduce the feature’s capital allocation or to deactivate it entirely. This continuous monitoring and oversight are critical for ensuring the ongoing safety and effectiveness of the Smart Trading feature.
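
The staged rollout and its escalation protocol can be thought of as a small state machine: advance when the feature performs as expected, step back when execution quality degrades, and deactivate on a hard breach. The sketch below is one hypothetical encoding; the stage names follow the list above, but the allocation fractions and the slippage threshold are invented for illustration only.

```python
from dataclasses import dataclass

# (stage name, fraction of target capital) -- fractions are hypothetical
STAGES = [
    ("limited_deployment", 0.01),
    ("gradual_scaling", 0.10),
    ("ab_testing", 0.25),
    ("full_deployment", 1.00),
]


@dataclass(frozen=True)
class RolloutState:
    stage_index: int = 0
    active: bool = True


def next_state(state: RolloutState, slippage_bps: float,
               max_slippage_bps: float, loss_limit_breached: bool) -> RolloutState:
    """Apply one review decision to the rollout."""
    if not state.active:
        return state  # a deactivated feature stays off until manually reset
    if loss_limit_breached:
        return RolloutState(state.stage_index, active=False)
    if slippage_bps > max_slippage_bps:
        # Execution quality degraded: reduce the capital allocation.
        return RolloutState(max(state.stage_index - 1, 0), active=True)
    return RolloutState(min(state.stage_index + 1, len(STAGES) - 1), active=True)


def allocation(state: RolloutState) -> float:
    """Fraction of target capital the feature may currently use."""
    return STAGES[state.stage_index][1] if state.active else 0.0


if __name__ == "__main__":
    state = RolloutState()
    state = next_state(state, slippage_bps=1.0, max_slippage_bps=2.0,
                       loss_limit_breached=False)
    print(STAGES[state.stage_index][0], allocation(state))
```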

Reflection

The Continual Evolution of the Execution System

The deployment of a single Smart Trading feature, when viewed in isolation, is a tactical maneuver. However, when understood as a component of a larger, ongoing process, it becomes a strategic act of institutional evolution. The framework detailed here is a repeatable system for integrating new intelligence into the firm’s operational core. Each successful deployment refines this system, making it more robust, more efficient, and more capable of adapting to the ceaseless change of the market.

The true asset being built is not any single algorithm, but the organizational capacity to conceive, validate, and deploy these features in a disciplined and systematic manner. This capacity is the foundation of a durable competitive advantage in the modern financial landscape.

Therefore, the conclusion of one deployment cycle is merely the prelude to the next. The data gathered from a live feature provides the raw material for the next generation of enhancements. Performance metrics reveal new opportunities for optimization. Unforeseen market behavior exposes the limitations of existing models, sparking new avenues of research.

The process is a closed loop, a perpetual engine of innovation that drives the firm’s trading capabilities forward. The ultimate question for any institution is not simply how to build a single successful feature, but how to cultivate an environment where this process of systematic evolution can thrive. The answer to that question lies in the firm’s commitment to a culture of rigor, collaboration, and continuous improvement.
